Weekly AI Roundup: AGI Price Tags and the Rise of DeepSeek-V3

In this week’s exploration of the artificial intelligence landscape, we dive into two significant developments: the financial terms attached to achieving Artificial General Intelligence (AGI), and a new open model emerging from AI research.

AGI with a Price Tag: OpenAI and Microsoft’s Ambitious Vision

OpenAI’s quest for Artificial General Intelligence (AGI) has recently become entangled with financial aspirations. According to reports citing confidential documents, an agreement between Microsoft and OpenAI stipulates that AGI will be considered achieved once OpenAI’s systems can generate $100 billion in profits. The agreement underscores the economic stakes of AGI: it is treated not merely as a technological milestone, but as a lucrative one.

Both Microsoft and OpenAI describe AGI as highly autonomous systems that surpass human capabilities in economically significant tasks. In the companies’ words:

“AGI would be capable of performing a range of functions at or beyond human levels.”

However, the path to this financial benchmark appears daunting. OpenAI is projected to lose $5 billion in 2024, which underlines how hard it is to turn AI advances into profit. Recent funding terms reportedly make investments from giants like Microsoft and Nvidia contingent on OpenAI transitioning into a Public Benefit Corporation (PBC). The transition matters to both investors, which are funding a range of AI initiatives, and to Microsoft in particular, which depends heavily on OpenAI’s technologies.

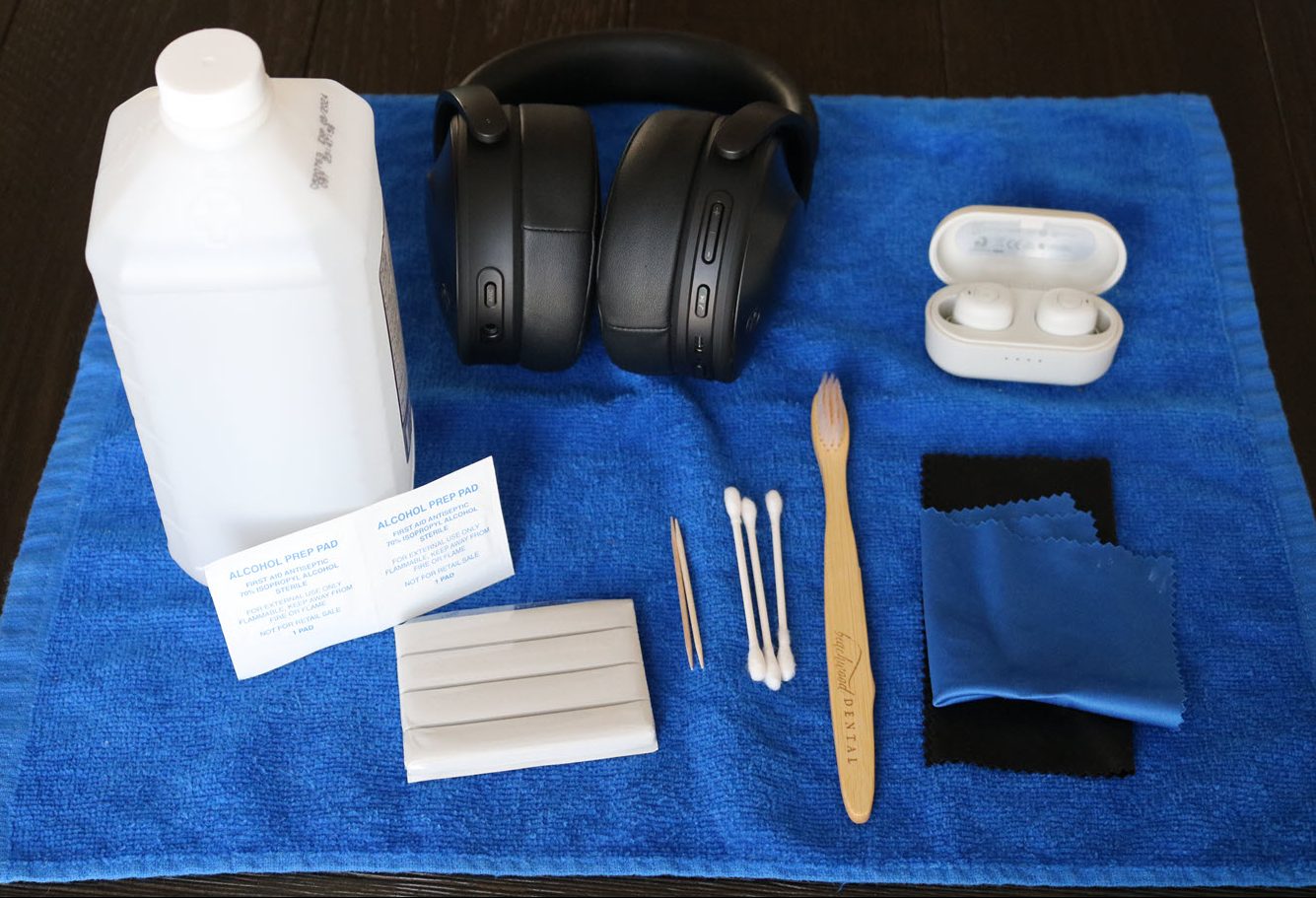

The path to AGI: A balance between aspiration and finance.

This agreement suggests that it may take time before we witness genuine AGI technology. Microsoft, meanwhile, may leverage its partnership to integrate powerful AI applications without any formal recognition of AGI status in the interim.

DeepSeek’s Leap Forward: The 671B Parameter Model

In an exciting breakthrough, DeepSeek, a rising star in the AI ecosystem, has launched DeepSeek-V3, an open-source large language model comprising 671 billion parameters. The model can generate text, write software code, and handle a variety of related tasks, and DeepSeek reports that it outperforms numerous existing models in benchmark tests.

DeepSeek-V3 employs a mixture-of-experts (MoE) architecture: rather than running the whole network for every input, a router activates only the most relevant expert sub-networks for each token, so roughly 37 billion of the 671 billion parameters are active at a time. This reduces the compute cost per token while keeping the model’s full capacity available. According to DeepSeek, training used 14.8 trillion tokens and about 2.788 million H800 GPU hours, a modest budget for a model of this size.
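To make the routing idea concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not DeepSeek-V3's actual implementation: the softmax gate, the toy "experts" that merely scale their input, and every name (`moe_forward`, `make_expert`, `router_weights`) are assumptions chosen for illustration.

```python
import math
import random


def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical expert: stands in for a feed-forward sub-network."""
    return lambda x: [scale * v for v in x]


def moe_forward(x, router_weights, experts, top_k=2):
    """Route one token vector through only its top_k highest-scoring experts."""
    # Router: one score per expert (dot product of the token with a router row).
    scores = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    probs = softmax(scores)

    # Keep only the top_k experts; all other experts are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)

    # Combine the chosen experts' outputs, weighted by renormalized gate values.
    out = [0.0] * len(x)
    for i in chosen:
        gate = probs[i] / norm
        expert_out = experts[i](x)
        out = [o + gate * y for o, y in zip(out, expert_out)]
    return out, chosen


# Illustrative usage with made-up sizes: 8 experts, 2 active per token.
random.seed(0)
dim, n_experts = 4, 8
router_weights = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(n_experts)]
experts = [make_expert(i + 1) for i in range(n_experts)]
token = [0.5, -1.0, 0.25, 2.0]

output, chosen = moe_forward(token, router_weights, experts, top_k=2)
print("experts used:", chosen, "out of", n_experts)
```

Only the selected experts run for a given token, which is why per-token compute grows with `top_k` rather than with the total number of experts.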

Multi-head latent attention (MLA) and multi-token prediction (MTP) are standout features, enhancing the model’s performance in text processing tasks and improving overall response time. In comparative evaluations against previous models and competitors, DeepSeek-V3 outperformed others in coding and math benchmarks, positioning itself as a formidable player among large language models.

New frontiers in language model technology.

DeepSeek has made the weights for this powerful model available on Hugging Face, embodying the ethos of collaboration and advancement within the AI sector.

Conclusion

As we navigate the evolving landscape of artificial intelligence, the juxtaposition of financial stakes with groundbreaking model innovations paints a vivid picture of both potential and responsibility. With companies like OpenAI and DeepSeek leading the charge, the future of AI holds exciting possibilities fueled by creativity and rigorous inquiry.

Stay tuned for more insights and breakthroughs as the week unfolds!