Unlocking the Power of LLM-Generated Code with LLM Sandbox

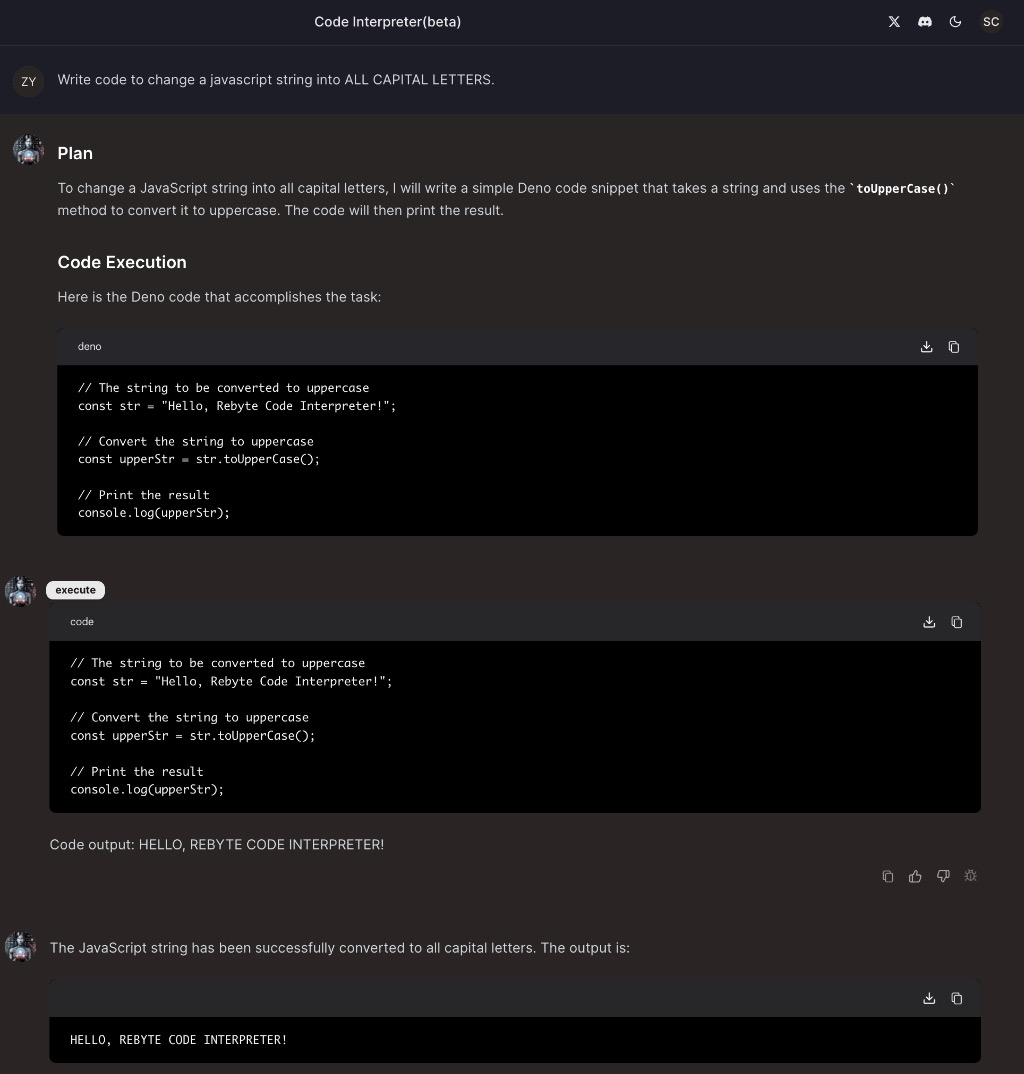

As AI researchers, developers, and hobbyists push the boundaries of what large language models (LLMs) can do, they increasingly need a secure and efficient way to run the code those models generate. LLM Sandbox addresses this need: it is a lightweight, portable environment that runs LLM-generated code in isolated Docker containers rather than directly on the host.

“Isolation: The key to secure code execution”

LLM Sandbox was created to make it simpler to integrate code interpreters into LLM applications without putting the security and stability of the host system at risk. Its interface lets users set up, manage, and execute code within a controlled Docker environment, which streamlines development.

Key Features of LLM Sandbox

• Easy Setup: Create sandbox environments with minimal configuration.
• Isolation: Run code in isolated Docker containers to protect your host system.
• Flexibility: Support for multiple programming languages, including Python, Java, JavaScript, C++, Go, and Ruby.
• Portability: Use predefined Docker images or custom Dockerfiles.
• Scalability: Integrate with Kubernetes and remote Docker hosts.
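
To make the isolation idea concrete, here is a minimal Python sketch of how one snippet could be run in a throwaway, locked-down container with the standard `docker run` CLI. It only builds the command line. The `LANGUAGE_IMAGES` table, its image tags, and the `docker_run_argv` helper are hypothetical illustrations, not LLM Sandbox's internals, and compiled languages such as Java, C++, and Go would need an extra compile step that is omitted here.

```python
import shlex

# Hypothetical language -> (Docker image, interpreter command) table.
# These image tags are illustrative assumptions, not LLM Sandbox's defaults.
LANGUAGE_IMAGES = {
    "python": ("python:3.11-slim", ["python", "-c"]),
    "javascript": ("node:20-slim", ["node", "-e"]),
    "ruby": ("ruby:3.3-slim", ["ruby", "-e"]),
}


def docker_run_argv(lang: str, code: str, memory: str = "256m") -> list[str]:
    """Build a `docker run` command that executes `code` in a throwaway container."""
    if lang not in LANGUAGE_IMAGES:
        raise ValueError(f"unsupported language: {lang!r}")
    image, interpreter = LANGUAGE_IMAGES[lang]
    return [
        "docker", "run",
        "--rm",                # remove the container when it exits
        "--network", "none",   # no network access for generated code
        "--memory", memory,    # cap memory usage
        "--pids-limit", "64",  # limit the number of processes (mitigates fork bombs)
        "--read-only",         # mount the container's root filesystem read-only
        image,
        *interpreter, code,
    ]


argv = docker_run_argv("python", "print(2 + 2)")
print(shlex.join(argv))  # shell-quoted command, handy for logging
```

On a machine with Docker installed, passing `argv` to `subprocess.run` would execute the snippet; LLM Sandbox's own container settings may differ from the flags chosen here.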

Getting Started with LLM Sandbox

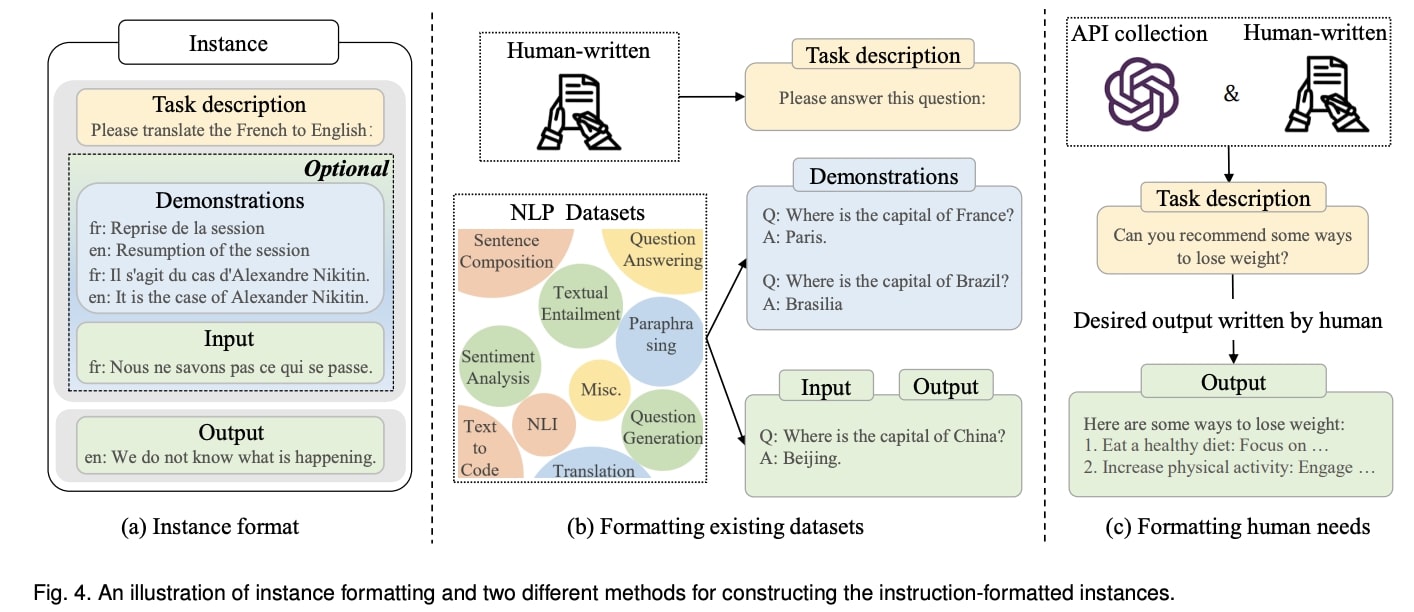

To get started, install LLM Sandbox with either Poetry or pip. A session then follows a simple lifecycle: create a SandboxSession object with the desired configuration; open the session, which builds or pulls the Docker image and starts the container; run code inside the sandbox; and close the session, which stops and removes the container.
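
The open, run, close lifecycle can be sketched as a small context manager. To keep the example runnable without Docker, the hypothetical `LocalSession` class below runs code in a child Python process inside a temporary directory. That provides no real isolation and is not LLM Sandbox's SandboxSession API, whose constructor arguments and return types depend on the version you install. The comments mark where a container-backed session would build or pull the image, start the container, and tear it down.

```python
import subprocess
import sys
import tempfile
from dataclasses import dataclass


@dataclass
class RunResult:
    exit_code: int
    stdout: str
    stderr: str


class LocalSession:
    """Hypothetical stand-in mirroring the open -> run -> close lifecycle.

    Runs code in a child Python process inside a temporary directory.
    This is NOT container isolation; it only illustrates the lifecycle.
    """

    def __init__(self, timeout: float = 10.0):
        self.timeout = timeout
        self._workdir = None

    def open(self) -> None:
        # A container-backed session would build/pull the image and start the container here.
        self._workdir = tempfile.TemporaryDirectory()

    def run(self, code: str) -> RunResult:
        if self._workdir is None:
            raise RuntimeError("session is not open")
        proc = subprocess.run(
            [sys.executable, "-c", code],
            cwd=self._workdir.name,
            capture_output=True,
            text=True,
            timeout=self.timeout,  # raises subprocess.TimeoutExpired on runaway code
        )
        return RunResult(proc.returncode, proc.stdout, proc.stderr)

    def close(self) -> None:
        # A container-backed session would stop and remove the container here.
        if self._workdir is not None:
            self._workdir.cleanup()
            self._workdir = None

    def __enter__(self):
        self.open()
        return self

    def __exit__(self, *exc):
        self.close()
        return False  # do not swallow exceptions


with LocalSession() as session:
    result = session.run("print('Hello, World!')")
    print(result.stdout, end="")
```

Tying open and close to `__enter__` and `__exit__` means cleanup still happens if the generated code fails or times out, which is a good reason to prefer a `with` block with a real sandbox session as well.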

“Running code in a secure environment”

LLM Sandbox also integrates with LangChain and LlamaIndex, so code generated by an LLM in either framework can run in the same safe, isolated environment. For example, a LangChain application can hand model-generated code to LLM Sandbox for execution instead of running it on the host.
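
Whichever framework produces the code, the model usually returns it inside a Markdown code fence that has to be extracted before execution. The sketch below uses two hypothetical helpers, `extract_code_blocks` and `run_llm_reply`, to pull out each fenced block with a regular expression and pass it to any `execute(lang, code)` callable, such as a function that forwards to a sandbox session. It does not use the LangChain or LlamaIndex APIs, and treating untagged blocks as Python is an assumption.

```python
import re
from typing import Callable

FENCE = "`" * 3  # built programmatically so this snippet can itself sit inside a Markdown fence

# A fence, an optional language tag, a newline, then the shortest body up to the next fence.
_BLOCK_RE = re.compile(FENCE + r"([\w+-]*)[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code_blocks(reply: str) -> list[tuple[str, str]]:
    """Return (language, code) pairs for every fenced block in an LLM reply."""
    # Assumption: a block without a language tag is treated as Python.
    return [(lang or "python", code) for lang, code in _BLOCK_RE.findall(reply)]


def run_llm_reply(reply: str, execute: Callable[[str, str], str]) -> list[str]:
    """Send each extracted block to `execute(lang, code)`, e.g. a sandbox session."""
    return [execute(lang, code) for lang, code in extract_code_blocks(reply)]
```

Keeping `execute` as a plain callable separates parsing from execution, so the same extraction logic works whether the code ends up in a local container, a remote Docker host, or a Kubernetes pod.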

Conclusion

LLM Sandbox gives AI researchers, developers, and hobbyists a practical way to execute code generated by LLMs securely. Its ease of use, flexibility, and scalability make it a strong option for integrating code interpreters into LLM applications.

“Unlocking the power of LLM-generated code”
