The Limitations of Large Language Models (LLMs)

The rise of Large Language Models (LLMs) and Generative AI promises transformative automation and innovation across industries. However, as organizations rush to develop next-generation AI applications, they are encountering significant challenges. One key limitation is that an LLM knows only what its training data contains, and that data rarely includes an organization's internal knowledge.

According to experts, LLMs possess vast knowledge of public subjects such as historical events and literature, but they have no insight into a specific organization's customers, products, or employees. This gap is a critical obstacle to using LLMs effectively in an enterprise context.

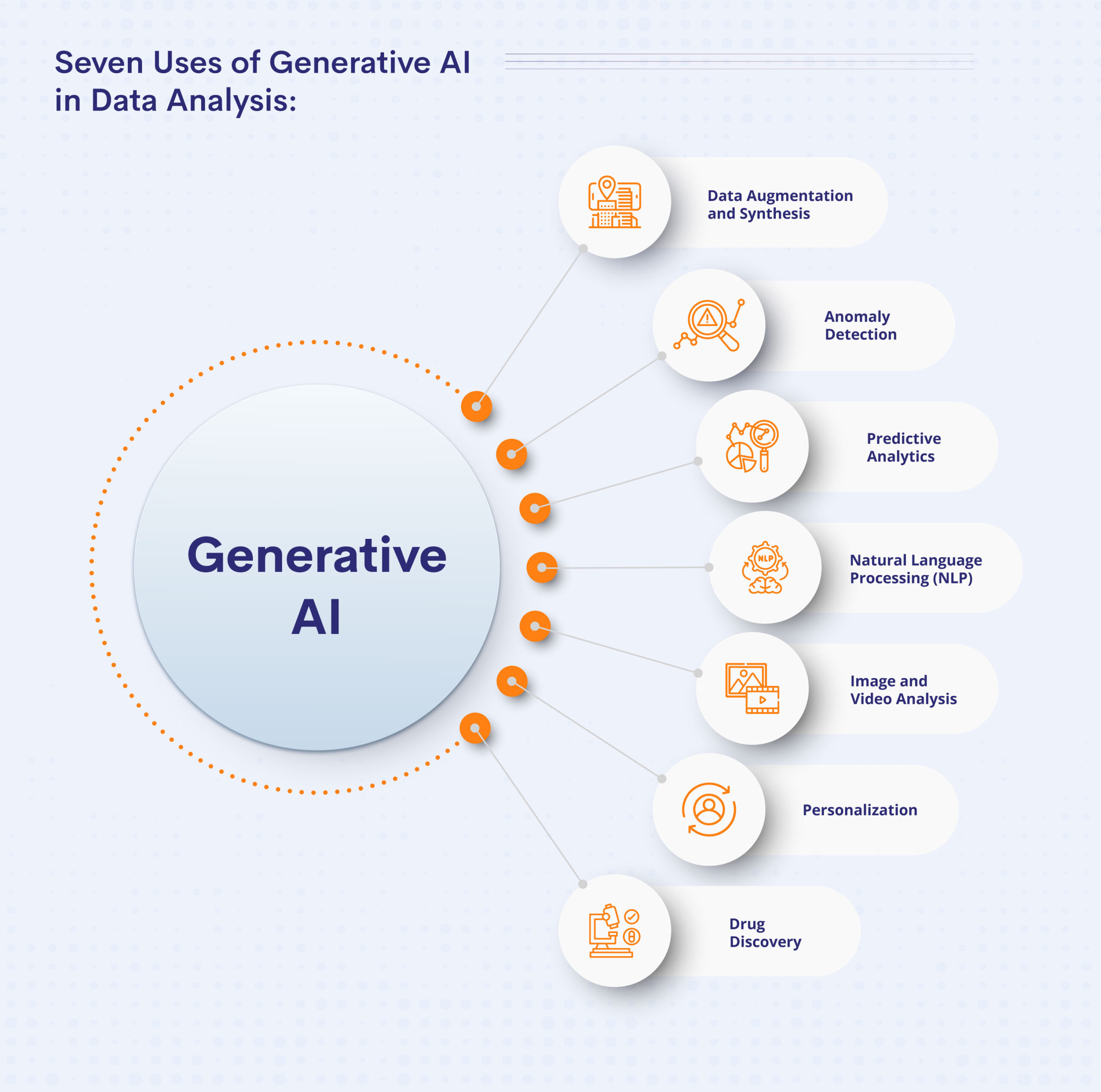

Augmentation: Empowering Generative AI with Knowledge

To address the knowledge gap, experts suggest augmentation: supplying Generative AI with the organizational knowledge it lacks. Re-training existing models on corporate data is a viable option, but it is often complex and costly, and the models must be re-trained continuously to stay current.

An emerging solution, known as Retrieval-Augmented Generation (RAG), offers a promising way to augment LLMs with additional data securely and cost-effectively. RAG supplies the relevant knowledge at prompt time rather than embedding it in the model, so it extends the AI's capabilities without re-training and without compromising data security.
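
The prompt-time integration described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `Document` type, the sample `KNOWLEDGE_BASE`, and the keyword-overlap scoring are all assumptions made for the example. Real RAG systems typically retrieve with vector embeddings and then send the resulting prompt to an LLM, a step omitted here.

```python
import string
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


# Hypothetical organizational knowledge the LLM was never trained on.
KNOWLEDGE_BASE = [
    Document("policy", "Acme X1 return policy: 30 days with receipt."),
    Document("onboarding", "Employee onboarding takes two weeks."),
    Document("colors", "Acme X1 ships in blue and red."),
]


def tokenize(text: str) -> set[str]:
    """Lowercase words with surrounding punctuation stripped."""
    return {w.strip(string.punctuation).lower() for w in text.split()} - {""}


def retrieve(question: str, docs: list[Document], k: int = 2) -> list[Document]:
    """Return up to k documents sharing the most words with the question."""
    q_tokens = tokenize(question)
    scored = [(len(q_tokens & tokenize(d.text)), d) for d in docs]
    hits = [pair for pair in scored if pair[0] > 0]
    hits.sort(key=lambda pair: pair[0], reverse=True)  # stable sort keeps ties in input order
    return [d for _, d in hits[:k]]


def build_prompt(question: str, docs: list[Document], k: int = 2) -> str:
    """Place retrieved knowledge in the prompt instead of in the model."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in retrieve(question, docs, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("What is the return policy for the Acme X1?", KNOWLEDGE_BASE))
```

Because the knowledge lives outside the model, updating an entry in the knowledge base changes the next answer immediately, with no re-training.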

Illustration of GenAI Agent augmented with organizational knowledge

Data Management Foundation for GenAI Applications

As organizations strive to harness the full potential of Generative AI, building a robust data management foundation becomes imperative. A unified data access layer, coupled with a semantic layer, plays a crucial role in providing rich context and facilitating seamless data integration for AI applications.

Experts emphasize the importance of a logical data layer equipped with advanced query optimization techniques to ensure efficient data access and utilization by LLM-powered AI applications. Additionally, stringent data governance and security measures are essential to mitigate the risks of data breaches and ensure compliance with data protection regulations.
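
As one illustration of the governance point, the sketch below masks columns that a given role is not permitted to see before any rows reach an LLM prompt. The role names, the `COLUMN_POLICY` table, and the `apply_policy` helper are hypothetical. Governance platforms enforce such rules centrally, and with more nuance than a single dictionary.

```python
MASK = "***"

# Hypothetical policy: the columns each role may see in clear text.
COLUMN_POLICY = {
    "support_agent": {"customer_id", "name", "plan"},
    "analyst": {"customer_id", "plan"},
}


def apply_policy(rows: list[dict], role: str) -> list[dict]:
    """Return copies of rows with every non-permitted column masked.

    Unknown roles are permitted nothing, so all of their values are masked.
    """
    allowed = COLUMN_POLICY.get(role, set())
    return [
        {col: (val if col in allowed else MASK) for col, val in row.items()}
        for row in rows
    ]


if __name__ == "__main__":
    rows = [{"customer_id": 7, "name": "Ada", "email": "ada@example.com", "plan": "pro"}]
    print(apply_policy(rows, "analyst"))
```

Masking before prompt construction means sensitive values never enter the model's context, whatever the model later does with that context.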

Leveraging Logical Data Fabric for AI Integration

In the quest to power next-generation AI applications, the logical data fabric emerges as a critical enabler. Platforms such as the Denodo Platform use data virtualization to give AI applications a single gateway to integrated data, without moving or consolidating that data.

The logical data fabric provides a unified access point, a rich semantic layer, and query optimization capabilities, streamlining the interaction between LLMs and enterprise data repositories. This integration accelerates the development of powerful AI agents and enhances the overall efficiency of AI-driven processes.
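
To make the idea of a unified access point with a semantic layer concrete, here is a small Python sketch. It does not use the Denodo Platform or its API. Two in-memory SQLite databases attached to one connection stand in for separate source systems (a hypothetical CRM and ERP), and a dictionary stands in for the semantic layer by mapping a business-friendly name to a cross-source query.

```python
import sqlite3


def build_gateway() -> sqlite3.Connection:
    """One connection in front of two separate (attached) source databases."""
    conn = sqlite3.connect(":memory:")
    conn.execute("ATTACH DATABASE ':memory:' AS crm")  # stand-in for a CRM system
    conn.execute("ATTACH DATABASE ':memory:' AS erp")  # stand-in for an ERP system
    conn.execute("CREATE TABLE crm.customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE erp.orders (customer_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO crm.customers VALUES (?, ?)",
                     [(1, "Acme"), (2, "Globex")])
    conn.executemany("INSERT INTO erp.orders VALUES (?, ?)",
                     [(1, 120.0), (1, 80.0), (2, 50.0)])
    return conn


# Hypothetical semantic layer: business names and descriptions an AI agent
# can read, each mapped to a query that spans both sources.
SEMANTIC_LAYER = {
    "customer_revenue": {
        "description": "Total order amount per customer name.",
        "sql": (
            "SELECT c.name, SUM(o.amount) AS revenue "
            "FROM crm.customers AS c "
            "JOIN erp.orders AS o ON o.customer_id = c.id "
            "GROUP BY c.name ORDER BY c.name"
        ),
    },
}


def query_view(conn: sqlite3.Connection, view_name: str) -> list[tuple]:
    """Run the query behind a business-level view name."""
    return conn.execute(SEMANTIC_LAYER[view_name]["sql"]).fetchall()


if __name__ == "__main__":
    print(query_view(build_gateway(), "customer_revenue"))
    # prints [('Acme', 200.0), ('Globex', 50.0)]
```

The agent asks for `customer_revenue` by name and never needs to know which system holds customers and which holds orders. That separation is what the semantic layer and the single access point provide.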

Denodo Platform Augmenting and Supporting an AI Interaction

Embracing a Generative AI-Enabled Future

As organizations prepare for a future driven by Generative AI, the focus shifts towards optimizing data management practices to unlock the full potential of AI capabilities. By establishing a robust data architecture and management foundation, organizations can not only leverage Generative AI effectively but also gain a sustainable competitive edge in the evolving digital landscape.

In conclusion, the journey towards harnessing the transformative power of LLM-powered AI agents requires continuous technological advancements and a strategic focus on data management. By embracing innovative solutions and evolving data management practices, organizations can pave the way for a successful transition into a Generative AI-enabled future.