Enterprise AI: Lessons from the Early Adopters

The race to harness artificial intelligence (AI) has reached a fever pitch across sectors, with businesses exploring how generative AI (gen AI) can give them an edge. The latest phase of this race is shaped largely by large language models (LLMs), which underpin applications such as text generation, translation, and summarization. However, a significant obstacle remains for enterprise-scale adoption: the privacy and confidentiality of sensitive business data.

The Rise of Generative AI

Generative AI has become central to the business landscape, and LLMs sit at its core. Because they can produce coherent, contextually relevant text, these models drive automation that can improve productivity and operational efficiency. Applications range from automating customer service responses to generating reports.

But amid these rapid advancements, corporate data security concerns loom large. Companies hold sensitive information, and integrating LLMs into their workflows requires balancing operational efficiency against the protection of proprietary data.

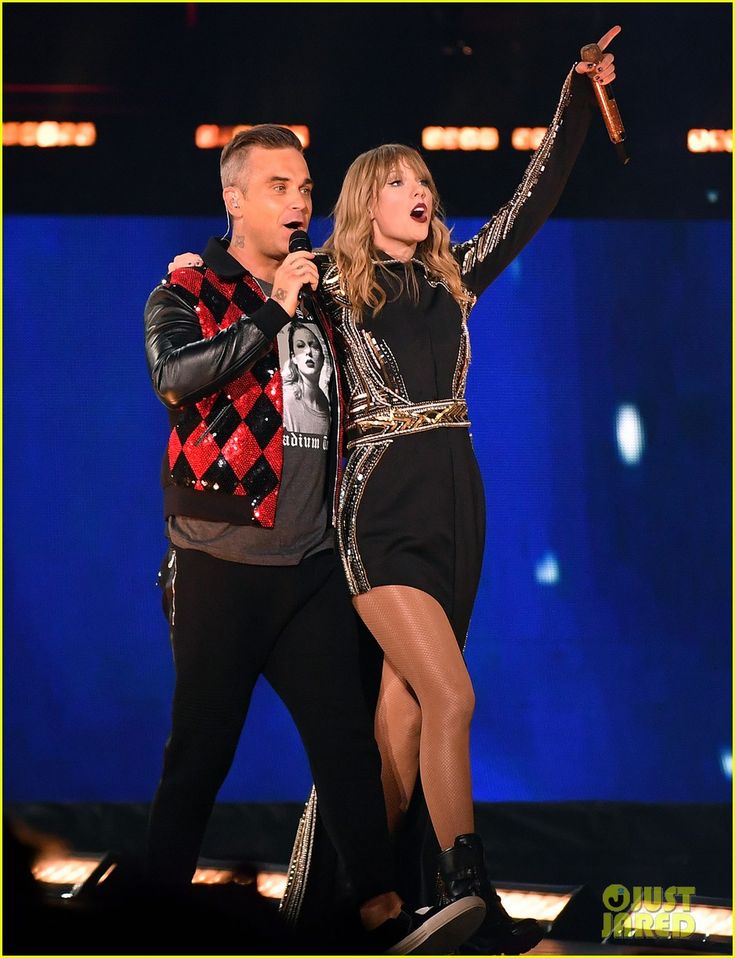

Understanding the complexities of enterprise AI adoption.

Challenges in Implementation

The confidential nature of enterprise data presents a dual challenge for organizations looking to implement LLMs. First, although these tools can provide significant benefits, incorporating them without exposing or compromising data takes meticulous planning. Second, businesses must comply with regulations such as GDPR and CCPA, which impose stringent requirements on data use and storage.

Moreover, as enterprises adopt AI models, they encounter issues surrounding data bias and model transparency. Ensuring that language models do not perpetuate existing biases requires a conscious effort in dataset curation and model development. Companies need to be proactive in training their AI systems on diverse datasets to avoid unintended consequences that could harm their reputation.

Case Studies: Success and Lessons Learned

Insights gleaned from early adopters of generative AI can offer essential lessons for businesses contemplating this transition. For instance, one financial institution successfully integrated an LLM into its customer support system. By efficiently handling common inquiries, the AI freed up human agents to tackle more complex cases, ultimately improving customer satisfaction levels. However, this implementation was carefully designed with rigorous data protocols to ensure that sensitive information remained protected.
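The article does not describe what the institution's data protocols actually were. As one illustration of the general idea, a common safeguard is to mask obviously sensitive values before a customer message is passed to an LLM. The sketch below is a minimal example under that assumption; the `redact` function, the `PATTERNS` table, and the two regular expressions are hypothetical and far from exhaustive.

```python
import re

# Hypothetical, deliberately small pattern set for illustration only.
# A production system would need a vetted and much broader set of detectors.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replace each matched sensitive value with a bracketed label."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    message = "Reach me at jane.doe@example.com about card 4111 1111 1111 1111."
    # Only the redacted text would be forwarded to the model.
    print(redact(message))
```

Masking at the boundary like this keeps the raw values inside the organization's own systems, though pattern matching alone will miss many kinds of sensitive data and should be treated as one layer among several.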

Another example can be found in the retail sector, where a major company employed LLMs to forecast inventory needs based on historical customer data. Through its predictive capabilities, the organization saw a considerable drop in overstock situations, enhancing its supply chain effectiveness. These initiatives highlight the need for a robust strategy that includes a commitment to data privacy as a top priority.

Strategies for ensuring data security in AI implementation.

Future Outlook

As we look ahead, the role of LLMs in business will only continue to grow, shaping how organizations operate and interact with their customers. Industry leaders must remain vigilant about the risks associated with AI adoption while simultaneously embracing the transformative potential it offers. This dual focus on innovation and security will be instrumental in achieving long-lasting success.

A collaborative approach involving IT, legal, and compliance teams is crucial to navigating the murky waters of enterprise AI. By establishing clear policies and procedures, organizations can mitigate risks while capitalizing on the advantages of generative AI and its underlying technologies.

In conclusion, the journey toward fully realizing the potential of generative AI is complex and requires careful consideration of data privacy concerns. As demonstrated by early adopters, thoughtful integration strategies can pave the way for significant advancements in operational efficiency and customer engagement without sacrificing data integrity.

Now is the time for organizations to embrace the future of AI, taking bold steps while remaining steadfast in their commitment to security. The lessons learned from those who have gone before will provide a valuable roadmap for companies eager to unlock the full potential of AI technology in the enterprise landscape.

The evolving landscape of AI in business.