The Future of Artificial Intelligence: Unlocking New Frontiers with Advanced Prompt Engineering

As a journalist covering the latest developments in AI, I’m thrilled to explore the rapidly evolving field of prompt engineering. From enhancing reasoning capabilities to integrating large language models (LLMs) with external tools and programs, the latest advances in prompt engineering are unlocking new frontiers in artificial intelligence.

Advanced Prompting Strategies for Complex Problem-Solving

While Chain-of-Thought (CoT) prompting, which asks the model to write out intermediate reasoning steps before giving its answer, has proven effective for many reasoning tasks, researchers have explored more advanced strategies for even harder problems. One such approach is Least-to-Most Prompting, which first breaks a complex problem down into simpler sub-problems and then solves them in sequence, feeding the answer to each sub-problem into the prompt for the next until the original problem is solved.
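In Least-to-Most Prompting, the two stages (decomposition, then sequential solving) are both prompts to the same model, and each sub-problem's answer is carried forward into the next prompt. The minimal Python sketch below assumes a hypothetical `ask_model(prompt)` function; here it is a canned stub so the example runs offline, and the prompt wording is an assumption rather than the paper's exact template.

```python
from typing import Callable


def least_to_most(problem: str, ask_model: Callable[[str], str]) -> str:
    # Stage 1 (decomposition): ask for simpler sub-questions, one per line,
    # ordered from easiest to the one that answers the original problem.
    decomposition = ask_model(
        f"Break this problem into simpler sub-questions, one per line:\n{problem}"
    )
    sub_questions = [q.strip() for q in decomposition.splitlines() if q.strip()]

    # Stage 2 (sequential solving): every prompt carries the problem plus all
    # earlier sub-questions and answers, so later steps can build on them.
    context = problem
    answer = ""
    for question in sub_questions:
        answer = ask_model(f"{context}\nQ: {question}\nA:").strip()
        context += f"\nQ: {question}\nA: {answer}"
    # The answer to the last sub-question is the answer to the whole problem.
    return answer


def stub_model(prompt: str) -> str:
    # Hypothetical canned replies standing in for a real LLM call.
    if prompt.startswith("Break this problem"):
        return "How many apples does Anna have?\nHow many apples do they have together?"
    last_question = prompt.rsplit("Q: ", 1)[-1]
    if last_question.startswith("How many apples does Anna have?"):
        return "Anna has 5 + 2 = 7 apples."
    return "Together they have 5 + 7 = 12 apples."


problem = ("Elsa has 5 apples. Anna has 2 more apples than Elsa. "
           "How many apples do they have together?")
print(least_to_most(problem, stub_model))
```

In the original work both stages use few-shot examples of their own; the zero-shot wording here is a simplification to keep the sketch short.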

Integrating LLMs with External Tools and Programs

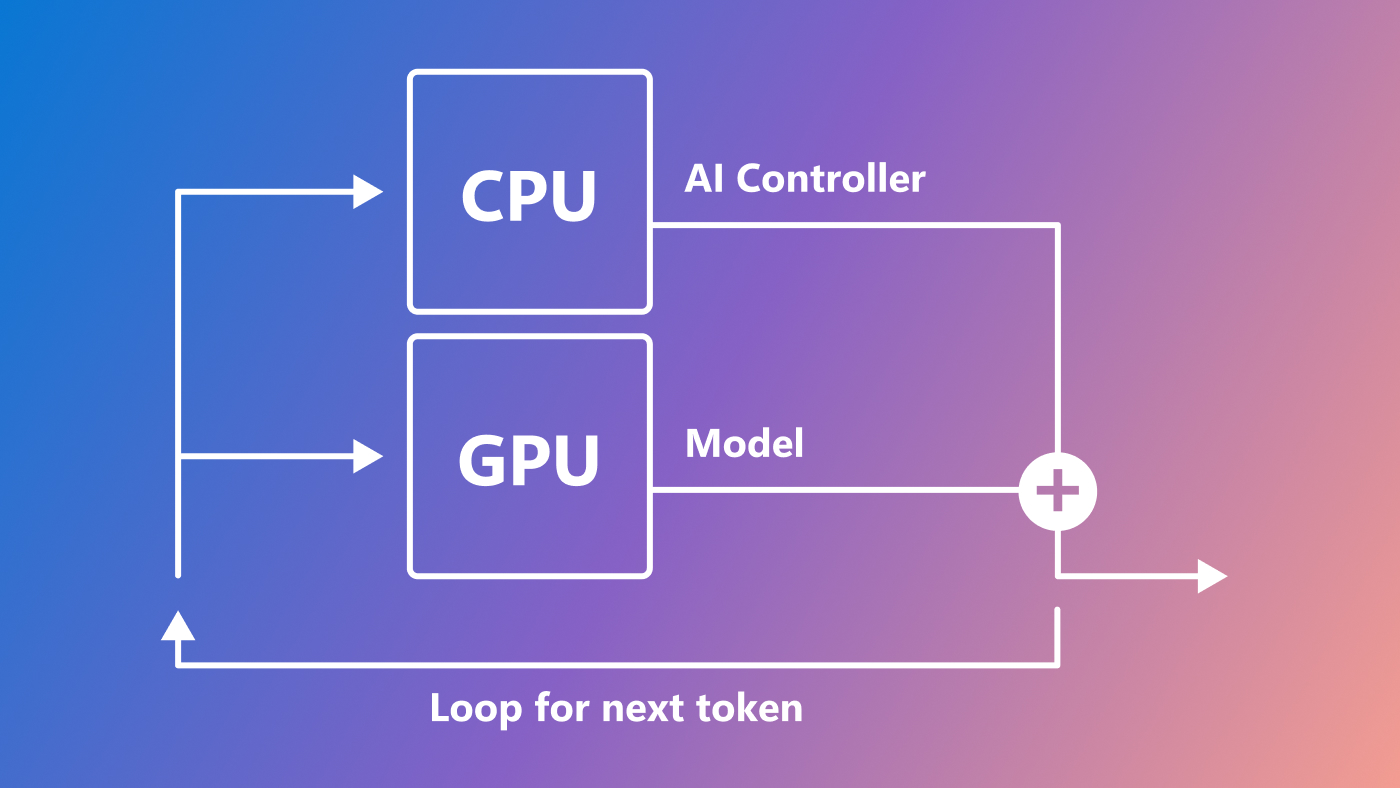

While LLMs are incredibly powerful, they have inherent limitations, such as an inability to access information newer than their training data or to perform precise arithmetic reliably. To address these drawbacks, researchers have developed techniques that let LLMs call external tools and programs.

Fundamental Prompting Strategies

Zero-Shot Prompting

Zero-shot prompting involves describing the task in the prompt and asking the model to solve it without any examples.
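To make this concrete, here is a minimal Python sketch of how a zero-shot prompt might be assembled. The sentiment task and the `Text:`/`Answer:` template are illustrative assumptions, not a standard format.

```python
def zero_shot_prompt(task: str, text: str) -> str:
    # Task description plus the input; no solved examples are included.
    return f"{task}\n\nText: {text}\nAnswer:"


prompt = zero_shot_prompt(
    "Classify the sentiment of the text as positive, negative, or neutral.",
    "The battery died after two hours.",
)
print(prompt)
```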

Few-Shot Prompting

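Few-shot prompting builds on zero-shot prompting by including several worked examples (demonstrations) of the task in the prompt, which often improves the model's accuracy and output format.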
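Using the same hypothetical `Text:`/`Answer:` template, a few-shot prompt places solved examples ahead of the new input so the model can infer the expected behavior and format from them. The examples below are made up for illustration.

```python
from typing import List, Tuple


def few_shot_prompt(task: str, examples: List[Tuple[str, str]], text: str) -> str:
    # Each demonstration pairs an input with its expected answer.
    demos = "\n\n".join(f"Text: {x}\nAnswer: {y}" for x, y in examples)
    # The new input comes last, with the answer left for the model to fill in.
    return f"{task}\n\n{demos}\n\nText: {text}\nAnswer:"


examples = [
    ("I love this phone.", "positive"),
    ("The screen cracked on day one.", "negative"),
]
prompt = few_shot_prompt(
    "Classify the sentiment of the text as positive, negative, or neutral.",
    examples,
    "The battery died after two hours.",
)
print(prompt)
```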

Instruction Prompting

Instruction prompting explicitly describes the desired output, which is particularly effective with models trained to follow instructions.
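A brief sketch of an instruction prompt, assuming a made-up review-extraction task: the instruction pins down the output format (a JSON object with two named keys), which makes the reply easy to check programmatically. The model reply is hard-coded for illustration.

```python
import json


def instruction_prompt(review: str) -> str:
    # The instruction states exactly what the output must look like.
    return (
        "Extract the product and the problem from the customer review below.\n"
        'Respond with only a JSON object with the keys "product" and "problem".\n\n'
        f"Review: {review}"
    )


def parse_reply(reply: str) -> dict:
    # Because the format was specified, the reply can be parsed and checked.
    data = json.loads(reply)
    if set(data) != {"product", "problem"}:
        raise ValueError(f"unexpected keys: {sorted(data)}")
    return data


print(instruction_prompt("My X200 headphones stopped charging after a week."))
# A hypothetical reply from an instruction-tuned model:
reply = '{"product": "X200 headphones", "problem": "stopped charging"}'
print(parse_reply(reply))
```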

Recent Advances in Prompt Engineering

Auto-CoT (Automatic Chain-of-Thought Prompting)

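Auto-CoT is a method that automates the construction of chain-of-thought demonstrations: it clusters a pool of questions, picks a representative question from each cluster, and has the model generate that question's reasoning chain with zero-shot CoT ("Let's think step by step"), eliminating the need for manually crafted examples.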
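Auto-CoT, as its authors describe it, embeds questions with Sentence-BERT, clusters them with k-means, and for each cluster takes the question nearest the centroid whose zero-shot CoT rationale passes simple length heuristics. The sketch below follows that outline under loud assumptions: the 2-D embeddings are made up rather than produced by an encoder, `stub_model` is a hypothetical stand-in for an LLM, and the length heuristic is reduced to counting lines.

```python
import math
from typing import Callable, List, Sequence, Tuple


def dist(a: Sequence[float], b: Sequence[float]) -> float:
    # Euclidean distance between two embedding vectors.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def kmeans(points: List[Tuple[float, ...]], k: int, iterations: int = 10):
    # Plain k-means; seeding with the first k points keeps the demo deterministic.
    centroids = [tuple(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        labels = [min(range(k), key=lambda c: dist(p, centroids[c])) for p in points]
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = tuple(sum(xs) / len(members) for xs in zip(*members))
    return labels, centroids


def auto_cot_demos(questions: List[str], embeddings, k: int,
                   ask_model: Callable[[str], str], max_steps: int = 5) -> List[str]:
    # Stage 1: cluster the questions so the demonstrations are diverse.
    labels, centroids = kmeans(embeddings, k)
    demos = []
    for c in range(k):
        # Stage 2: try this cluster's questions from nearest to farthest from its centroid.
        members = sorted((i for i, label in enumerate(labels) if label == c),
                         key=lambda i: dist(embeddings[i], centroids[c]))
        for i in members:
            # Zero-shot CoT writes the rationale; no hand-crafted example is needed.
            rationale = ask_model(f"Q: {questions[i]}\nA: Let's think step by step.")
            # Simplified heuristic: skip rationales longer than max_steps lines.
            if len(rationale.splitlines()) <= max_steps:
                demos.append(f"Q: {questions[i]}\nA: Let's think step by step. {rationale}")
                break
    return demos


def stub_model(prompt: str) -> str:
    # Hypothetical stand-in for an LLM call.
    return f"(model-written reasoning for: {prompt.splitlines()[0][3:]})"


questions = [
    "A shop sells 3 pens for $2. How much do 12 pens cost?",
    "Tom is twice as old as Ann, who is 7. How old is Tom?",
    "A train travels at 60 km/h for 3 hours. How far does it go?",
    "Sara is 4 years older than Ben, who is 10. How old is Sara?",
    "Apples cost $0.50 each. How much do 8 apples cost?",
    "Mia is 3 years younger than Leo, who is 12. How old is Mia?",
]
# Made-up 2-D "embeddings": x roughly tracks rates and prices, y tracks ages.
embeddings = [(0.9, 0.1), (0.1, 0.9), (0.8, 0.2), (0.2, 0.8), (0.85, 0.05), (0.15, 0.95)]

for demo in auto_cot_demos(questions, embeddings, k=2, ask_model=stub_model):
    print(demo, end="\n\n")
```

The resulting demonstrations would then be prepended to a new question just like hand-written few-shot examples.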

Complexity-Based Prompting

This technique selects examples with the highest complexity (i.e., the most reasoning steps) to include in the prompt.
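A minimal sketch of complexity-based selection, assuming a pool of annotated (question, rationale) pairs and treating each non-empty line of a rationale as one reasoning step. The pool and the step-counting rule are assumptions made for illustration.

```python
from typing import List, Tuple

Example = Tuple[str, str]  # (question, annotated step-by-step rationale)


def count_steps(rationale: str) -> int:
    # Assumption: each non-empty line of a rationale is one reasoning step.
    return sum(1 for line in rationale.splitlines() if line.strip())


def select_complex_examples(pool: List[Example], k: int) -> List[Example]:
    # Most steps first; sorted() stays stable with reverse=True, so ties keep pool order.
    return sorted(pool, key=lambda ex: count_steps(ex[1]), reverse=True)[:k]


def build_prompt(demos: List[Example], question: str) -> str:
    shots = "\n\n".join(f"Q: {q}\nA: {r}" for q, r in demos)
    return f"{shots}\n\nQ: {question}\nA:"


pool = [
    ("What is 2 + 3?", "2 + 3 = 5.\nThe answer is 5."),
    ("A box holds 4 rows of 6 eggs and 5 break. How many are left?",
     "4 rows of 6 eggs is 4 * 6 = 24 eggs.\n24 - 5 = 19 eggs remain.\nThe answer is 19."),
    ("Sam reads 12 pages a day for 5 days, then 20 more. How many pages in total?",
     "12 * 5 = 60 pages in 5 days.\n60 + 20 = 80 pages.\nThe answer is 80."),
    ("Lea buys 3 pens at $2 each and pays with $10. What is her change?",
     "3 pens at $2 each cost 3 * 2 = $6.\nShe pays with $10.\n10 - 6 = $4 change.\nThe answer is 4."),
]
demos = select_complex_examples(pool, k=2)
print(build_prompt(demos, "A bus has 30 seats and 12 are empty. How many are taken?"))
```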

Progressive-Hint Prompting (PHP)

PHP iteratively refines the model's answers by feeding its previously generated answers back into the prompt as hints, stopping once the answer no longer changes between rounds.
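In Progressive-Hint Prompting the hints are the model's own earlier answers, appended to the question in a form like "(Hint: The answer is near to 20, 24.)", and the loop ends once two consecutive answers agree. A sketch of that loop, with a hypothetical `stub_model` in place of a real LLM and an assumed cap on the number of rounds:

```python
from typing import Callable, List, Optional


def progressive_hint(question: str, ask_model: Callable[[str], str],
                     max_rounds: int = 5) -> Optional[str]:
    hints: List[str] = []
    previous: Optional[str] = None
    for _ in range(max_rounds):
        prompt = question
        if hints:
            # Every earlier answer is fed back into the prompt as a hint.
            prompt += f" (Hint: The answer is near to {', '.join(hints)}.)"
        answer = ask_model(prompt).strip()
        # Stop as soon as two consecutive rounds give the same answer.
        if answer == previous:
            return answer
        hints.append(answer)
        previous = answer
    return previous  # round budget exhausted: fall back to the latest answer


def stub_model(prompt: str) -> str:
    # Hypothetical canned replies: the model corrects its first answer once hinted.
    return "24" if "Hint" in prompt else "20"


print(progressive_hint("A baker makes 4 trays of 6 rolls. How many rolls?", stub_model))
```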

Decomposed Prompting (DecomP)

DecomP breaks down complex tasks into simpler sub-tasks, each handled by a specific prompt or model.
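A sketch of the DecomP idea on a letter-manipulation task of the kind used in the paper: a decomposer produces a plan of sub-task calls, and each call is routed to its own handler. Here the decomposer's plan is hard-coded and the handlers are ordinary Python functions; all names are hypothetical.

```python
from typing import Any, Callable, Dict, List, Tuple


# Sub-task handlers. In DecomP a handler can be a few-shot prompt, another
# model, or a symbolic function; plain Python functions keep this runnable.
def split_words(text: str) -> List[str]:
    return text.split()


def kth_letters(words: List[str], k: int) -> List[str]:
    return [w[k - 1] for w in words]  # k is 1-based


def merge(letters: List[str]) -> str:
    return " ".join(letters)


HANDLERS: Dict[str, Callable[..., Any]] = {
    "split": split_words,
    "kth_letters": kth_letters,
    "merge": merge,
}


def decompose(task: str) -> List[Tuple[str, Dict[str, Any]]]:
    # Hypothetical stand-in for the decomposer prompt. A real decomposer LLM
    # would read `task` and emit this plan; here the plan is hard-coded.
    return [("split", {}), ("kth_letters", {"k": 3}), ("merge", {})]


def run(task: str, text: str) -> Any:
    value: Any = text
    for name, kwargs in decompose(task):
        # Each sub-task's output becomes the next sub-task's input.
        value = HANDLERS[name](value, **kwargs)
    return value


print(run("Take the 3rd letter of each word and join them with spaces.", "Jack Ryan"))
```

Because each handler is swappable, a weak sub-task (here, the letter lookup) could be replaced by a dedicated prompt or a symbolic function without touching the rest of the pipeline.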

Hypotheses-to-Theories (HtT) Prompting

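HtT models the scientific discovery process: in an induction stage, the model proposes rules (hypotheses) while solving training examples and keeps only those verified to lead to correct answers; in a deduction stage, it applies this learned rule library to solve new problems.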
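HtT's induction stage roughly works by collecting the rules a model proposes across training examples and keeping those that occur often and tend to yield correct answers. The sketch below shows only that filtering step over hypothetical model outputs, with illustrative thresholds (`min_count`, `min_accuracy`), plus a deduction prompt built from the surviving rules; generating the rules is left to a real model and is not shown.

```python
from collections import Counter
from typing import List, Tuple

# For each training example: the rules the model proposed while solving it,
# and whether its final answer matched the label (hypothetical data).
Trace = Tuple[List[str], bool]


def induce_rules(traces: List[Trace], min_count: int = 2,
                 min_accuracy: float = 0.5) -> List[str]:
    used: Counter = Counter()
    correct: Counter = Counter()
    for rules, is_correct in traces:
        for rule in set(rules):
            used[rule] += 1
            if is_correct:
                correct[rule] += 1
    # Verification: keep rules that recur and mostly lead to correct answers.
    return sorted(r for r in used
                  if used[r] >= min_count and correct[r] / used[r] >= min_accuracy)


def deduction_prompt(library: List[str], question: str) -> str:
    # Deduction stage: the learned rule library is placed in the prompt.
    rules = "\n".join(f"- {r}" for r in library)
    return f"Use these rules:\n{rules}\n\nQuestion: {question}\nAnswer:"


traces = [
    (["mother's brother is uncle", "father's father is grandfather"], True),
    (["mother's brother is uncle"], True),
    (["father's father is grandfather", "sister's son is cousin"], False),
    (["sister's son is cousin"], False),  # a wrong rule (it should be nephew)
    (["father's father is grandfather"], True),
]
library = induce_rules(traces)
print(deduction_prompt(library, "Who is Ann's mother's brother to Ann?"))
```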

Tool-Enhanced Prompting Techniques

Toolformer

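Toolformer teaches an LLM, through self-supervised fine-tuning, to call external tools such as a calculator, a search engine, or a translation system through simple text-to-text APIs. The model learns which API to call, when, and with what arguments, and incorporates the results into its output, letting it solve problems it otherwise couldn't.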
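In Toolformer itself, decoding pauses when the model emits an API call, the tool runs, and its result is inserted before generation continues. The sketch below only mimics that splicing step on already generated text. The bracket-and-arrow notation follows the style of the paper's examples, while the regex, the `calculate` helper, and the two-decimal rounding are assumptions.

```python
import ast
import operator
import re

# Only basic arithmetic is allowed, so model-written expressions are safe to evaluate.
BINARY_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
              ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculate(expr: str) -> float:
    def ev(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in BINARY_OPS:
            return BINARY_OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -ev(node.operand)
        raise ValueError(f"unsupported expression: {expr!r}")
    return ev(ast.parse(expr, mode="eval").body)


# Hypothetical call syntax: the model writes [Calculator(<expression>)].
CALL = re.compile(r"\[Calculator\((.*?)\)\]")


def execute_calls(text: str) -> str:
    # Rewrite each [Calculator(expr)] as [Calculator(expr) → result], the way
    # Toolformer splices an API result back into the text.
    def run(match) -> str:
        expr = match.group(1)
        return f"[Calculator({expr}) → {round(calculate(expr), 2)}]"
    return CALL.sub(run, text)


print(execute_calls("Out of 1400 participants, 400 [Calculator(400 / 1400)] passed the test."))
```

Restricting evaluation to a whitelist of AST nodes, rather than calling `eval`, keeps model-written input from executing arbitrary code.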

Chameleon

Chameleon uses a central LLM-based controller to generate a program that composes several tools to solve complex reasoning tasks.
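Roughly, Chameleon's controller is an LLM planner that, given descriptions of the available modules, writes a program as a sequence of module names; the modules then run in order, each reading and updating shared state. The sketch below keeps that planner/executor split but uses hypothetical modules that return placeholder strings and a hard-coded planner reply; Chameleon's actual module inventory is not reproduced.

```python
from typing import Callable, Dict, List

Cache = Dict[str, str]


# Hypothetical tool modules; each reads what it needs from a shared cache
# and writes its own result back.
def web_search(cache: Cache) -> None:
    cache["evidence"] = f"(search results for: {cache['question']})"


def solution_generator(cache: Cache) -> None:
    cache["solution"] = f"(reasoning over {cache.get('evidence', 'the question alone')})"


def answer_generator(cache: Cache) -> None:
    cache["answer"] = f"(final answer extracted from {cache['solution']})"


MODULES: Dict[str, Callable[[Cache], None]] = {
    "Web_Search": web_search,
    "Solution_Generator": solution_generator,
    "Answer_Generator": answer_generator,
}


def plan(question: str) -> str:
    # Stand-in for the LLM planner, which would be prompted with the module
    # descriptions and the question and asked to reply with a program.
    return "Web_Search, Solution_Generator, Answer_Generator"


def run_program(question: str) -> Cache:
    program: List[str] = [name.strip() for name in plan(question).split(",")]
    cache: Cache = {"question": question}
    for name in program:
        if name not in MODULES:
            raise ValueError(f"planner chose an unknown module: {name}")
        MODULES[name](cache)  # modules execute in order, sharing one cache
    return cache


print(run_program("Which planet has the most known moons?")["answer"])
```

Validating the planner's output against the module registry before running anything catches a common failure mode: the LLM naming a tool that does not exist.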

GPT4Tools

GPT4Tools finetunes open-source LLMs to use multimodal tools via a self-instruct approach, demonstrating that even non-proprietary models can effectively leverage external tools for improved performance.

Conclusion

The field of prompt engineering for large language models is rapidly evolving, with researchers continually pushing the boundaries of what’s possible. From enhancing reasoning capabilities with techniques like Chain-of-Thought prompting to integrating LLMs with external tools and programs, the latest advances in prompt engineering are unlocking new frontiers in artificial intelligence.
