The Future of Large Language Models: Unleashing the Power of Gemini 1.5 Pro

In the realm of Large Language Models (LLMs), the landscape is evolving rapidly. The latest discussion centers on how Gemini 1.5 Pro, with its 1-million-token context window, will affect LLM application development.

Today, most LLM applications are built on frameworks such as LangChain and LlamaIndex. LangChain stands out for building data-aware and agent-based applications, and it integrates with a wide range of LLM providers. LlamaIndex, on the other hand, focuses on data indexing and retrieval, which makes it a go-to choice for applications that need smart search.

Choosing between LangChain and LlamaIndex comes down to your project’s specific requirements. LangChain excels in versatility and tool integration, which suits complex LLM applications. LlamaIndex’s strength is efficient data search and retrieval, which makes it ideal for projects focused on information exploration.

The Impact of Gemini 1.5 Pro

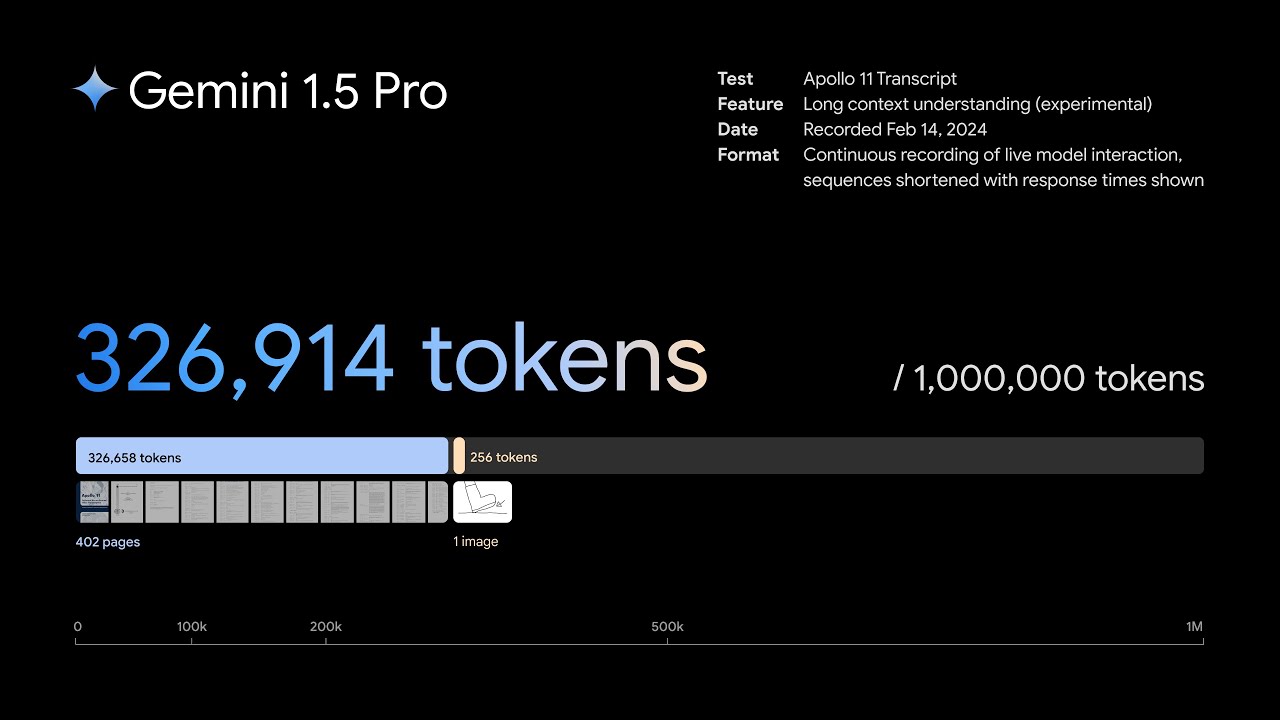

Google’s unveiling of Gemini 1.5 Pro with a 1-million-token context window marks a significant leap in LLM capabilities. Being able to process 1 million tokens in a single prompt makes it possible to handle vast amounts of information, from lengthy transcripts to extensive codebases.

A context this large lets the model work through an entire input at once, supporting sophisticated understanding and reasoning across modalities, including video. The implications are significant for problem-solving tasks that require analyzing large amounts of code.
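
To make "1 million tokens" more concrete, the sketch below estimates whether a body of text fits in such a window. It is illustrative only: it assumes roughly four characters per token, a common rule of thumb for English text rather than Gemini's actual tokenizer, and the names `estimate_tokens`, `fits_in_context`, and the 8,192-token output reserve are hypothetical.

```python
import math

# ASSUMPTION: about 4 characters per token, a rule of thumb for English text.
# Real counts depend on the model's tokenizer and should be measured with it.
CHARS_PER_TOKEN = 4
CONTEXT_WINDOW_TOKENS = 1_000_000  # the 1M-token window discussed above
OUTPUT_RESERVE_TOKENS = 8_192      # hypothetical room left for the model's reply


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length, rounded up."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(texts: list[str]) -> tuple[bool, int]:
    """Return whether the combined texts fit, plus the estimated total."""
    total = sum(estimate_tokens(t) for t in texts)
    return total <= CONTEXT_WINDOW_TOKENS - OUTPUT_RESERVE_TOKENS, total


# Stand-in for a codebase of 2,000 files averaging 1,500 characters each.
files = ["x" * 1_500] * 2_000
fits, total = fits_in_context(files)
print(fits, total)  # prints: True 750000
```

Under these assumptions, roughly 3 million characters of source code would fit in a single prompt with room to spare; a real check should use the provider's own token counter.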

The Vision of Jerry Liu

Jerry Liu, the creator of LlamaIndex, expects large-context LLMs to become the norm as the cost of tokens falls. Long-context LLMs simplify certain parts of the retrieval-augmented generation (RAG) pipeline, but new architectures will need to evolve to address the use cases these advances create.
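
One way to picture how long context simplifies RAG is as a routing decision: if the whole corpus fits, skip retrieval; if not, retrieve. The code below is a minimal sketch under stated assumptions, not LlamaIndex's API or Jerry Liu's proposed architecture. `build_context`, `score`, and `estimate_tokens` are hypothetical names, relevance is a toy word-overlap count standing in for embedding search, and token counts use a rough four-characters-per-token assumption.

```python
import math
from collections import Counter

CHARS_PER_TOKEN = 4  # rule-of-thumb assumption, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length, rounded up."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def score(query: str, chunk: str) -> int:
    """Toy relevance: how often the query's words occur in the chunk.
    A real pipeline would typically use embedding similarity instead."""
    counts = Counter(chunk.lower().split())
    return sum(counts[word] for word in set(query.lower().split()))


def build_context(query: str, chunks: list[str],
                  window: int = 1_000_000, top_k: int = 3) -> str:
    """Choose the text to place in the prompt.

    If the whole corpus fits in the context window, skip retrieval and pass
    everything. Otherwise fall back to a classic RAG step: rank the chunks
    by relevance and keep only the top_k.
    """
    if sum(estimate_tokens(c) for c in chunks) <= window:
        return "\n\n".join(chunks)
    ranked = sorted(chunks, key=lambda c: score(query, c), reverse=True)
    return "\n\n".join(ranked[:top_k])


docs = [
    "gemini handles long context",
    "langchain builds agents",
    "llamaindex indexes data for retrieval",
]
# A tiny window forces the retrieval branch.
print(build_context("long context", docs, window=5, top_k=1))
# prints: gemini handles long context
```

In this sketch the retrieval step disappears entirely whenever the corpus fits, which is the simplification long context offers; once the data outgrows the window, chunking and ranking are still needed.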

Conclusion

The arrival of Gemini 1.5 Pro opens a new era of possibilities in LLM application development. With greater processing capability and extended context windows, LLMs are poised to transform how we interact with and extract insights from vast troves of data.