Unleashing the Power of Fact-Checking with Google DeepMind’s SAFE

In an age where misinformation spreads faster than the truth, the responsibility for verifying accuracy has fallen increasingly on technology. Enter Google DeepMind's new innovation: the Search-Augmented Factuality Evaluator, or SAFE. This cutting-edge AI fact-checker uses a large language model (LLM) to break a long-form response into individual facts and check each one against Google Search results. Its design mirrors the critical thinking many of us exercise daily when confronted with dubious facts: look it up, then weigh what the sources say.

The future of factual precision is here.

BACKING UP CLAIMS WITH DATA

What sets SAFE apart is how closely it tracks human judgment. In DeepMind's evaluation, SAFE's ratings agreed with those of crowdsourced human annotators 72% of the time. More impressively, in a random sample of the cases where the two disagreed, SAFE's rating turned out to be the correct one 76% of the time. These results raise an urgent question: how can we use this technology to combat the rampant misinformation that threatens public discourse?

Based on these tests, DeepMind argues that LLM agents can achieve superhuman rating performance in fact-checking tasks, while being more than 20 times cheaper than human annotators. That level of rigor rivals the human fact-checking that academia and journalism have long relied on. This breakthrough is not merely an upgrade in utility; it is potentially a paradigm shift in how we validate information. Imagine reading news articles or social media posts with an instant technological safeguard against the fallacies that have long plagued our digital age.

OPEN SOURCE FOR COLLECTIVE PROGRESS

Emphasizing transparency, DeepMind has generously made the SAFE code open-source on GitHub, inviting developers and researchers to utilize its capabilities in diverse applications. This communal approach could catalyze further advancements in AI fact-checking, inspiring others in the tech community to join the fight against misinformation.

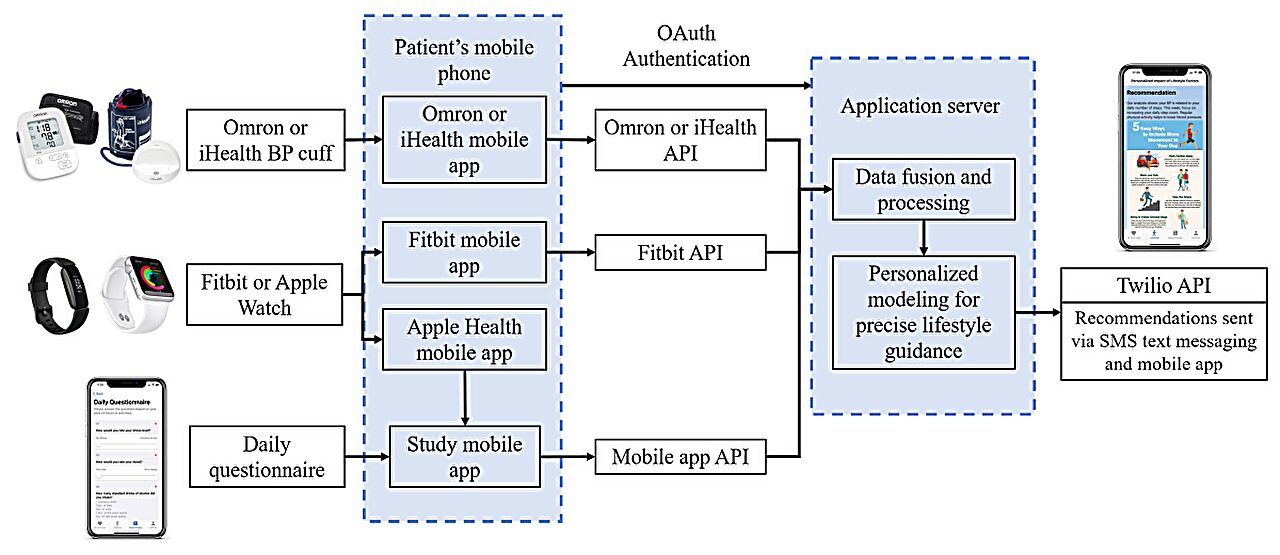

Looking at how SAFE works, I am struck by its multi-step reasoning process. SAFE first uses the LLM to split a lengthy response into individual facts, rewrites each one so it is self-contained, and checks that it is relevant to the original question. For each remaining fact, it then writes Google Search queries and reasons over the results to decide whether the fact is supported. This interplay between AI reasoning and internet data captures the central challenge of the modern information ecosystem.
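To make that pipeline concrete, here is a deliberately simplified Python sketch of the split, search, and rate loop. It is not DeepMind's code: every name here (`TOY_INDEX`, `split_into_facts`, `search`, `rate_fact`, `evaluate`) is hypothetical. A naive sentence splitter stands in for the LLM's fact extraction, a tiny in-memory list of snippets stands in for Google Search, and a word-overlap check stands in for the LLM's reasoning. SAFE itself also rewrites and filters facts and can issue several queries per fact, which this sketch skips.

```python
import re
from dataclasses import dataclass

# Hypothetical stand-in for Google Search results: a tiny in-memory "index".
TOY_INDEX = [
    "The Eiffel Tower is located in Paris, France.",
    "The Eiffel Tower was completed in 1889.",
]

# Common words ignored when judging whether a snippet supports a fact.
STOPWORDS = {"the", "is", "was", "in", "a", "an", "of"}


@dataclass
class FactRating:
    fact: str
    evidence: list[str]
    supported: bool


def split_into_facts(response: str) -> list[str]:
    """Naive sentence splitter; SAFE uses an LLM for this step."""
    parts = re.split(r"(?<=[.!?])\s+", response.strip())
    return [p for p in parts if p]


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(query: str, k: int = 2) -> list[str]:
    """Toy retrieval: the k snippets sharing the most words with the query."""
    q = tokens(query)
    ranked = sorted(TOY_INDEX, key=lambda s: len(q & tokens(s)), reverse=True)
    return ranked[:k]


def rate_fact(fact: str, evidence: list[str]) -> bool:
    """Toy judge: supported if one snippet contains every content word of the fact."""
    content = tokens(fact) - STOPWORDS
    return any(content <= tokens(snippet) for snippet in evidence)


def evaluate(response: str) -> list[FactRating]:
    """Split the response, search for each fact, and rate it against the results."""
    ratings = []
    for fact in split_into_facts(response):
        evidence = search(fact)  # SAFE would send LLM-written queries instead
        ratings.append(FactRating(fact, evidence, rate_fact(fact, evidence)))
    return ratings


if __name__ == "__main__":
    answer = "The Eiffel Tower is in Paris. The Eiffel Tower was completed in 1925."
    for r in evaluate(answer):
        print("SUPPORTED" if r.supported else "NOT SUPPORTED", "|", r.fact)
```

Run on the sample answer, the first sentence is marked supported and the second, with its wrong completion year, is not. Rating each fact separately is what lets a long answer that is mostly right, but wrong in one detail, be scored fact by fact rather than pass or fail as a whole.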

Searching for accuracy in a sea of information.

A NEED FOR RELIABLE VERIFICATION TOOLS

When I reflect on my own experience of navigating digital content, the value of such a tool is hard to overstate. I often find myself lost in a rabbit hole of conflicting information, wrestling with competing narratives on topics ranging from climate change to technological advancements. SAFE's potential as a gatekeeper, filtering truth from fabrication, gives me a sense of hope. It could free readers from the uncertainty that pervasive misinformation creates.

The ramifications of introducing rigorous fact-checking AI into everyday platforms are profound. From academic discourse to the news feeds on our social platforms, knowing that reliable verification tools exist could shift our collective approach to consuming information. Perhaps we could all learn to trust again, at least in the technology designed to help us tell lies from truths.

RISKS AND CONSIDERATIONS

However, we must approach this innovation with caution. The digital realm is rife with challenges surrounding the nuances of language and context. While SAFE boasts impressive metrics, the "human touch" in assessing narrative nuance can get lost in translation. And because SAFE judges each fact against what Google Search returns, its verdicts can only be as reliable as the sources those results surface. We must also be wary of unintended consequences. In a world where technology increasingly shapes our decisions, ensuring that we do not over-rely on AI for judgment is crucial. Maintaining a balance between human analysis and machine learning will be essential.

As technology evolves, so too must our frameworks for evaluating the truthfulness of the content we encounter. By embracing groundbreaking technologies such as SAFE, we can stand at the forefront of a movement to keep misinformation at bay and to favor factual integrity backed by data-driven evidence.

The path ahead for responsible AI.

CONCLUSION: STRIVING FOR ACCURACY IN THE DIGITAL AGE

Ultimately, Google DeepMind's SAFE is not just an advancement in AI technology but a crucial step toward healthier digital communication. It can give individuals and institutions alike the confidence to share information responsibly. As we move deeper into an era defined by digital interactions, tools like SAFE could prove essential to our ongoing commitment to accuracy in an information-abundant world. Our advocacy for fact-checking must not wane; with tools like SAFE at our disposal, we can foster an environment where truth prevails.

I urge every reader to stay informed, read critically, and, most importantly, question boldly. With AI leading the way, the future looks clearer, and the pursuit of truth has never felt so achievable.