The Secret Sauce Behind Seamless Interactions: Understanding LLMOps
=================================================================

Large Language Models (LLMs) have revolutionized the way we interact with machines. Their ability to generate human-like text in response to a wide range of prompts has made them an essential tool across many industries. But have you ever wondered how these powerful models deliver fast, reliable responses at scale?

The answer lies in a specialized field called LLMOps. In this article, we’ll delve into the world of LLMOps and explore its role in ensuring seamless user experiences.

The Importance of LLMOps

Imagine having a conversation with a friend. You expect a smooth exchange, with each response building upon the previous one. This is what users expect when interacting with LLMs. However, achieving this level of conversational flow requires behind-the-scenes work to keep LLMs running efficiently and reliably.

LLMOps, short for Large Language Model Operations, is the secret sauce that makes this possible. It builds on the familiar practice of MLOps (machine learning operations), adapting it to the unique challenges posed by LLMs.

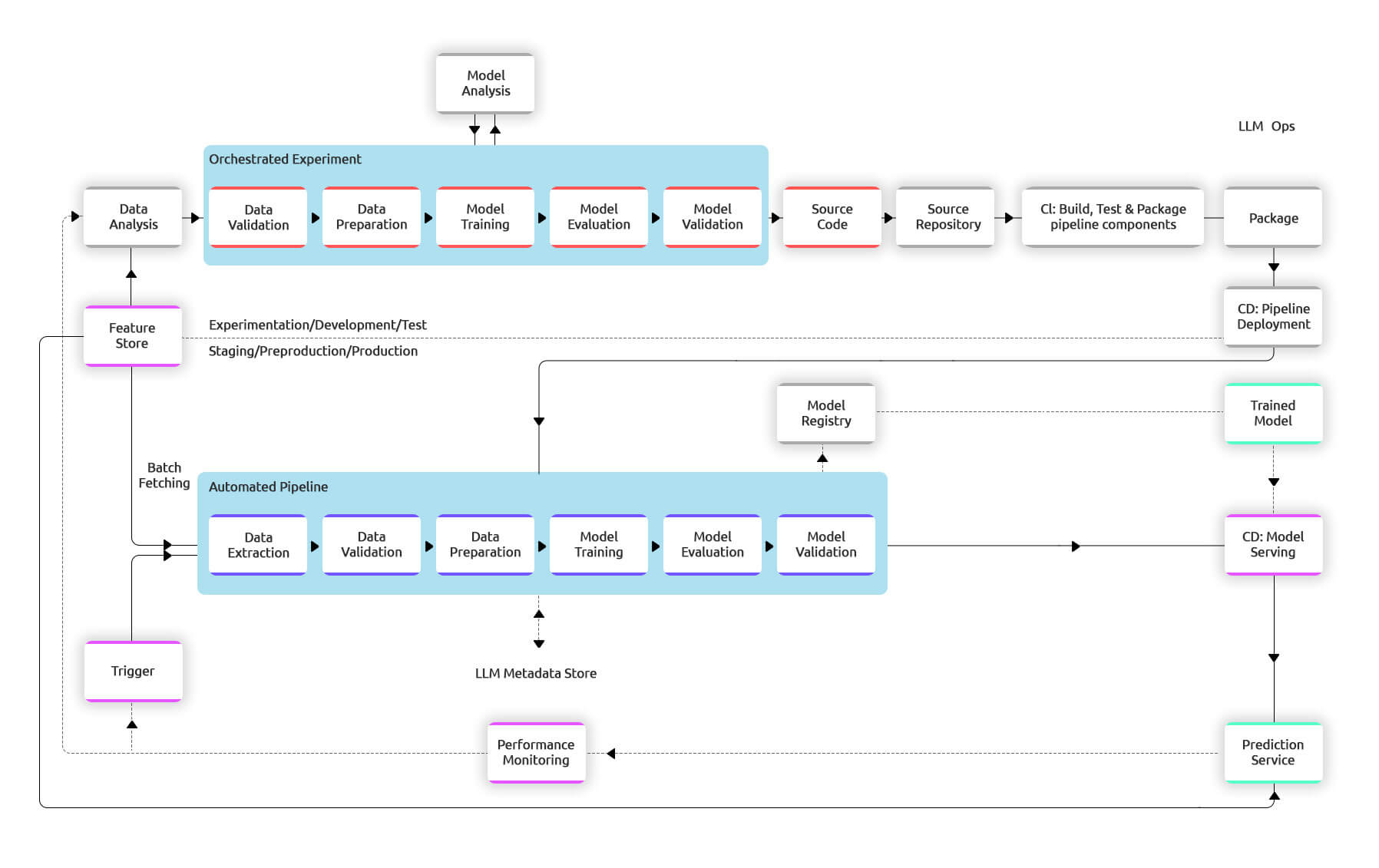

The LLMOps workflow ensures seamless interactions between users and LLMs.

The LLMOps Workflow: Understanding the Magic

The LLMOps workflow involves several steps that prepare the prompt for the LLM and turn the model's output into a useful response. These steps include:

- Pre-processing: The prompt is cleaned up, formatted consistently, and broken down into smaller pieces (tokens). Each token is mapped to a numerical ID, which the model converts into embeddings (vectors of numbers) that it can process.

- Grounding: The system references past conversations or outside information to ensure the model understands the bigger picture. Important entities mentioned in the prompt are identified to make the response more relevant.

- Safety Check: The system checks the prompt for sensitive information or potentially offensive content so that the request is handled appropriately.

- Post-processing: The model's output tokens are decoded back into human-readable text, which is then polished for grammar, style, and clarity.
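To make these four stages more concrete, here is a minimal Python sketch built on heavy simplifying assumptions: a toy word-level tokenizer stands in for a real subword tokenizer, a small dictionary stands in for retrieved outside knowledge, the safety check is just a word list plus an email regex, and `model` is any callable that maps token IDs to token IDs. All names (`preprocess`, `ground`, `safety_check`, `postprocess`, `handle_request`, `BLOCKED_WORDS`) are hypothetical and not taken from any particular LLMOps tool. The embedding step is omitted because models compute embeddings internally from token IDs.

```python
import re
from typing import Callable

# Hypothetical safety rules: a production system would use a moderation
# model and a dedicated PII detector instead of a word list and one regex.
BLOCKED_WORDS = {"idiot"}
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.\w+")


def preprocess(prompt: str, vocab: dict[str, int]) -> list[int]:
    """Clean the prompt, split it into tokens, and map each token to an ID."""
    cleaned = " ".join(prompt.split())            # collapse stray whitespace
    tokens = re.findall(r"\w+|[^\w\s]", cleaned)   # toy word/punctuation tokenizer
    # Unseen tokens receive the next free ID; known tokens reuse theirs.
    return [vocab.setdefault(t, len(vocab)) for t in tokens]


def ground(ids: list[int], history: list[str], knowledge: dict[str, str],
           vocab: dict[str, int]) -> list[int]:
    """Prepend earlier turns plus facts about entities named in the prompt."""
    id_set = set(ids)
    mentioned = {tok for tok, i in vocab.items() if i in id_set}
    # Naive entity detection: a knowledge-base key (a single word here) that
    # appears as a prompt token counts as a mentioned entity.
    facts = [fact for entity, fact in knowledge.items() if entity in mentioned]
    return preprocess(" ".join(history + facts), vocab) + ids


def safety_check(prompt: str) -> None:
    """Reject prompts containing a blocked word or an email address."""
    words = set(re.findall(r"\w+", prompt.lower()))
    if words & BLOCKED_WORDS:
        raise ValueError("prompt contains potentially offensive content")
    if EMAIL_RE.search(prompt):
        raise ValueError("prompt contains sensitive information (an email address)")


def postprocess(ids: list[int], vocab: dict[str, int]) -> str:
    """Decode token IDs back into text and tidy spacing and capitalization."""
    id_to_token = {i: tok for tok, i in vocab.items()}
    text = " ".join(id_to_token[i] for i in ids)
    text = re.sub(r" ([^\w\s])", r"\1", text)     # no space before punctuation
    return text[:1].upper() + text[1:]


def handle_request(prompt: str, history: list[str], knowledge: dict[str, str],
                   model: Callable[[list[int]], list[int]],
                   vocab: dict[str, int]) -> str:
    """Run the four workflow stages around a single model call."""
    ids = preprocess(prompt, vocab)
    ids = ground(ids, history, knowledge, vocab)
    safety_check(prompt)                          # checked on the raw prompt text
    return postprocess(model(ids), vocab)


if __name__ == "__main__":
    vocab: dict[str, int] = {}
    knowledge = {"France": "France is in Europe."}

    def echo_model(ids: list[int]) -> list[int]:
        return ids  # stand-in for a real LLM: returns its input unchanged

    print(handle_request("  What is the capital of   France? ", [], knowledge,
                         echo_model, vocab))
    # prints: France is in Europe. What is the capital of France?
```

The echo model makes the round trip visible: grounding prepends the fact about France because "France" appears in the prompt, and post-processing reattaches punctuation while decoding. In practice you would likely run the safety check before spending compute on the other stages; the sketch simply follows the order listed above.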

Key Components of a Robust LLMOps Infrastructure

A well-designed LLMOps setup involves several essential building blocks, including:

- Choosing the Right LLM: Selecting the LLM that best aligns with specific needs and resources.

- Fine-Tuning for Specificity: Fine-tuning existing models or training custom models for unique use cases.

- Prompt Engineering: Crafting effective prompts that guide the LLM toward the desired outcome.

- Deployment and Monitoring: Streamlining deployment and continuously monitoring the LLM’s performance.

- Security Safeguards: Implementing robust measures to protect sensitive information.
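Of these building blocks, deployment and monitoring lends itself most readily to a short illustration. The sketch below assumes the deployed model is reachable through some function that takes a prompt string and returns a response string; `generate` is a hypothetical placeholder, not a real client API, and `MonitoredModel` is likewise an illustrative name. The wrapper times every call with `time.perf_counter`, counts failures, and summarizes mean and 95th-percentile latency using Python's standard `statistics` module.

```python
import statistics
import time
from typing import Callable


class MonitoredModel:
    """Wrap a model call and record latency and failures for every request.

    `generate` is a hypothetical stand-in for whatever function actually
    calls the deployed LLM.
    """

    def __init__(self, generate: Callable[[str], str]) -> None:
        self.generate = generate
        self.latencies_ms: list[float] = []
        self.failures = 0

    def __call__(self, prompt: str) -> str:
        start = time.perf_counter()
        try:
            return self.generate(prompt)
        except Exception:
            self.failures += 1
            raise
        finally:
            # Runs on success and failure alike, so every call is timed.
            self.latencies_ms.append((time.perf_counter() - start) * 1000)

    def report(self) -> dict[str, float]:
        """Summarize the calls so far; p95 is reported once there are 2+ samples."""
        n = len(self.latencies_ms)
        summary = {
            "requests": n,
            "failure_rate": self.failures / n if n else 0.0,
            "mean_ms": statistics.fmean(self.latencies_ms) if n else 0.0,
        }
        if n >= 2:
            # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
            summary["p95_ms"] = statistics.quantiles(self.latencies_ms, n=20)[-1]
        return summary


if __name__ == "__main__":
    def fake_generate(prompt: str) -> str:
        # Hypothetical stand-in for a real LLM client call.
        if not prompt:
            raise ValueError("empty prompt")
        return prompt.upper()

    model = MonitoredModel(fake_generate)
    for p in ["hello", "world", ""]:
        try:
            model(p)
        except ValueError:
            pass
    print(model.report())  # latency figures vary from run to run
```

A production setup would usually export these numbers to a metrics system rather than keep them in memory, and would track response quality as well as speed; the sketch only shows the basic pattern of wrapping every model call.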

The Future of LLMs is Powered by LLMOps

As LLM technology continues to evolve, LLMOps will play a critical role in how these models are adapted, deployed, and maintained. By ensuring efficient, reliable, and secure operation, LLMOps will pave the way for even more innovative and transformative LLM applications across various industries.

“The future of LLMs is powered by LLMOps.” - [Author’s Name]
