The Quest for Local AI Innovation

The AI landscape is evolving rapidly, and local AI is gaining momentum. In recent weeks, I’ve been exploring how to build AI-powered applications that run on local LLMs. That work led me to build a fully local (nano) DiagramGPT using Llama 3 8B, and to learn about inline function calling along the way.

Local AI innovation is the future

Llama 3 + llama.cpp: The Local AI Heaven

The combination of Llama 3 and llama.cpp is a game-changer for local AI development. llama.cpp runs Llama 3 models on your own hardware, so developers can build applications that talk to a local LLM instead of a hosted API. That makes AI development more accessible, and it keeps the whole pipeline on one machine.

The future of AI development is local

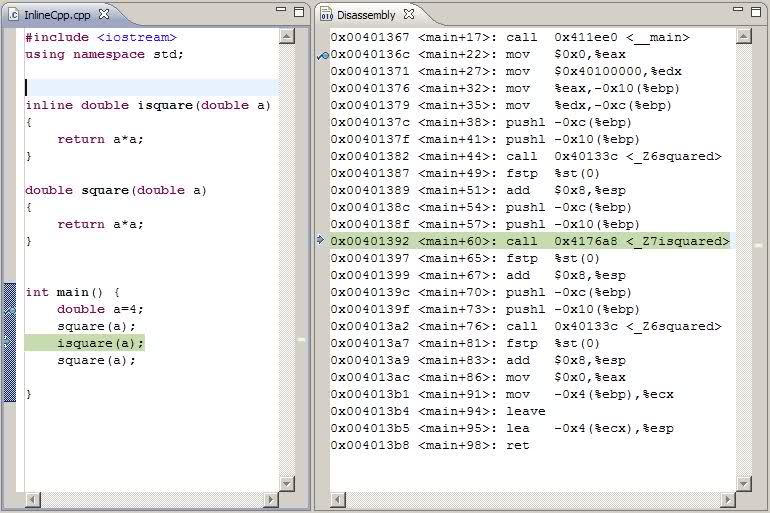

Inline Function Calling: The Key to Efficiency

One of the most useful things I picked up while working with Llama 3 and llama.cpp is inline function calling: the model writes function calls directly into its output, and the application parses and runs them as part of handling the response. Handling calls this way can reduce the number of round trips to the model, which helps with latency on local hardware. As local AI matures, I expect inline function calling to play a growing role in how these applications are built.
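To make the idea concrete, here is a minimal sketch of how an application might handle function calls that a model embeds in its output. Everything specific here is an assumption for illustration: the `<call>…</call>` tag format, the JSON payload shape, and the `add_node` / `add_edge` helpers are hypothetical and are not taken from Llama 3, llama.cpp, or the DiagramGPT project. The model output is a hard-coded string so the sketch runs without a model.

```python
import json
import re

# Assumed (hypothetical) inline syntax: the model wraps each call as
# <call>{"name": "...", "args": {...}}</call> inside its normal text output.
CALL_PATTERN = re.compile(r"<call>(.*?)</call>", re.DOTALL)


def add_node(label: str) -> str:
    """Hypothetical diagram helper: render a node."""
    return f"[{label}]"


def add_edge(src: str, dst: str) -> str:
    """Hypothetical diagram helper: render a directed edge."""
    return f"{src} --> {dst}"


# Registry of functions the model is allowed to call.
TOOLS = {"add_node": add_node, "add_edge": add_edge}


def run_inline_calls(model_output: str) -> str:
    """Replace each inline call in the text with the result of running it.

    Calls that are malformed or name an unknown function are left untouched.
    """

    def dispatch(match: re.Match) -> str:
        try:
            call = json.loads(match.group(1))
            func = TOOLS[call["name"]]
            return str(func(**call["args"]))
        except (json.JSONDecodeError, KeyError, TypeError):
            return match.group(0)

    return CALL_PATTERN.sub(dispatch, model_output)


if __name__ == "__main__":
    sample = 'Edge: <call>{"name": "add_edge", "args": {"src": "A", "dst": "B"}}</call>'
    print(run_inline_calls(sample))  # prints: Edge: A --> B
```

The registry keeps the model from invoking arbitrary code: only names listed in `TOOLS` are executed, and anything else passes through as plain text.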

Optimizing code for better performance

Conclusion

The quest for local AI innovation is an exciting journey with real potential for the future of AI development. With Llama 3 and llama.cpp, developers can build applications that run entirely against a local LLM. As we keep pushing the boundaries of what is possible, one thing is clear: local AI innovation is the future.

The future is local
