How AI Large Language Models Work, Explained Without Math

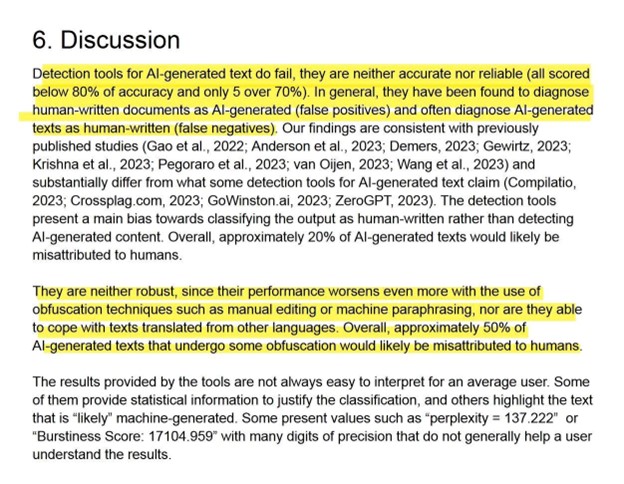

Large Language Models (LLMs) are everywhere, but have you ever wondered how they work under the hood? In this article, we’ll delve into the inner workings of LLMs, explaining their capabilities and limitations in simple terms.

At their heart, LLMs are prediction machines that work on tokens (small groups of letters and punctuation) and are capable of great feats of human-seeming communication. Most technically minded people understand that LLMs have no idea what they are saying, and this peek at their inner workings will make that abundantly clear.
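To make the idea of tokens concrete, here is a minimal sketch of turning text into tokens and then into the integer IDs a model actually works with. It is a simplification: real LLMs typically use learned subword tokenizers, whereas the hypothetical `toy_tokenize` and `build_vocab` helpers below simply split on words and punctuation.

```python
import re

def toy_tokenize(text):
    """Split text into word and punctuation tokens.

    Assumption: a stand-in for the subword tokenizers real LLMs use.
    """
    return re.findall(r"\w+|[^\w\s]", text)

def build_vocab(tokens):
    """Give each distinct token an integer ID, in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

tokens = toy_tokenize("The cat sat. The cat slept!")
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]

print(tokens)  # ['The', 'cat', 'sat', '.', 'The', 'cat', 'slept', '!']
print(ids)     # [0, 1, 2, 3, 0, 1, 4, 5]
```

Note that the repeated "The" and "cat" map to the same IDs both times. From the model's point of view, the text is just this sequence of numbers.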

The architecture of a Large Language Model

LLMs are trained on vast amounts of text data, which enables them to recognize patterns and predict the next token in a sequence. During training, the model guesses the next token, compares its guess with the token that actually follows in the text, and is adjusted to do slightly better next time. This process is repeated millions of times, gradually improving the predictions. The result is a machine that can generate human-like text, but without truly understanding the meaning behind the words.
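The "predict the next token" idea can be sketched with a toy model that only counts which token follows which. This is not how a real LLM is built (those use large neural networks that consider far more context), and the function names `train_bigram`, `predict_next`, and `generate` are made up for illustration. It does show prediction without understanding: the program produces plausible sequences from nothing but observed patterns.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        counts[current][nxt] += 1
    return counts

def predict_next(counts, token):
    """Return the most frequently seen follower of `token`, or None if unseen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(counts, start, length):
    """Repeatedly append the predicted next token, up to `length` new tokens."""
    out = [start]
    for _ in range(length):
        nxt = predict_next(counts, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out

# Toy "training data", already split into tokens.
corpus = "the cat sat on the mat and the cat sat down".split()
model = train_bigram(corpus)

print(predict_next(model, "the"))  # cat
print(generate(model, "on", 3))    # ['on', 'the', 'cat', 'sat']
```

The model answers "cat" after "the" only because that pair appeared most often in its training text, which is the same kind of pattern-matching an LLM does at a vastly larger scale.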

Despite their limitations, LLMs have many practical applications, from language translation to text summarization. They can even be used to generate creative content, such as stories and poems.

An example of AI-generated text

If you’re interested in learning more about the technical details of LLMs, be sure to check out our coverage of training a GPT-2 LLM using pure C code. And for a visual guide to how image-generating AIs work, take a look at this illustrated guide.

In conclusion, Large Language Models are powerful tools with many potential applications. While they do not truly understand the meaning behind the words, they can generate human-like text that is both impressive and unsettling.

The future of AI language models
