The Hidden Dangers of Large Language Models

The AI Safety Institute has sounded the alarm: popular large language models (LLMs) are insecure, and their built-in safeguards are ineffective. This warning comes as AI becomes increasingly pervasive in enterprise tech stacks, amplifying cyber security anxieties.

AI security concerns are on the rise

The UK-based institute’s research found that the LLMs it tested, which it did not name, remain highly vulnerable to basic jailbreaks, and that some will produce harmful outputs even without dedicated attempts to circumvent their safeguards. This is a troubling scenario for security-conscious leaders: a lack of internal safeguards, coupled with uncertainty around vendor-embedded safety measures, leaves companies exposed.

Cyber security leaders are concerned about AI safety

Nearly all cyber security leaders (93%) say their companies have deployed generative AI, but more than one-third of those using the technology have not put safeguards in place, according to a Splunk survey. This lack of preparedness is concerning because AI can amplify cyber issues, from the use of unsanctioned AI products to insecure code bases.

AI safety is a growing concern

Vendors have responded to growing concerns by adding features and updating policies. AWS added guardrails to its Bedrock platform, while Microsoft integrated Azure AI Content Safety across its products. Google introduced its own secure AI framework, SAIF. However, vendor efforts alone are not enough to protect enterprises.

CIOs are being challenged to bring cyber pros into the conversation

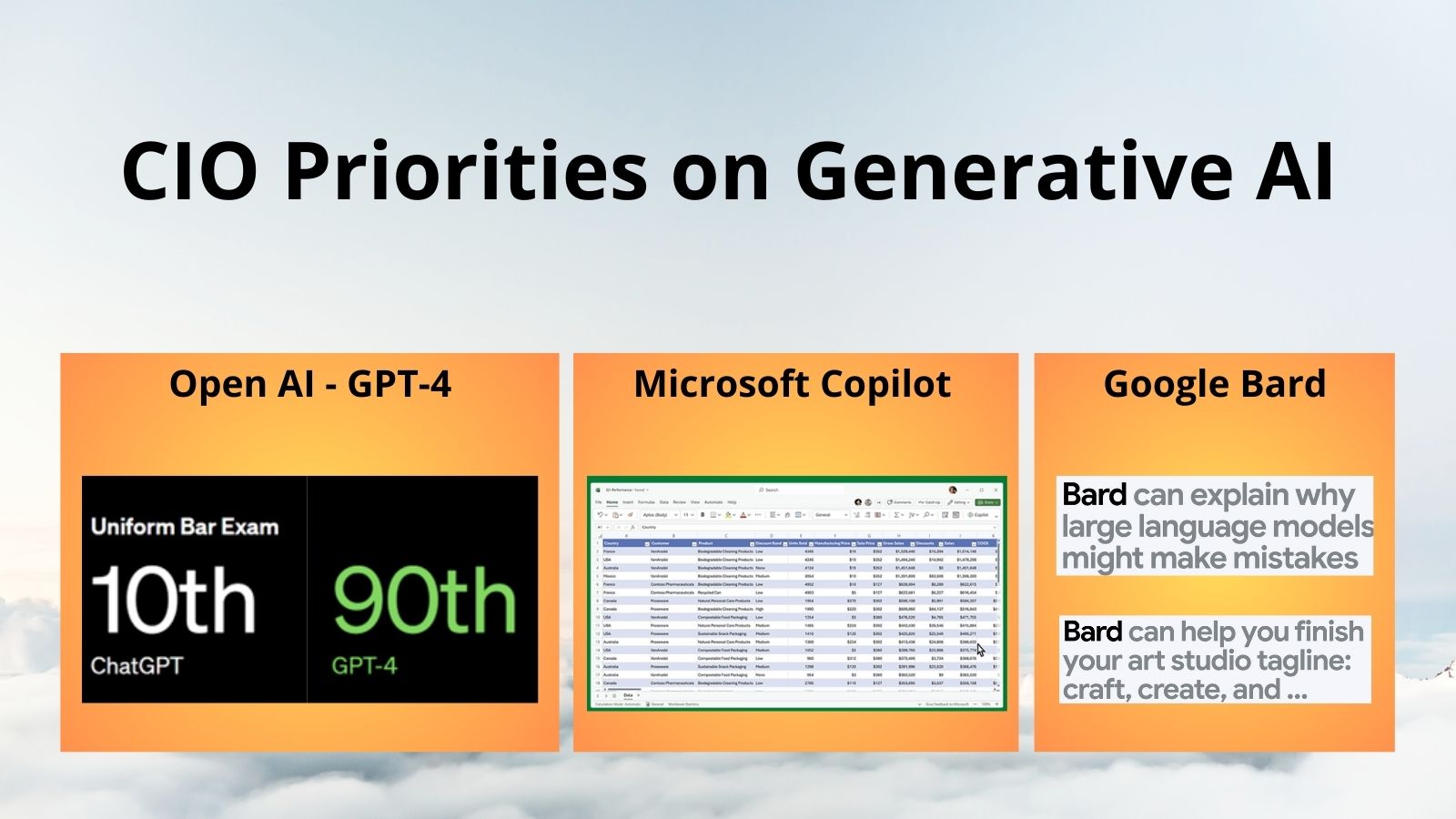

CIOs, tasked with leading generative AI efforts, are being challenged to bring cyber professionals into the conversation to help procure models and navigate use cases. But even with added expertise, it is difficult to craft AI plans nimble enough to respond to research developments and regulatory requirements.

Government-led commitments to AI safety are proliferating

Government initiatives, such as the White House-led AI safety initiative, have brought AI model providers together to take part in product testing and other safety measures. The National Institute of Standards and Technology’s US AI Safety Institute has also created an AI safety alliance, which more than 200 organizations, including Google, Microsoft, Nvidia, and OpenAI, have joined.

The future of AI safety is uncertain

As AI continues to evolve, one thing is clear: more expertise is needed to deal with new security challenges. CIOs, cyber security leaders, and vendors must work together to ensure the safe adoption of AI technology.