AI Evolution: Beyond the Surface

As I reflect on my interactions with AI chatbots like ChatGPT and Gemini, I often wonder about the magic behind their ability to provide such accurate and human-like responses. But have you ever stopped to think about what a large language model really is and how it can generate such excellent responses?

Defining Large Language Models

Large language models (LLMs) are cutting-edge AI models that process information and respond in ways that feel natural. They use a specific kind of neural network architecture called a transformer to process data and produce output that mimics human language. What truly sets them apart is their scale: these models are trained on massive datasets containing text and code, and they have billions or even trillions of parameters, allowing them to interpret and respond to language with remarkable sophistication. This makes them excellent at answering questions, summarizing text, and generating content in different formats.

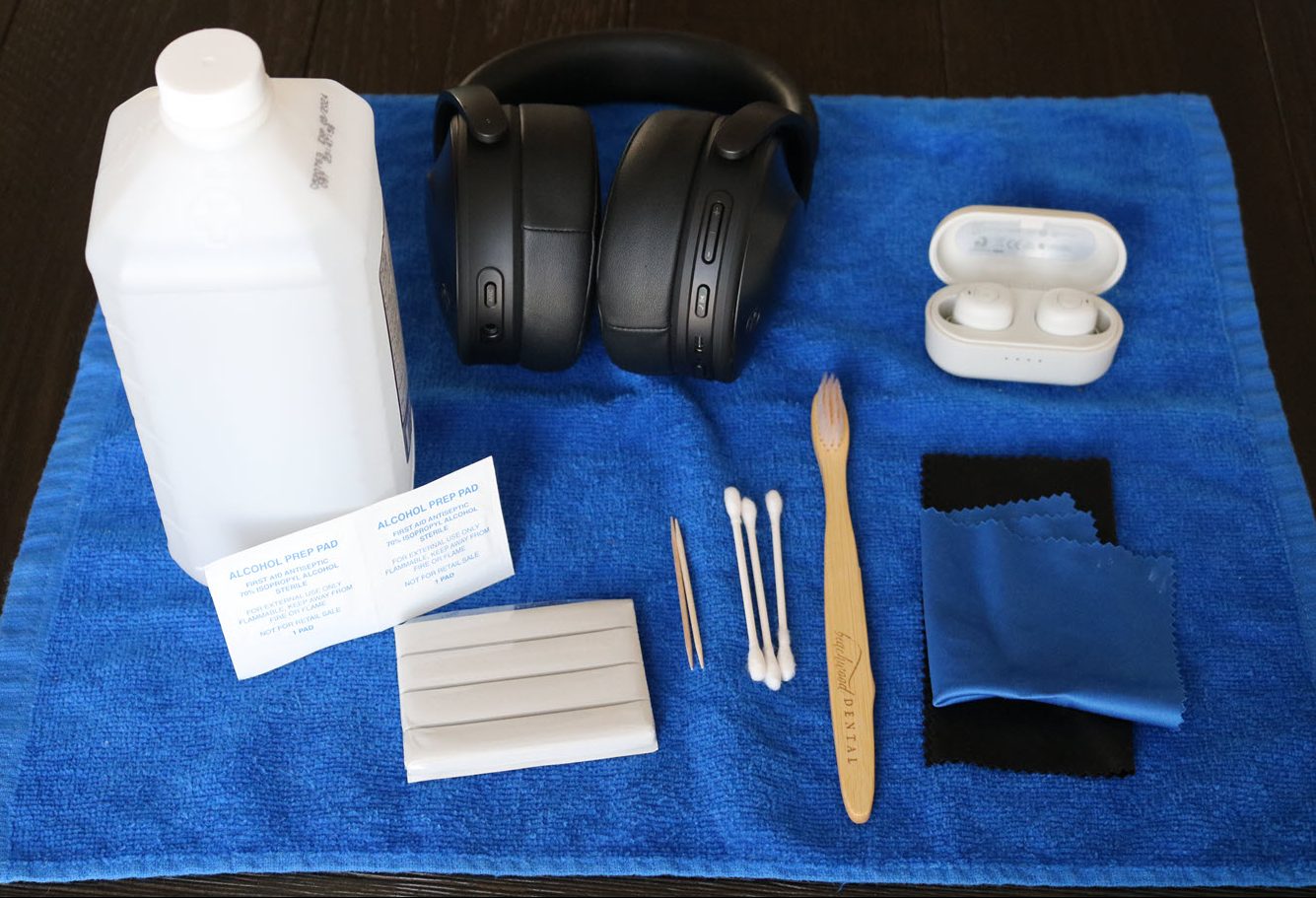

A neural network architecture

Comparing Large Language Models to Traditional Neural Networks

Unlike earlier sequence models, such as recurrent neural networks (RNNs), which process text one step at a time, transformer-based models like LLMs can analyze entire sentences or passages at once. This is like reading a whole paragraph and understanding the connections between all the ideas instead of reading each word one by one.

Transformer models use a technique called “self-attention” to focus on the most critical parts of the input, a method akin to a conductor of a large orchestra listening to all the instruments simultaneously, yet focusing on specific sections to create a harmonious piece of music. In language, this translates to the transformer understanding how words relate to each other and contribute to the overall meaning, even if they’re far apart in the sentence.
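To make the idea concrete, here is a minimal sketch of self-attention in plain Python. It is a simplification under stated assumptions: the word vectors are made-up numbers, and each vector serves as its own query, key, and value, whereas real transformers first pass the vectors through learned weight matrices. The function names `softmax` and `self_attention` are chosen for illustration only.

```python
import math


def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Simplified self-attention over a list of word vectors.

    Assumption: each vector acts as its own query, key, and value.
    Real transformers apply learned projection matrices first.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this word against every word in the sentence
        # using a scaled dot product.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Blend all word vectors, weighted by how much attention each gets.
        blended = [
            sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights


# Toy 2-dimensional "embeddings" (made-up numbers, not from a real model).
words = ["the", "cat", "sat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.9]]

outputs, weights = self_attention(vectors)
for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how much one word "listens" to every word in the sentence. Because the vectors for "cat" and "sat" point in similar directions, those two words give each other more weight than they give "the", no matter where they sit in the sentence.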

The History of AI

Transformer models aren’t a brand-new invention. Their roots go back to the early 1990s, with the concept of a “fast weight controller” that used similar attention mechanisms. However, it wasn’t until 2017 that the now-famous “Attention Is All You Need” research paper, written by eight scientists working at Google, introduced the transformer architecture we know today. This sparked a revolution in natural language processing, with researchers building upon the foundation to create even more powerful and versatile models like the ones powering ChatGPT and Gemini.

The Increasing Popularity of AI: OpenAI’s ChatGPT

LLMs entered the mainstream, and their popularity skyrocketed, with the release of OpenAI’s ChatGPT in November 2022. Many LLMs existed before ChatGPT, however. Early LLMs and chatbots were capable of impressive feats: they could generate fluent text, translate languages, and answer questions informatively. However, they often faced limitations such as misinterpreting context, generating unnatural responses, and responding in ways that weren’t in line with the user’s needs.

What made ChatGPT stand out compared to previous GPT versions and early LLMs was its use of Reinforcement Learning from Human Feedback (RLHF). In RLHF, humans rate or rank the model’s responses, and that feedback is used to train the model to favor responses that are helpful, relevant, and natural-sounding. Many subsequent LLMs now incorporate it.

Ethical Considerations of Generative AI

There have also been many controversies regarding the ethics of generative AI (of which LLMs are a part), especially concerning the use of copyrighted material for training purposes. The issue has been especially visible in recent disputes over AI-generated art and music. LLMs themselves can also raise copyright concerns. Their ability to generate human-quality text raises questions about originality and potential copyright infringement, particularly if they produce content that is derivative of copyrighted works.

However, ongoing discussions and research are exploring potential solutions. These include developing more precise guidelines for the fair use of copyrighted material in LLM training and investigating mechanisms for attribution or compensation when AI-generated content draws heavily on existing works.

AI and the Future of Work

The rise of LLMs has led to much discussion about the future of work. While some fear AI will automate many jobs currently performed by humans, history suggests that technological advancements often create new opportunities alongside disruption.

New job roles will emerge to develop, maintain, and interact with these AI systems. Just as LLM development called for specialists in natural language processing and machine learning engineering, new roles like data curator and prompt engineer are appearing. This trend is likely to continue as LLMs become even more sophisticated.

The Next Chapter in AI: Overcoming LLM Limitations

LLMs have continued to advance rapidly in recent years. Newer versions like GPT-4 offer stronger capabilities than their predecessors, pushing the boundaries of what these models can achieve. Frameworks like LangChain explore new ways to build applications on top of LLMs, focusing on modularity and specialization.

Additionally, advancements like Retrieval-Augmented Generation (RAG) allow models to access and process external information during text generation, leading to more informative and well-rounded responses. This rapid development promises a future where LLMs become even more helpful, nuanced, and adaptable in their interactions with the world.
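As a rough illustration of the retrieval step, the sketch below picks the most relevant passage from a tiny document list and places it in the prompt before the question. Everything here is an assumption made for illustration: the helper names (`tokenize`, `retrieve`, `build_prompt`), the sample documents, and the word-overlap scoring, which stands in for the embedding-based search that real RAG systems typically use. No actual model is called; the resulting prompt is what would be sent to one.

```python
def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    for mark in "?.,":
        text = text.replace(mark, " ")
    return set(text.lower().split())


def retrieve(question, documents, top_k=1):
    """Return the top_k documents sharing the most words with the question.

    Assumption: simple word overlap stands in for a real similarity search.
    """
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, documents, top_k=1):
    """Combine the retrieved context and the question into one prompt."""
    context = "\n".join(retrieve(question, documents, top_k))
    return (
        "Use the context to answer the question.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Hypothetical knowledge base; a real system would search far more text.
documents = [
    "ChatGPT was released by OpenAI in November 2022.",
    "The transformer architecture was introduced in 2017.",
    "Self-attention relates words that are far apart in a sentence.",
]

question = "When was ChatGPT released?"
prompt = build_prompt(question, documents)
print(prompt)
```

The point of the design is that the model answers from text supplied at request time rather than relying only on what it memorized during training, which is why RAG can help with up-to-date or specialized information.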

Yet, these models still face challenges, such as hallucinations (i.e., generating seemingly convincing but factually incorrect information) and bias (reflecting the biases in their training data). Over time, with continued research and development, we can expect LLMs to overcome these challenges and perform even better, shaping a future filled with even more powerful and versatile language models.
