The Dark Side of AI: How Hackers Are Stealing LLM Credentials

As I explore the world of artificial intelligence, I’m constantly amazed by the innovative applications of large language models (LLMs). A recent discovery, however, has left me concerned about the security of these powerful tools. Researchers at the Sysdig Threat Research Team have uncovered a disturbing trend: hackers are stealing login credentials for LLM services, selling them on the dark web, and making a hefty profit.

The Rise of LLMjacking

The campaign, dubbed LLMjacking, exploits a vulnerability in the Laravel framework (CVE-2021-3129) to steal Amazon Web Services (AWS) credentials. The attackers then use these credentials to access LLMs hosted by cloud providers, including Anthropic’s Claude (v2/v3) models.

New Methods of Abuse

The researchers discovered a Python script that the attackers used to generate requests that invoke the models. The script checks credentials for ten AI services: AI21 Labs, Anthropic, AWS Bedrock, Azure, ElevenLabs, MakerSuite, Mistral, OpenAI, OpenRouter, and GCP Vertex AI. The attackers don’t run legitimate LLM queries; instead, they do just enough to learn what the credentials can access and what quotas apply to them.
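Probing of this kind tends to leave a trace: many rejected or failed model invocations from a single credential in a short span. Here is a minimal sketch of how a defender might look for that pattern. It assumes audit events have already been parsed into dictionaries, and the field names (`access_key`, `action`, `error`), the threshold, and the sample data are all hypothetical. Real cloud audit logs are shaped differently, and this is not the detection logic Sysdig describes.

```python
from collections import Counter


def flag_probing_keys(events, threshold=3):
    """Return access keys with at least `threshold` failed model invocations.

    `events` is a list of dicts using hypothetical field names
    (`access_key`, `action`, `error`); real audit logs look different.
    """
    failures = Counter(
        event["access_key"]
        for event in events
        if event.get("action") == "InvokeModel" and event.get("error")
    )
    return sorted(key for key, count in failures.items() if count >= threshold)


# Made-up sample data, for illustration only.
sample_events = [
    {"access_key": "KEY_A", "action": "InvokeModel", "error": "ValidationException"},
    {"access_key": "KEY_A", "action": "InvokeModel", "error": "ValidationException"},
    {"access_key": "KEY_A", "action": "InvokeModel", "error": "AccessDeniedException"},
    {"access_key": "KEY_B", "action": "InvokeModel", "error": None},
    {"access_key": "KEY_B", "action": "InvokeModel", "error": "ThrottlingException"},
]

print(flag_probing_keys(sample_events))  # prints ['KEY_A']
```

A count-based rule like this is deliberately crude. In practice you would also want a time window and a per-key baseline, since legitimate applications produce errors too.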

The Cost of LLM Abuse

LLM services can be expensive to use, depending on the model and the number of tokens fed to it. By pushing usage up to the quota limits, attackers can also block the compromised organization from using the models legitimately, disrupting business operations. The bill can be staggering, reaching up to $46,000 a day for LLM use.
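To get a feel for how usage turns into a bill of that size, the arithmetic can be sketched as below. The per-token price here is a made-up placeholder, not a real provider's rate, and actual pricing usually differs between input and output tokens and between models. The function names are my own.

```python
def daily_cost_usd(tokens_per_day, price_per_1k_tokens_usd):
    """Cost of one day of usage at a flat (hypothetical) price per 1,000 tokens."""
    return tokens_per_day / 1000 * price_per_1k_tokens_usd


def tokens_for_budget(budget_usd, price_per_1k_tokens_usd):
    """How many tokens a given spend buys at a flat price per 1,000 tokens."""
    return budget_usd / price_per_1k_tokens_usd * 1000


# Assumed placeholder price; check your provider's pricing page for real rates.
HYPOTHETICAL_PRICE_PER_1K = 0.02

tokens = tokens_for_budget(46_000, HYPOTHETICAL_PRICE_PER_1K)
print(f"About {tokens / 1e9:.1f} billion tokens per day at the assumed price")
```

The point is less the exact number than the shape of the calculation: cost scales linearly with tokens, so an attacker who keeps a stolen key busy around the clock can run up a large bill quickly.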

The cost of LLM abuse can be staggering

A New Era of Cybersecurity Threats

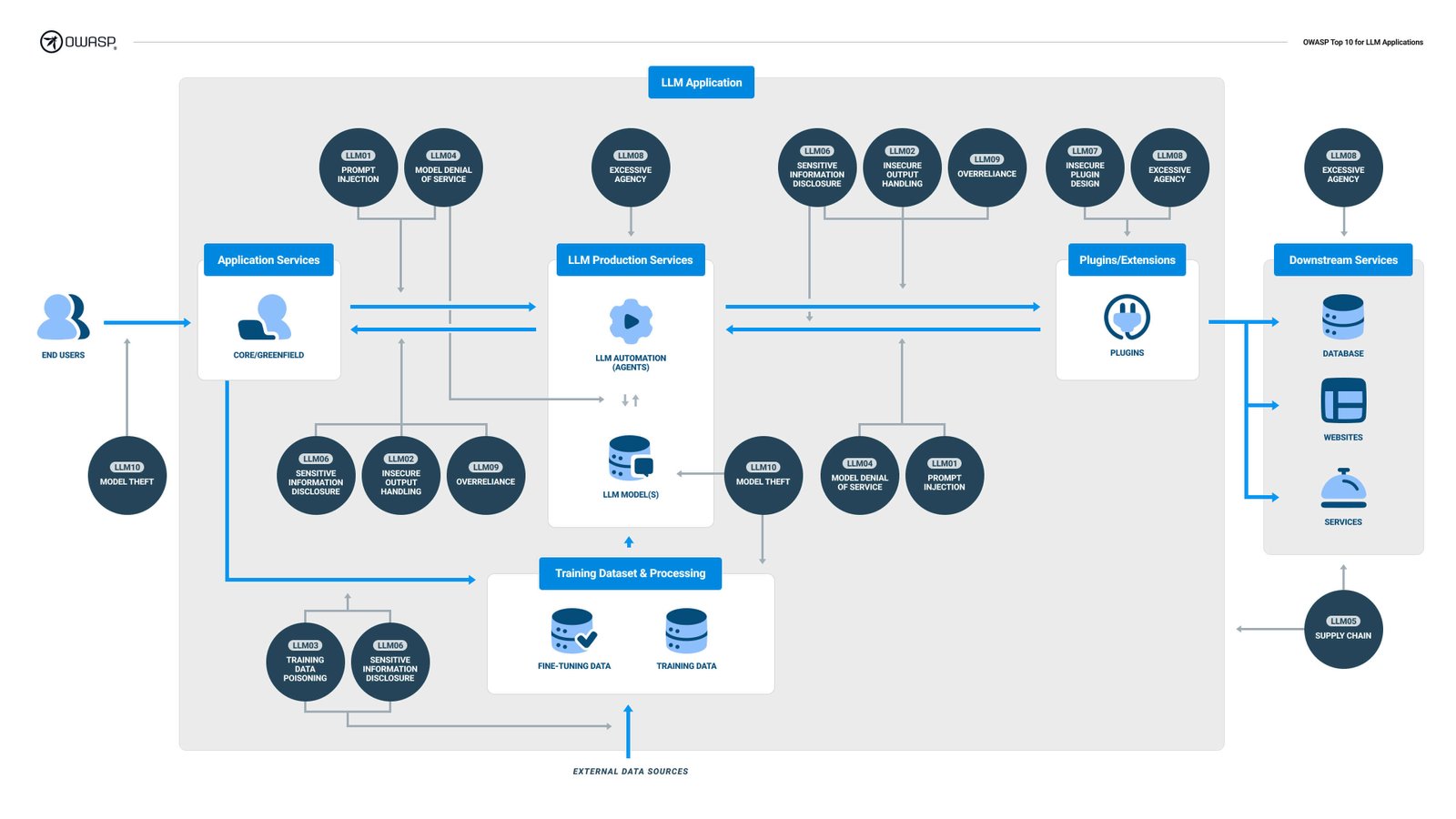

The LLMjacking campaign is evidence that hackers are finding new ways to weaponize LLMs, beyond prompt injections and model poisoning. By monetizing access to LLMs, hackers can reap significant financial benefits while leaving victims with a hefty bill.

The era of LLM-powered cybersecurity threats has begun

Conclusion

As we continue to push the boundaries of AI innovation, it’s essential to acknowledge the darker side of LLMs. The LLMjacking campaign serves as a stark reminder of the importance of robust cybersecurity measures to protect these powerful tools. As we move forward, it’s crucial that we prioritize security and develop strategies to combat these emerging threats.

The future of AI security is uncertain
