Large Language Models: The Broken Promise of AI

The allure of artificial intelligence (AI) has captivated the world, with large language models (LLMs) like ChatGPT and Google Bard leading the charge. Beneath the surface, however, these systems have serious problems, from inconsistent reasoning to fabricated information. The question remains: can we trust these models to make rational decisions?

The Inconsistencies of Large Language Models

Recent studies have highlighted inconsistencies in LLMs. When given tests drawn from cognitive psychology, these models behaved irrationally and often gave different responses to the same question. On the Wason task, a classic test of rationality, models like GPT-4 and Google Bard struggled to give correct answers. In some cases they even mistook consonants for vowels, which led to incorrect responses.
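To make the Wason task concrete, here is a minimal Python sketch. It assumes the classic letter-and-number version of the task, which this article does not spell out: each card has a letter on one side and a number on the other, and the rule to check is "if a card shows a vowel, the other side is an even number." The card faces, the function names, and the consistency measure are illustrative assumptions, not taken from the studies themselves.

```python
from collections import Counter

VOWELS = set("AEIOU")


def is_vowel(face):
    """True if the visible face is a single vowel letter."""
    return face.upper() in VOWELS


def is_odd_number(face):
    """True if the visible face is an odd whole number."""
    return face.isdigit() and int(face) % 2 == 1


def cards_to_turn(visible_faces):
    """Cards that could falsify 'vowel implies even number'.

    Only a vowel (its hidden side might be odd) or an odd number
    (its hidden side might be a vowel) can break the rule.
    Consonants and even numbers cannot, so they stay face down.
    """
    return [f for f in visible_faces if is_vowel(f) or is_odd_number(f)]


def consistency(answers):
    """Share of answers that match the most common answer (hypothetical metric)."""
    most_common_count = Counter(answers).most_common(1)[0][1]
    return most_common_count / len(answers)


print(cards_to_turn(["E", "K", "4", "7"]))
print(consistency(["E,7", "E,7", "E,4", "E,7"]))
```

Mistaking a consonant such as K for a vowel would wrongly add it to the list, which is the kind of error described above. The `consistency` helper shows one simple way to put a number on "different responses to the same question": a value of 1.0 means every repeated answer agreed.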

The Fabrication of Information

LLMs are also known to fabricate information, which raises concerns about their reliability. As AI-generated content becomes more prevalent, the potential for misinformation grows with it. Decisions based on fabricated output could have dire, even catastrophic, consequences.

The Price War on LLMs

In a surprising turn of events, Zhipu AI, a Chinese tech unicorn, has slashed the prices of its LLM services by 90%. This move has sparked a price war in the LLM market, with other companies like Tencent Holdings and iFlytek following suit. While this may seem like a boon for consumers, it raises questions about the long-term sustainability of these models.

The Human Factor

At the heart of the LLM conundrum lies the human factor. As we strive to create machines that think like humans, we must confront our own biases and flaws. Do we want machines that mirror our imperfections, or machines that strive for perfection? The answer to this question will shape the future of AI and our relationship with these machines.

Conclusion

The allure of LLMs is undeniable, but we must not be blinded by their potential. As we move forward, it is essential to acknowledge the flaws in these systems and work towards creating more reliable and rational AI models. Only then can we truly harness the power of AI to transform our world.

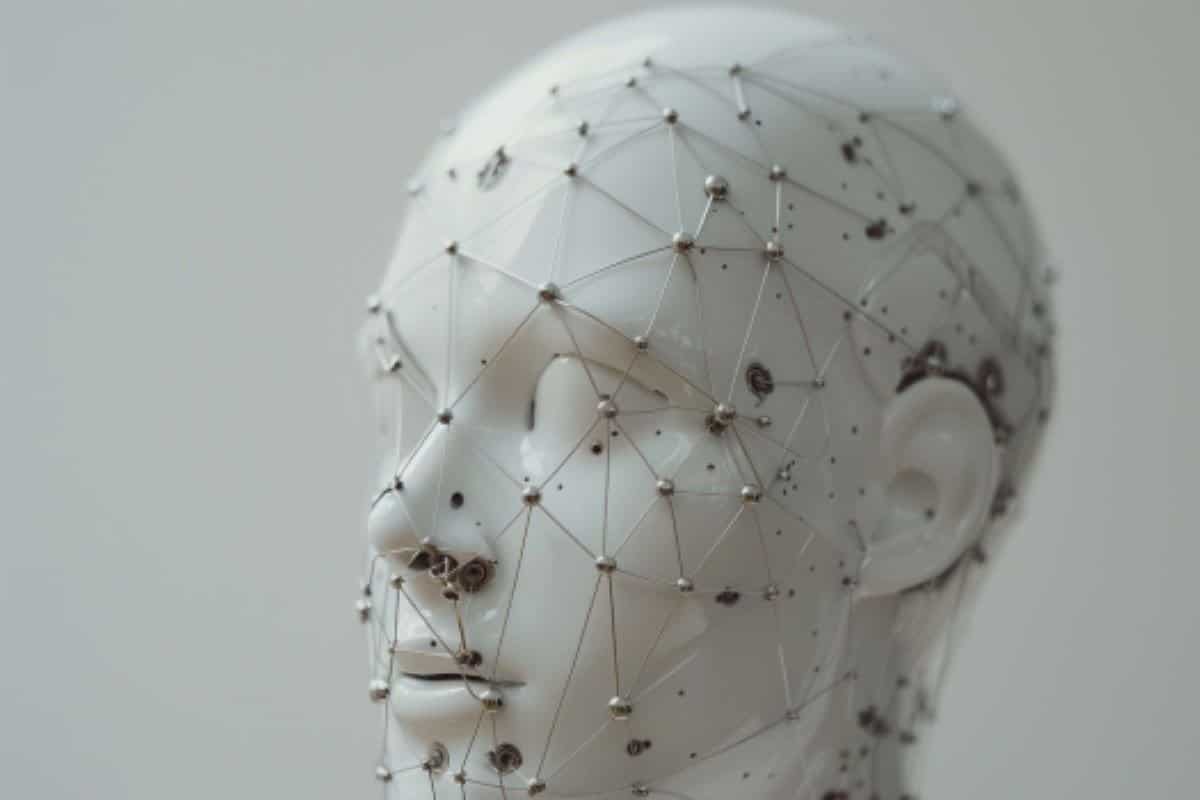

Image: Large Language Models and Reasoning

Image: Zhipu AI’s GLM series of LLMs

Image: ChatGPT and the Broken Promise of AI
