The State of Cybersecurity and Cybercriminals a Year After the Explosion of LLMs

It’s been a year since large language models (LLMs) dramatically improved, and threat actors quickly exploited generative AI for malicious purposes, while security teams began using AI to enhance their defenses.

AI is a double-edged sword in cybersecurity, as both threat actors and blue teamers have much to gain from it.

In the past year, the capabilities of large language models (LLMs) have expanded dramatically. OpenAI released GPT-4 in March 2023, a model that can, in some contexts, perform close to human level. For cybersecurity, that is both a blessing and a curse.

“Threat actors swiftly began exploiting generative AI to bolster their malicious activities, while security teams and cybersecurity companies were also quick to embrace generative AI to enhance their security operations.” - Nick Ascoli, senior product strategist at Flare.

Cybercriminals and LLMs

Generative AI and LLMs are a double-edged sword, as threat actors and blue teamers have much to gain from them. Cybercriminals have abused open-source models and created malicious versions of AI chatbots such as DarkBard, FraudGPT, and WormGPT. Last year, researchers started identifying open-source LLMs that had been tweaked for cybercrime and were sold on the dark web.

Relevant generative AI applications for threat actors include:

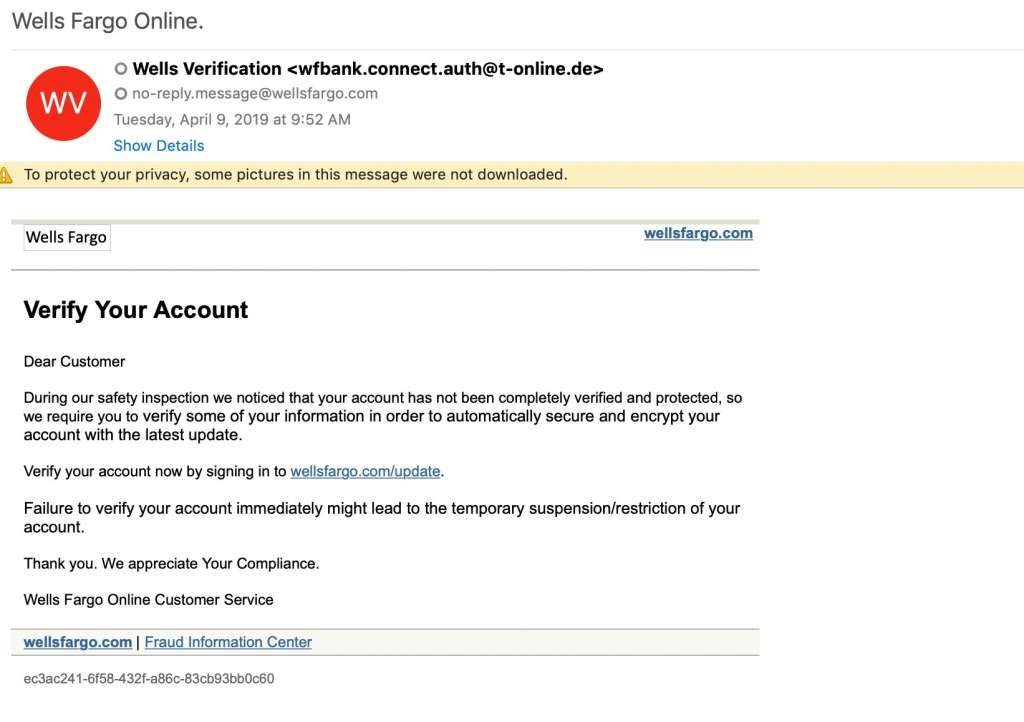

- Writing variations of convincing phishing emails.

- Social engineering with voice phishing (vishing).

- Automating previously manual elements of cybercrime to increase the rate and scale of attacks. For example, threat actors can buy stealer logs that contain login credentials and use an LLM to speed up the process of trying each username and password pair.

The use of open-source LLMs to facilitate targeted phishing is of particular concern because studies have shown that spear-phishing attacks are dramatically more effective than generic phishing emails. In fact, spear-phishing emails account for less than 0.1% of all emails sent yet cause 66% of all breaches, according to Barracuda’s 2023 Phishing Trends Report.

Phishing emails are a major concern in cybersecurity, and LLMs can make them even more effective.

Even within days of GPT-4’s release, threat actors were testing out how to use the LLM for malicious purposes, including:

- Voice spoofing to get MFA (multi-factor authentication) access or OTP codes

- Bypassing safeguards built into LLMs and jailbreaking them

Outsmarting Threat Actors

Though threat actors can and do use AI and LLMs in nefarious ways, security teams can and must stay ahead of cybercriminals’ AI applications to better protect their organizations.

The cybersecurity industry, in general, faces challenges with secure coding, bad documentation, and a lack of guardrails and training. These issues, though preventable, can make organizations’ attack surfaces even more vulnerable to threat actors.

However, AI can boost efforts and help secure entry points across the organization. AI is particularly useful for analyzing and synthesizing large amounts of information. This can improve an organization’s security posture and strengthen its infrastructure against threat actors’ attacks. Some ways AI can help cybersecurity teams include:

- Creating up-to-date and consistent documentation makes it harder for attackers to exploit vulnerabilities.

- Writing development guides with security in mind helps reduce the attack surface for malicious actors.

- Implementing and deploying custom code across the organization helps flag potential security risks in real time, allowing developers to address them immediately.

- Identifying external threats with automated risk monitoring lets teams respond before threat actors act on them.

With greater consistency in building coding infrastructure, fewer areas can be vulnerable to threat actor exploitation.
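As a rough illustration of the "custom code that flags potential security risks" idea above, the following minimal Python sketch parses source code and reports a few risky constructs. The rule set (`RISKY_CALLS` and the `shell=True` check) and the function name `find_risky_calls` are assumptions made for this example, not something taken from the article or from any particular product; a real policy would be much broader.

```python
import ast

# Hypothetical rule set for illustration; a real policy would cover far more.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source: str) -> list[tuple[int, str]]:
    """Return sorted (line number, message) pairs for risky constructs."""
    findings = []
    # ast.parse only builds a syntax tree; the scanned code is never executed.
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        # Direct calls such as eval(...) or exec(...)
        if isinstance(node.func, ast.Name) and node.func.id in RISKY_CALLS:
            findings.append((node.lineno, f"call to {node.func.id}()"))
        # Any call that passes shell=True, e.g. subprocess.run(cmd, shell=True)
        for kw in node.keywords:
            if (
                kw.arg == "shell"
                and isinstance(kw.value, ast.Constant)
                and kw.value.value is True
            ):
                findings.append((node.lineno, "shell=True in call"))
    return sorted(findings)


if __name__ == "__main__":
    sample = (
        "import subprocess\n"
        "x = eval(user_input)\n"
        "subprocess.run(cmd, shell=True)\n"
    )
    for lineno, message in find_risky_calls(sample):
        print(f"line {lineno}: {message}")
```

A check like this could run in a pre-commit hook or CI job so that developers see the warning while the change is still in front of them.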

A Peek into the Future of AI

New technology makes the future exciting but uncertain. Organizations should be aware of the short- and medium-term AI risks below and put protections in place for them.

Short-term risks

- Data leakage risks: Employees using LLMs may unintentionally disclose sensitive data to the models, potentially losing control of this information. Cybercriminals could exploit compromised accounts to access the user’s interaction history with the model and sell this data on the dark web. Security teams should conduct workshops explaining how LLMs work, their data practices, and the potential risks of entering proprietary information. They can also develop guidelines outlining what data is off-limits for LLMs, including examples like formulas, client details, or financial data.
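Guidelines about off-limits data can be backed by a simple technical control. The sketch below, a minimal illustration rather than a complete data loss prevention tool, redacts a few sensitive patterns from a prompt before it would be sent to an LLM. The pattern set and the `redact` function are hypothetical; the access key pattern is a commonly used heuristic for AWS access key IDs, and the others are deliberately simplistic.

```python
import re

# Hypothetical, deliberately small pattern set for illustration only.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "AWS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def redact(prompt: str) -> tuple[str, list[str]]:
    """Replace sensitive matches with placeholders.

    Returns the redacted prompt and the labels of the patterns that matched.
    """
    hits = []
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label} REDACTED]", prompt)
        if count:
            hits.append(label)
    return prompt, hits


if __name__ == "__main__":
    text = "Contact jane.doe@example.com, key AKIAABCDEFGHIJKLMNOP"
    cleaned, hits = redact(text)
    print(cleaned)
    print(hits)
```

Pattern matching of this kind only catches data with a recognizable shape. Formulas, client details in free text, and similar proprietary information still depend on the training and guidelines described above.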

Data leakage risks are a major concern in the short term, and security teams must take action to prevent them.

Medium-term risks

In the coming years, agentic models could significantly alter the threat landscape. As increasingly capable models are integrated to create AI “agents,” language models could automate traditionally manual tasks, such as:

- Vulnerability scanning: Language models can actively search for vulnerabilities, as well as stealer logs containing corporate access credentials and secrets exposed on platforms like GitHub, faster and more comprehensively than humans. The good news is that security teams can, too. Instead of falling behind with manual monitoring, security teams can automate monitoring for external threats and remediate them before threat actors can exploit them.
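To make the defensive side of this concrete, here is a minimal sketch of automated secret scanning over a local checkout of a repository. It assumes a small, hypothetical set of regular expressions (`SECRET_PATTERNS`) and helper names (`scan_text`, `scan_tree`) chosen for this example; dedicated scanners use far larger rule sets plus entropy checks, so treat this as an illustration of the idea only.

```python
import re
from pathlib import Path

# Hypothetical rules for illustration; real scanners ship many more.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    "hardcoded_password": re.compile(
        r"(?i)\bpassword\s*=\s*['\"][^'\"]+['\"]"
    ),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line number, rule name) pairs for every suspicious line."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


def scan_tree(root: str) -> dict[str, list[tuple[int, str]]]:
    """Scan every readable UTF-8 text file under root; skip everything else."""
    results = {}
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # binary or unreadable file
        findings = scan_text(text)
        if findings:
            results[str(path)] = findings
    return results


if __name__ == "__main__":
    for file_name, findings in scan_tree(".").items():
        for lineno, rule in findings:
            print(f"{file_name}:{lineno}: {rule}")
```

Running a scan like this on every commit, and on public repositories associated with the organization, gives defenders a chance to rotate an exposed credential before an attacker finds it.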

Vulnerability scanning is a major concern in the medium term, and security teams must stay ahead of threat actors.

Cybersecurity professionals can build a stronger defense by understanding how LLMs are being weaponized. With strategies including continuous monitoring for suspicious activity, user education on phishing tactics, and leveraging AI-powered security solutions specifically designed to detect LLM-generated content, security professionals can ensure they remain a step ahead of malicious actors in the ever-changing digital landscape.