The AI Bug That Nobody Wants to Fix

Imagine a brand-new and almost entirely untested technology, capable of crashing at any moment under the slightest provocation, with no explanation and no way to diagnose the problem. No self-respecting IT department would want anything to do with it, and any that had to would keep it isolated from core systems.

The Rise of Mid-Tier AI

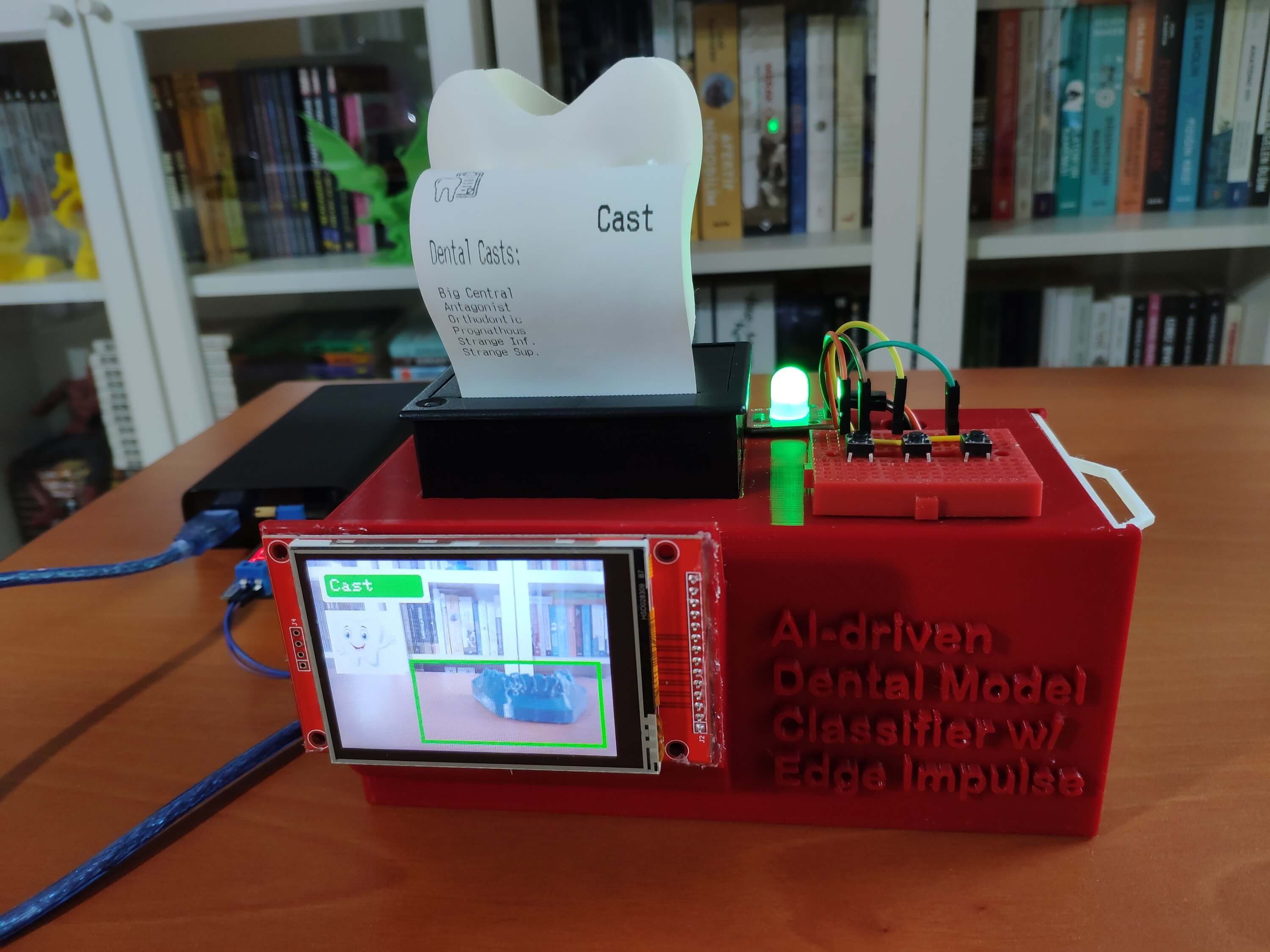

Flawed neural nets can seem harmless and still be dangerous. So why are reports of problems being roundly ignored? The age of AI has ushered in the age of “mid”: not great, but not horrid either. This sort of AI-driven classifier lands squarely in that middle.

A mid-tier AI classifier, not great but not horrid either.

The LLM Kryptonite

I stumbled upon LLM Kryptonite, a model-breaking bug that no one wants to fix. It affects nearly every LLM I tested, with Anthropic's the exception. To report it, I’d need to contact every LLM vendor in the field.

The LLM Kryptonite bug, a model-breaking issue.

External Confirmation

That external confirmation – Groq had been able to replicate my finding across the LLMs it supports – changed the picture completely. It meant I wasn’t just imagining this, nor seeing something peculiar to myself.

External confirmation of the LLM Kryptonite bug.

A Bug or a Suggestion?

One reply read: “We’ve looked over your report, and what you’re reporting appears to be a bug/product suggestion, but does not meet the definition of a security vulnerability.”

At a certain very large tech company, I asked a VP-level contact for a connection to anyone in the AI security group. A week later I received a response to the effect that, after the rushed release of that firm’s own upgraded LLM, the AI team was too busy putting out fires to have time for anything else.

A busy AI team, too busy to address the LLM Kryptonite bug.
