By Kai Tanaka

Social Learning for LLMs

Large language models (LLMs) have substantially advanced natural language processing, both in understanding and in generating human language. A recent paper titled “Social Learning: Towards Collaborative Learning with Large Language Models” asks whether these models can improve their capabilities by learning from each other, much as people learn in social settings.

In this social learning framework, LLMs share knowledge with each other in natural language and in a privacy-aware manner. Unlike traditional collaborative learning approaches that rely on exchanging gradients, the agents here teach each other purely through language.

Synthetic Examples

One aspect of the study is the use of synthetic examples as a teaching tool. In this setup, teacher LLMs generate new examples for a given task and share them with student LLMs. The rationale is to provide fresh examples that differ from the original data while remaining just as useful for teaching. The experiments show that these synthetic examples perform comparably to the original data across various tasks.
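The flow described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the `LLM` callable type, the prompt wording, the `Input:`/`Label:` format, the spam task, and the stub teacher are all assumptions made for the example. The filter that drops verbatim copies of private inputs is likewise an added safeguard for illustration, not something the study is stated to do.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]   # (input text, label)
LLM = Callable[[str], str]  # hypothetical interface: prompt in, completion out


def format_examples(examples: List[Example]) -> str:
    """Render examples in a simple 'Input/Label' layout (assumed format)."""
    return "\n\n".join(f"Input: {x}\nLabel: {y}" for x, y in examples)


def build_teacher_prompt(private_examples: List[Example], n_new: int) -> str:
    """Ask the teacher for new examples of the same task (assumed wording)."""
    return (
        "Here are some examples of a task:\n\n"
        f"{format_examples(private_examples)}\n\n"
        f"Write {n_new} new examples of the same task that differ from the "
        "ones above, using the same 'Input:' and 'Label:' layout."
    )


def parse_examples(text: str) -> List[Example]:
    """Parse 'Input:' / 'Label:' line pairs out of the teacher's reply."""
    examples: List[Example] = []
    current_input = None
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("Input:"):
            current_input = line[len("Input:"):].strip()
        elif line.startswith("Label:") and current_input is not None:
            examples.append((current_input, line[len("Label:"):].strip()))
            current_input = None
    return examples


def teach_with_synthetic_examples(
    teacher: LLM, private_examples: List[Example], n_new: int = 2
) -> List[Example]:
    """Return teacher-generated examples, dropping verbatim private inputs."""
    synthetic = parse_examples(teacher(build_teacher_prompt(private_examples, n_new)))
    private_inputs = {x for x, _ in private_examples}
    return [(x, y) for x, y in synthetic if x not in private_inputs]


def build_student_prompt(shared_examples: List[Example], query: str) -> str:
    """Few-shot prompt the student sees: only synthetic examples plus the query."""
    return f"{format_examples(shared_examples)}\n\nInput: {query}\nLabel:"


# Stub standing in for a real teacher model; its last example copies a
# private input on purpose so the filter has something to remove.
def stub_teacher(prompt: str) -> str:
    return (
        "Input: Win a free cruise now\nLabel: spam\n\n"
        "Input: Lunch at noon?\nLabel: not spam\n\n"
        "Input: Claim your prize today\nLabel: spam"
    )


PRIVATE = [("Claim your prize today", "spam"), ("See you at the meeting", "not spam")]
SHARED = teach_with_synthetic_examples(stub_teacher, PRIVATE)
```

Only `SHARED` ever reaches the student; the private examples stay with the teacher. In practice `stub_teacher` would be replaced by a call to an actual model.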

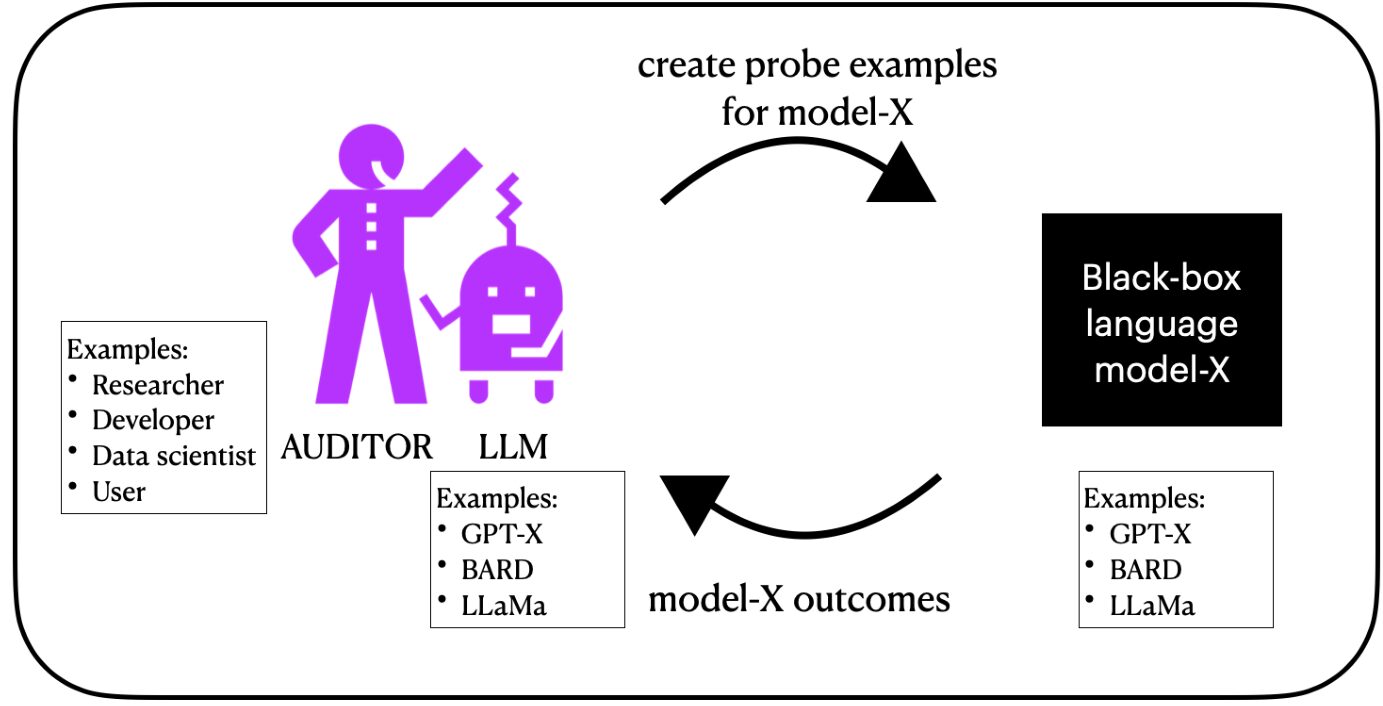

Illustration of LLMs sharing synthetic examples for collaborative learning.

Synthetic Instruction

Building on the success of language models in following instructions, the study also explores synthetic instructions. Here the teacher generates a task instruction in natural language for the student, rather than a set of examples. The results indicate that providing a generated instruction significantly boosts the student's performance. However, the approach runs into trouble when the teacher model fails to produce a suitable instruction, which points to the need for further refinement of instruction generation.
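A rough sketch of this teacher-to-student handoff follows. The `LLM` callable type, the prompt wording, the spam task, and both stub models are hypothetical stand-ins, and the check that rejects instructions quoting a private input verbatim is an assumption added for illustration rather than a step taken from the paper.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]   # (input text, label)
LLM = Callable[[str], str]  # hypothetical interface: prompt in, completion out


def build_instruction_request(private_examples: List[Example]) -> str:
    """Ask the teacher to describe the task instead of sharing examples."""
    shown = "\n".join(f"Input: {x}\nLabel: {y}" for x, y in private_examples)
    return (
        "Below are examples of a task.\n\n"
        f"{shown}\n\n"
        "Write a short instruction that explains how to solve this task "
        "without quoting any of the examples."
    )


def generate_instruction(teacher: LLM, private_examples: List[Example]) -> str:
    """Get an instruction from the teacher and reject verbatim leaks."""
    instruction = teacher(build_instruction_request(private_examples)).strip()
    leaked = [x for x, _ in private_examples if x in instruction]
    if leaked:
        raise ValueError("instruction quotes a private example verbatim")
    return instruction


def answer_with_instruction(student: LLM, instruction: str, query: str) -> str:
    """Zero-shot prompt: the student sees only the instruction and the query."""
    return student(f"{instruction}\n\nInput: {query}\nLabel:").strip()


# Stubs standing in for real models, so the sketch runs on its own.
def stub_teacher(prompt: str) -> str:
    return "Label a message 'spam' if it advertises giveaways, otherwise 'not spam'."


def stub_student(prompt: str) -> str:
    query = prompt.rsplit("Input:", 1)[1]  # text after the final 'Input:' marker
    return "spam" if "free" in query.lower() else "not spam"


PRIVATE = [("Claim your prize today", "spam"), ("See you at the meeting", "not spam")]
INSTRUCTION = generate_instruction(stub_teacher, PRIVATE)
PREDICTION = answer_with_instruction(stub_student, INSTRUCTION, "Free tickets inside")
```

The student never sees the teacher's examples, only the instruction text, which is why the quality of that instruction matters so much for the outcome.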

Memorization of Private Examples

Maintaining data privacy while still enabling effective learning is a crucial aspect of social learning for LLMs. To address this, the study evaluates how much of the teacher's private examples is memorized and passed on when instructing students. It quantifies this by comparing the student model's confidence in examples that were shared during teaching with its confidence in similar examples that were not shared. The results suggest that the student's confidence in the shared examples is only marginally higher than in similar, non-shared instances, which illustrates the balance that has to be struck between knowledge transfer and data privacy.
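One simple way to express such a comparison is a gap between average confidence scores, sketched below. This is an assumed simplification, not the metric used in the paper: the `score` function is a hypothetical stand-in for a model's log-likelihood of a text, and the numbers in the stub are made up purely so the code runs.

```python
from statistics import mean
from typing import Callable, Sequence


def confidence_gap(
    score: Callable[[str], float],
    shared: Sequence[str],
    non_shared: Sequence[str],
) -> float:
    """Mean confidence on shared examples minus mean on similar non-shared ones.

    A gap near zero suggests the student learned little about the specific
    shared examples; a large positive gap suggests memorization.
    """
    shared_mean = mean([score(text) for text in shared])
    non_shared_mean = mean([score(text) for text in non_shared])
    return shared_mean - non_shared_mean


# Made-up log-likelihoods for illustration only (not results from the study).
FAKE_LOG_LIKELIHOODS = {
    "shared example A": -12.0,
    "shared example B": -11.5,
    "similar example C": -12.25,
    "similar example D": -11.75,
}


def stub_score(text: str) -> float:
    """Stand-in for querying the student model's log-likelihood of a text."""
    return FAKE_LOG_LIKELIHOODS[text]


GAP = confidence_gap(
    stub_score,
    shared=["shared example A", "shared example B"],
    non_shared=["similar example C", "similar example D"],
)
```

With a real model, `stub_score` would be replaced by a function that returns the student's log-likelihood for each text, and the two example sets would need to be matched closely enough that any gap reflects sharing rather than differences in difficulty.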

Conclusion and Future Directions

The framework introduced in this study lays a foundation for collaborative learning among LLMs, in which knowledge is transferred through textual communication while data privacy is preserved. By identifying the sharing of examples and the sharing of instructions as two basic approaches, the research sets the stage for further work on social learning for language models. Looking ahead, feedback loops and iterative teaching processes could improve the teaching dynamics among LLMs, and the same ideas could be extended to modalities beyond text.