Securing AI Development: Addressing Vulnerabilities from Hallucinated Code

The advent of Large Language Models (LLMs) has transformed the software development landscape, offering unprecedented support for developers. However, recent discoveries have raised concerns about the reliability of code generated by these models. The emergence of AI “hallucinations” is particularly troubling, as they can facilitate cyberattacks and introduce novel threat vectors into the software supply chain.

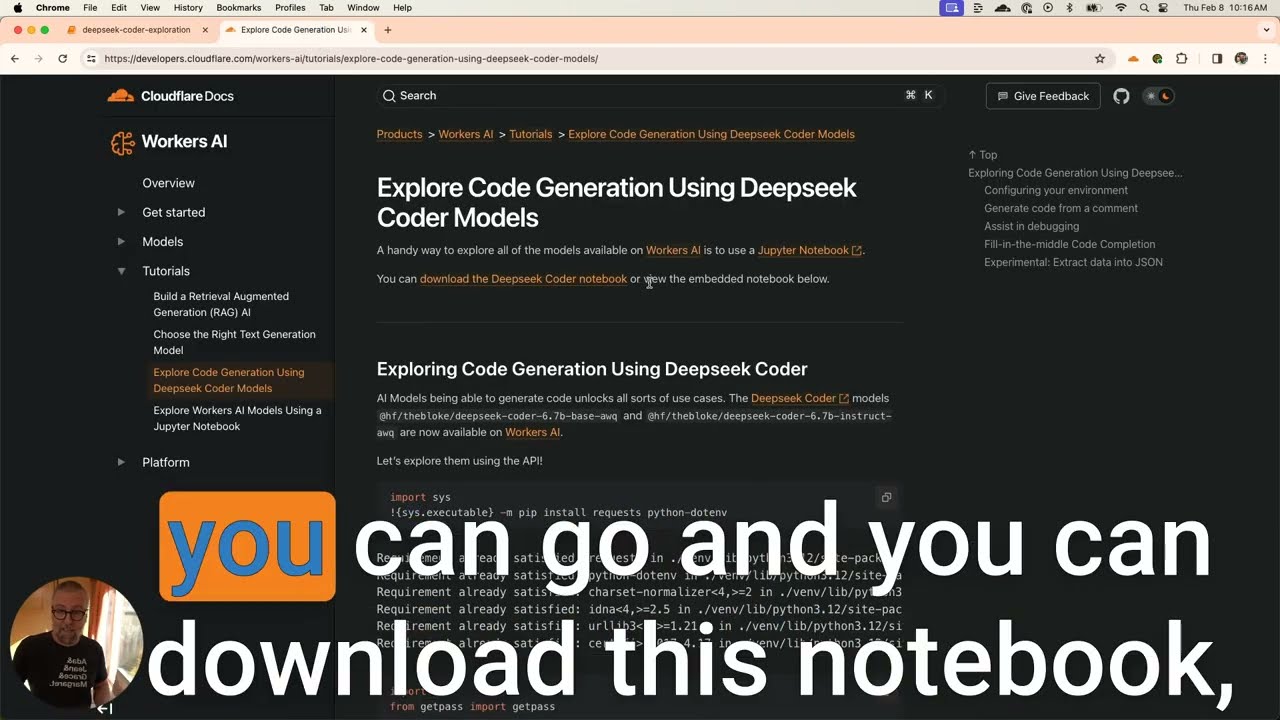

AI-generated code recommendations can pose significant security risks

Security researchers have run experiments that show how real this threat is. By posing common Stack Overflow questions to AI models such as ChatGPT, they observed cases where the model recommended packages that do not exist. The researchers then published packages under these fictitious names and confirmed that they became available through popular package installers, which shows how readily an attacker could exploit such recommendations.
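One basic defence against this kind of recommendation is to check that a suggested package name is actually registered before installing it. The following is a minimal sketch, assuming the packages are Python packages hosted on PyPI; the helper names `package_exists` and `unknown_packages` are hypothetical and chosen for illustration. It relies on PyPI's JSON endpoint, which answers with HTTP 404 for names that are not registered.

```python
import urllib.error
import urllib.request

# PyPI's JSON endpoint returns HTTP 404 for project names that are not registered.
PYPI_URL = "https://pypi.org/pypi/{name}/json"


def package_exists(name, opener=urllib.request.urlopen):
    """Return True if PyPI has a project called `name`, False on HTTP 404.

    `opener` is injectable (an assumption of this sketch) so the check
    can be exercised without network access.
    """
    try:
        with opener(PYPI_URL.format(name=name), timeout=10) as response:
            return response.status == 200
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return False
        raise  # Other HTTP errors are not evidence either way.


def unknown_packages(names, exists=package_exists):
    """Return the suggested names that the registry does not know about."""
    return [name for name in names if not exists(name)]
```

A name that does exist is not automatically safe, because an attacker may already have registered a previously hallucinated name. A check like this only catches recommendations that nobody has published yet; it does not replace reviewing who maintains a package and what it contains.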

The widespread practice of code reuse in modern software development exacerbates the risk

Understanding Hallucinated Code

Hallucinated code refers to code snippets or programming constructs generated by AI language models that appear syntactically correct but are functionally flawed or irrelevant. These “hallucinations” emerge from the models’ ability to predict and generate code based on patterns learned from vast datasets.
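The gap between "syntactically correct" and "functionally correct" can be illustrated with a small sketch. The snippet in `GENERATED` is a made-up example of plausible-looking output, not taken from any real model, and the helper names are hypothetical. It parses without error but returns the wrong answer because it never sorts its input.

```python
import ast

# Hypothetical AI-generated snippet: valid Python, but it forgets to sort
# the values before picking the middle element.
GENERATED = '''
def median(values):
    middle = len(values) // 2
    return values[middle]
'''


def parses(source):
    """Return True if the source is syntactically valid Python."""
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False


def passes_check(source):
    """Run the snippet and compare its result with a known answer.

    Executing untrusted code like this should only be done in an
    isolated environment; it is done inline here for brevity.
    """
    namespace = {}
    exec(compile(source, "<generated>", "exec"), namespace)
    return namespace["median"]([3, 1, 2]) == 2
```

Here `parses(GENERATED)` succeeds while `passes_check(GENERATED)` fails, which is why a syntax check or a quick read-through is not enough to accept generated code.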

AI models can generate code that lacks a true understanding of context or intent

The Impact of Hallucinated Code

Hallucinated code poses significant security risks, which makes it a serious concern for software development. One such risk is that AI-generated snippets unintentionally introduce vulnerabilities that attackers can then exploit, for example to inject malicious code.

Hallucinated code can lead to security breaches and financial repercussions

Current Mitigation Efforts

Current mitigation efforts against the risks associated with hallucinated code involve a multifaceted approach aimed at enhancing the security and reliability of AI-generated code recommendations.

Integrating human oversight into code review processes is crucial
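One way to support human oversight is to flag automatically which dependencies in a generated snippet a reviewer has not yet vetted. The sketch below assumes the generated code is Python and that the team maintains a set of approved module names; `imported_modules` and `needs_review` are hypothetical helper names. It uses the standard `ast` module to collect imports without executing the code.

```python
import ast


def imported_modules(source):
    """Return the top-level module names imported by `source`."""
    modules = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 skips relative imports, which refer to local code.
            modules.add(node.module.split(".")[0])
    return modules


def needs_review(source, approved):
    """Return imported modules that are not on the approved list, sorted."""
    return sorted(imported_modules(source) - set(approved))


snippet = (
    "import os\n"
    "import fastjsonx\n"
    "from requests.adapters import HTTPAdapter\n"
)
flagged = needs_review(snippet, approved={"os", "requests"})
```

With this input, `flagged` contains only `fastjsonx`, the one import a reviewer has not approved. Note that an import name does not always match the name of the package that provides it, so the flagged list is a prompt for a human reviewer rather than a verdict.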

Future Strategies for Securing AI Development

Future strategies for securing AI development encompass advanced techniques, collaboration and standards, and ethical considerations.

Emphasis is required on enhancing training data quality over quantity

The Bottom Line

In conclusion, the emergence of hallucinated code in AI-generated solutions presents significant challenges for software development, ranging from security risks to economic consequences and trust erosion. Current mitigation efforts focus on integrating secure AI development practices, rigorous testing, and maintaining context-awareness during code generation.

Ensuring the security and reliability of AI-generated code is crucial
