Neurobiological Inspiration for AI: The HippoRAG Framework for Long-Term LLM Memory

The current advancements in Large Language Models (LLMs) still require improvement to incorporate new knowledge without losing previously acquired information, a problem known as catastrophic forgetting. Current methods, such as retrieval-augmented generation (RAG), have limitations in performing tasks that require integrating new knowledge across different passages since it encodes passages in isolation, making it difficult to identify relevant information spread across different passages.

Inspired by neurobiological principles, particularly the hippocampal indexing theory, HippoRAG, a retrieval framework, has been designed to address these challenges. It enables deeper and more efficient knowledge integration.

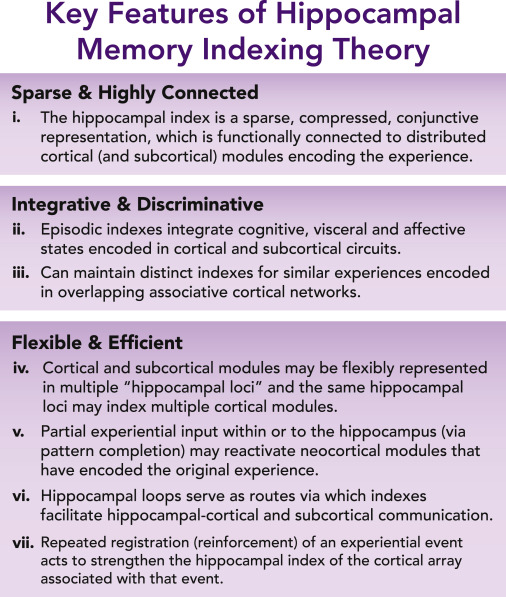

The hippocampal indexing theory inspires the HippoRAG framework.

The hippocampal indexing theory inspires the HippoRAG framework.

Current RAG methods provide long-term memory to LLMs, thus updating the model with new knowledge. However, they fall short in aiding knowledge integration of information spread across multiple passages, as they encode each passage in isolation. This limitation hinders their effectiveness in complex tasks like scientific literature reviews, legal case briefings, and medical diagnoses, which demand the synthesis of information from various sources.

HippoRAG: A Novel Approach

A team of researchers from Ohio State University and Stanford University introduces HippoRAG, a unique approach that leverages the associative memory functions of the human brain, particularly the hippocampus. This novel method utilizes a graph-based hippocampal index to create and utilize a network of associations, enhancing the model’s ability to navigate and integrate information from multiple passages.

A graph-based hippocampal index enables HippoRAG to build a comprehensive web of associations.

A graph-based hippocampal index enables HippoRAG to build a comprehensive web of associations.

HippoRAG’s Methodology

HippoRAG’s methodology involves two main phases: offline indexing and online retrieval. The indexing process of HippoRAG involves a meticulous procedure of processing passages using an instruction-tuned LLM and a retrieval encoder. By extracting named entities and utilizing Open Information Extraction (OpenIE), HippoRAG constructs a graph-based hippocampal index that captures the relationships between entities and passages.

During the retrieval process, HippoRAG utilizes a 1-shot prompt to extract named entities from a query, encoding them with the retrieval encoder. By identifying query nodes with the highest cosine similarity to the query-named entities, HippoRAG efficiently retrieves relevant information from its hippocampal index.

Superior Performance

When tested on multi-hop question answering benchmarks, including MuSiQue and 2WikiMultiHopQA, HippoRAG demonstrated its superiority by outperforming state-of-the-art methods by up to 20%. Notably, HippoRAG’s single-step retrieval achieved comparable or better performance than iterative methods like IRCoT while being 10-30 times cheaper and 6-13 times faster.

HippoRAG outperforms state-of-the-art methods in knowledge-intensive NLP tasks.

Conclusion

In conclusion, the HippoRAG framework significantly advances large language models (LLMs). It is not just a theoretical advancement but a practical solution enabling deeper and more efficient integration of new knowledge. Inspired by the associative memory functions of the human brain, HippoRAG improves the model’s ability to retrieve and synthesize information from multiple sources. The paper’s findings demonstrate the superior performance of HippoRAG in knowledge-intensive NLP tasks, highlighting its potential for real-world applications that require continuous knowledge integration.