Revolutionizing LLM Evaluation: The Arena Learning Breakthrough

Large language models (LLMs) hold immense potential for changing the way we interact with technology, but assessing how well they actually perform has always been difficult, especially after training. The gold standard, human-annotated battles in an online Chatbot Arena, is both costly and slow, and that bottleneck has long held back the improvement of LLMs. But what if I told you that a new approach simulates these arena battles instead, paving the way for continuous model improvement?

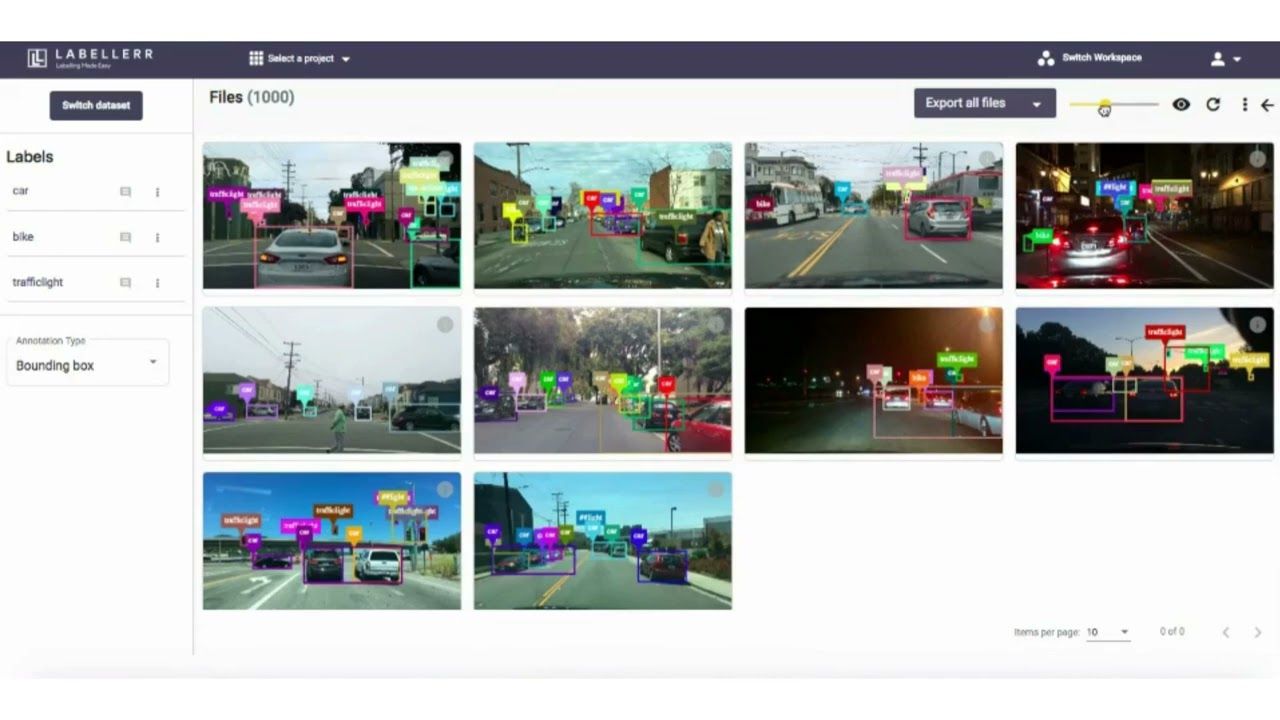

Assessing battle outcomes with AI-driven annotations

In this article, I’ll explore ‘Arena Learning,’ an approach that uses simulated battles and AI-driven annotations to evaluate LLMs more efficiently. The same battle results can also be used to fine-tune and reinforce the models being evaluated.

The Need for Efficient Evaluation

The rapid growth of large model applications has increased the demand for LLM services. As users’ intentions and instructions evolve, these models must adapt to stay relevant. That makes an efficient data flywheel, a loop in which models continuously collect feedback and use it to improve their capabilities, crucial for next-generation AI research.

A data flywheel for continuous model improvement

Simulating Arena Battles

Human-annotated battles are expensive and slow. Arena Learning gets around both limitations by simulating the battles offline: models are run against a comprehensive set of instructions, and AI-driven annotations decide the outcome of each battle in place of human annotators. The target model can then be fine-tuned and reinforced using those outcomes, through both supervised learning and reinforcement learning.
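To make the loop above concrete, here is a minimal sketch of one round of simulated battles. Everything in it is an illustrative assumption rather than the method's actual implementation: the names `simulate_battles`, `BattleReport`, and `toy_length_judge` are hypothetical, the models are stand-in functions, and the judge simply prefers the longer answer where a real setup would call an AI annotator. How the outcomes are split into supervised examples and preference pairs is also my own guess at one reasonable mapping.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]            # instruction -> answer
Judge = Callable[[str, str, str], str]  # (instruction, answer_a, answer_b) -> "A", "B" or "tie"


@dataclass
class BattleReport:
    """Aggregated outcome of one round of simulated battles (hypothetical structure)."""
    wins: int = 0
    battles: int = 0
    # (instruction, better answer) pairs, usable as supervised fine-tuning data.
    sft_examples: List[Tuple[str, str]] = field(default_factory=list)
    # (instruction, chosen, rejected) triples, usable as preference data for RL.
    preference_pairs: List[Tuple[str, str, str]] = field(default_factory=list)

    @property
    def win_rate(self) -> float:
        return self.wins / self.battles if self.battles else 0.0


def simulate_battles(
    instructions: List[str],
    target: Model,
    opponents: Dict[str, Model],
    judge: Judge,
) -> BattleReport:
    """Run the target model against every opponent on every instruction."""
    report = BattleReport()
    for instruction in instructions:
        target_answer = target(instruction)
        for opponent in opponents.values():
            opponent_answer = opponent(instruction)
            # The target is always answer "A", the opponent answer "B".
            verdict = judge(instruction, target_answer, opponent_answer)
            report.battles += 1
            if verdict == "A":
                report.wins += 1
                report.preference_pairs.append(
                    (instruction, target_answer, opponent_answer)
                )
            elif verdict == "B":
                # Assumption: a lost battle yields a supervised example.
                report.sft_examples.append((instruction, opponent_answer))
                report.preference_pairs.append(
                    (instruction, opponent_answer, target_answer)
                )
            # Ties are counted as battles but produce no training data.
    return report


def toy_length_judge(instruction: str, answer_a: str, answer_b: str) -> str:
    """Placeholder judge: prefers the longer answer. NOT a real quality signal."""
    if len(answer_a) > len(answer_b):
        return "A"
    if len(answer_b) > len(answer_a):
        return "B"
    return "tie"


if __name__ == "__main__":
    report = simulate_battles(
        instructions=["Explain recursion.", "What is a data flywheel?"],
        target=lambda q: "Short answer.",
        opponents={
            "strong": lambda q: "A longer, more detailed answer.",
            "weak": lambda q: "Hm.",
        },
        judge=toy_length_judge,
    )
    print(f"win rate: {report.win_rate:.2f}")
    print(f"SFT examples: {len(report.sft_examples)}")
    print(f"preference pairs: {len(report.preference_pairs)}")
```

In this sketch the win rate doubles as the evaluation signal, while the collected examples are what a later training step would consume. Swapping `toy_length_judge` for a call to a judge model is the only change needed to move from the toy setting toward a realistic one.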

Simulating arena battles for efficient evaluation

The Future of LLM Evaluation

Arena Learning has the potential to change the way we evaluate and improve LLMs. As I see it, replacing slow, costly human annotation with simulated battles makes model development both faster and cheaper. The implications range from better language model services to progress in next-generation AI research.

“Building an efficient data flywheel has become a key direction for next-generation AI research.” – A renowned AI researcher

The future of LLM evaluation with Arena Learning

Conclusion

In conclusion, Arena Learning simulates arena battles offline and annotates them with AI, enabling efficient evaluation and continuous improvement of LLMs. As we move forward, I’m excited to see the impact this approach will have on the AI research community and on the many applications that stand to benefit from it.