Unleashing the Power of NVIDIA NIM and Amazon SageMaker for Optimized LLM Inference

As the world of artificial intelligence continues to evolve, the collaboration between NVIDIA NIM microservices and Amazon SageMaker has opened up new possibilities for deploying and optimizing large language models (LLMs). By leveraging cutting-edge technologies like NVIDIA TensorRT and Triton Inference Server on NVIDIA GPUs within the SageMaker environment, the process of deploying state-of-the-art LLMs has been revolutionized.

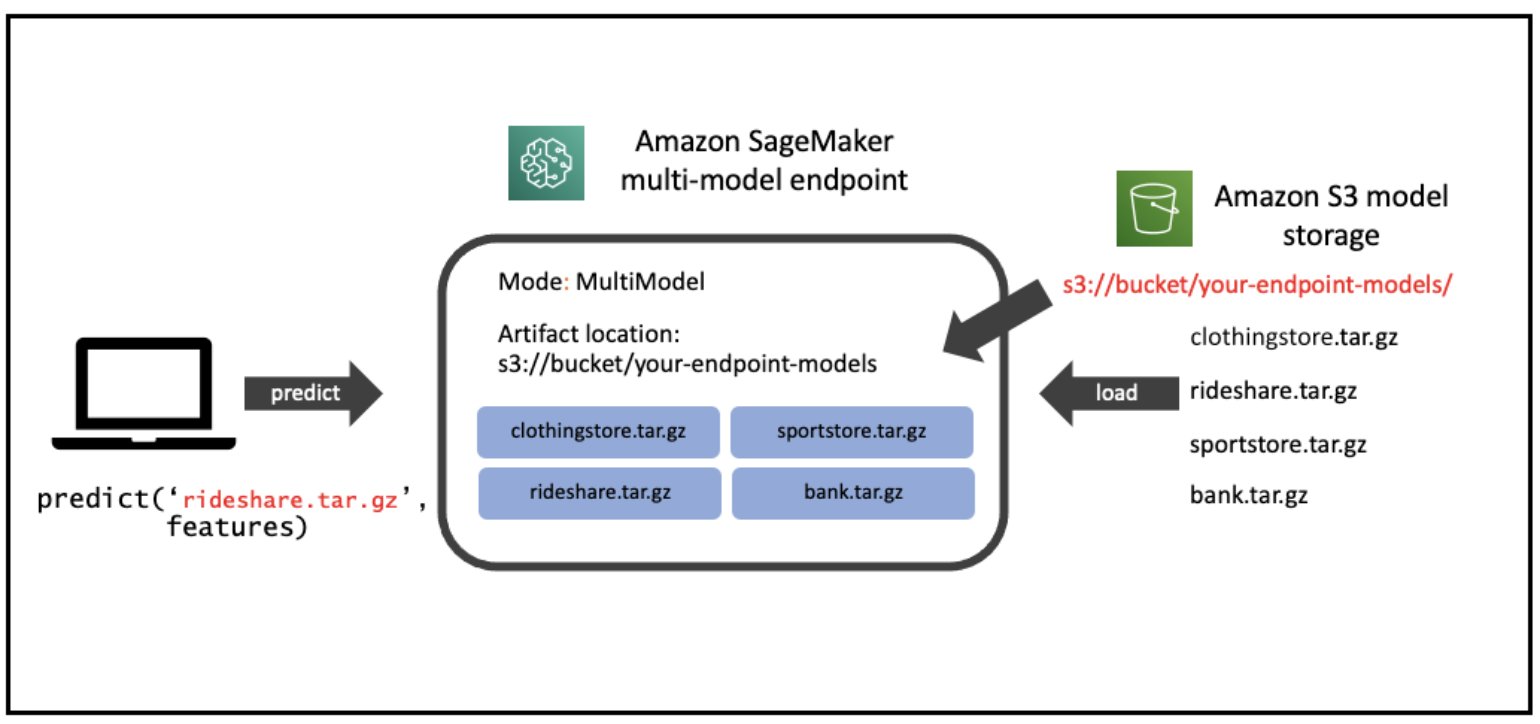

Image for illustrative purposes

Image for illustrative purposes

Enhancing Model Performance and Cost Efficiency

The integration of NVIDIA NIM with Amazon SageMaker brings forth a seamless experience in deploying industry-leading LLMs. With the power of NVIDIA TensorRT-LLM and optimized model performance, the deployment time has been significantly reduced from days to mere minutes. This advancement not only enhances the performance of LLMs but also ensures cost efficiency in the deployment process.

Introduction to NVIDIA NIM

NVIDIA NIM stands out as a pivotal component of the NVIDIA AI Enterprise software platform available on the AWS marketplace. This set of inference microservices empowers applications with state-of-the-art LLM capabilities, enabling natural language processing and understanding. By utilizing pre-built NVIDIA containers optimized for specific NVIDIA GPUs, users can effortlessly deploy popular LLMs or create custom containers using NIM tools.

Streamlining LLM Inference with NIM

For models not included in NVIDIA’s curated selection, NIM offers essential utilities like the Model Repo Generator. This tool simplifies the creation of TensorRT-LLM-accelerated engines and NIM-format model directories through a user-friendly YAML file. Additionally, the integrated vLLM community backend supports cutting-edge models, ensuring seamless integration into the optimized stack.

Advanced Hosting Technologies

NIM goes beyond inference optimization by providing advanced hosting technologies such as in-flight batching. This technique optimizes scheduling by evicting finished sequences from a batch immediately, allowing for continuous processing of new requests. Such innovations maximize compute instance and GPU utilization, enhancing overall system efficiency.

Future Prospects and Customization

Looking ahead, NIM is set to introduce Parameter-Efficient Fine-Tuning (PEFT) methods like LoRA and P-tuning, offering users enhanced customization options. The platform’s roadmap includes expanding LLM support by integrating Triton Inference Server, TensorRT-LLM, and vLLM backends, further solidifying its position as a frontrunner in LLM deployment.

Conclusion

In conclusion, the synergy between NVIDIA NIM and Amazon SageMaker presents a compelling solution for organizations seeking optimized LLM inference capabilities. By combining performance enhancements with cost efficiency, deploying LLMs has never been easier. Stay tuned for the upcoming advancements in LLM deployment facilitated by NIM’s commitment to innovation.