The Future of Large Language Models: A Novel Approach to Pruning

As Large Language Models (LLMs) continue to grow in size and complexity, the need for efficient deployment and practical utilization becomes increasingly important. Recent advancements in LLMs have led to models containing billions or even trillions of parameters, achieving remarkable performance across domains. However, their massive size poses challenges in practical deployment due to stringent hardware requirements.

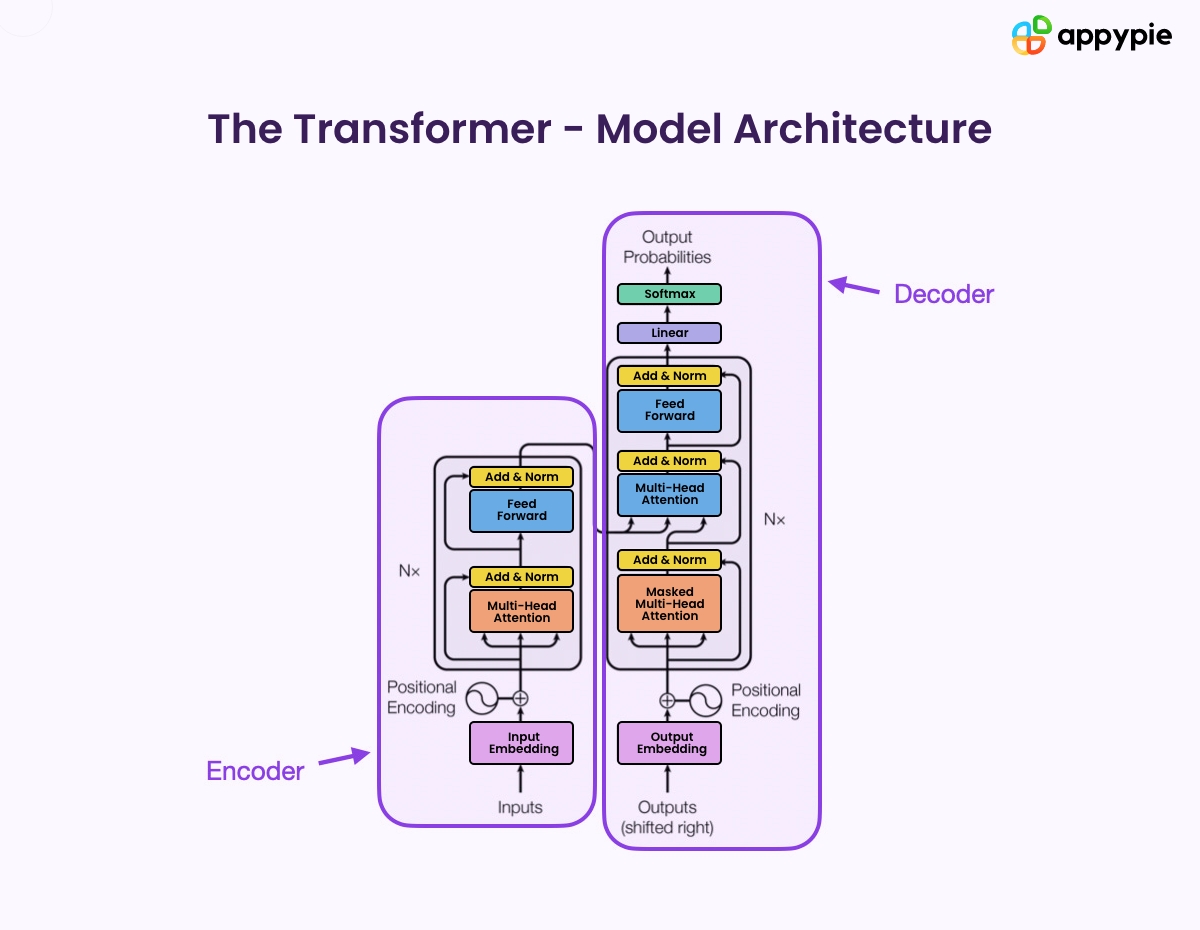

Illustration of a Large Language Model architecture

Illustration of a Large Language Model architecture

Research has focused on scaling models to enhance performance, guided by established scaling laws. This escalation underscores the need to address hardware limitations to facilitate the widespread utilization of these powerful LLMs. Prior works address the challenge of deploying massive trained models by focusing on model compression techniques. These techniques, including quantization and pruning, aim to reduce inference costs.

“The proposed method’s comparative experiments against benchmarks (including MMLU, CMMLU, and CMNLI) and baseline techniques (including LLMPru, SliceGPT, and LaCo) are commonly used in LLM evaluation.”

The researchers from Baichuan Inc. and the Chinese Information Processing Laboratory Institute of Software, Chinese Academy of Sciences, present a unique approach, ShortGPT, to analyze layer-wise redundancy in LLMs using Block Influence (BI), measuring hidden state transformations. Their method significantly outperforms previous complex pruning techniques by identifying and removing redundant layers based on BI scores.

Illustration of layer-wise redundancy in LLMs

Illustration of layer-wise redundancy in LLMs

The proposed LLM layer deletion approach begins by quantifying layer redundancy, particularly in Transformer-based architectures. BI metric assesses each layer’s impact on hidden state transformations during inference. Layers with low BI scores, indicating minimal impact, are removed to reduce inference costs without compromising model performance.

In conclusion, the researchers from Baichuan Inc. and the Chinese Information Processing Laboratory Institute of Software, Chinese Academy of Sciences present ShortGPT, a unique LLM pruning approach based on layer redundancy and attention entropy. Results show significant layer-wise redundancy in LLMs, enabling the removal of minimally contributing layers without compromising performance.

Illustration of the ShortGPT architecture

The proposed strategy maintains up to 95% of model performance while reducing parameter count and computational requirements by around 25%, surpassing previous pruning methods. This approach, simple yet effective, suggests depth-based redundancy in LLMs and offers compatibility with other compression techniques for versatile model size reduction.