Revolutionizing Language Accessibility: The Future of Multilingual Large Language Models

The recent release of the European LLM Leaderboard by the OpenGPT-X team marks a significant milestone in the development and evaluation of multilingual language models. This project, supported by TU Dresden and a consortium of ten partners from various sectors, aims to advance language models’ capabilities in handling multiple languages, thereby reducing digital language barriers and enhancing the versatility of AI applications across Europe.

The digital processing of natural language has advanced considerably in recent years, largely due to the proliferation of open-source Large Language Models (LLMs). These models have demonstrated remarkable capabilities in understanding and generating human language, making them indispensable tools in fields such as technology, education, and communication. However, most of these models, and the benchmarks used to evaluate them, have traditionally focused on English, leaving a gap in multilingual support.

Language models can understand and generate human language in various languages

Recognizing this need, the OpenGPT-X project was launched in 2022 under the auspices of the German Federal Ministry for Economic Affairs and Climate Action (BMWK). The project brings together experts from business, science, and media to develop and evaluate multilingual LLMs. The recent publication of the European LLM Leaderboard is a pivotal step towards achieving the project’s goals. The leaderboard compares several state-of-the-art language models, each with approximately 7 billion parameters, across multiple European languages.

The primary aim of the OpenGPT-X consortium is to broaden language accessibility and ensure that AI’s benefits are not limited to English-speaking regions. To this end, the team conducted extensive multilingual training and evaluation, testing the developed models on various tasks, such as logical reasoning, commonsense understanding, multi-task learning, truthfulness, and translation.

Several benchmarks have been translated and employed in the project to assess the performance of multilingual LLMs. ARC and GSM8K cover general education and mathematics: ARC consists of grade-school science questions, and GSM8K of grade-school math word problems. HellaSwag tests whether a model can pick a plausible continuation of a text, and TruthfulQA tests whether its answers are truthful. MMLU spans a wide range of subjects to assess the models’ capabilities across different domains. FLORES-200 assesses machine translation skills. Belebele focuses on reading comprehension, that is, answering questions about a passage in multiple languages.

In conclusion, the European LLM Leaderboard by the OpenGPT-X team addresses the need for broader language accessibility and provides robust evaluation metrics. The project paves the way for more inclusive and versatile AI applications. This progress is particularly crucial for languages traditionally underrepresented in natural language processing.

Expanding the Capabilities of Large Language Models with Grounding and Retrieval-Augmented Generation

Generative AI is revolutionizing the ability to quickly access knowledge, and organizations aiming to improve operations are taking note. According to the report IDC FutureScape: Worldwide Generative Artificial Intelligence 2024 Predictions (IDC, October 2023), “By 2025, two-thirds of businesses will use a combination of gen AI and retrieval augmented generation (RAG) to power domain-specific self-service knowledge discovery, improving decision efficacy by 50%.”

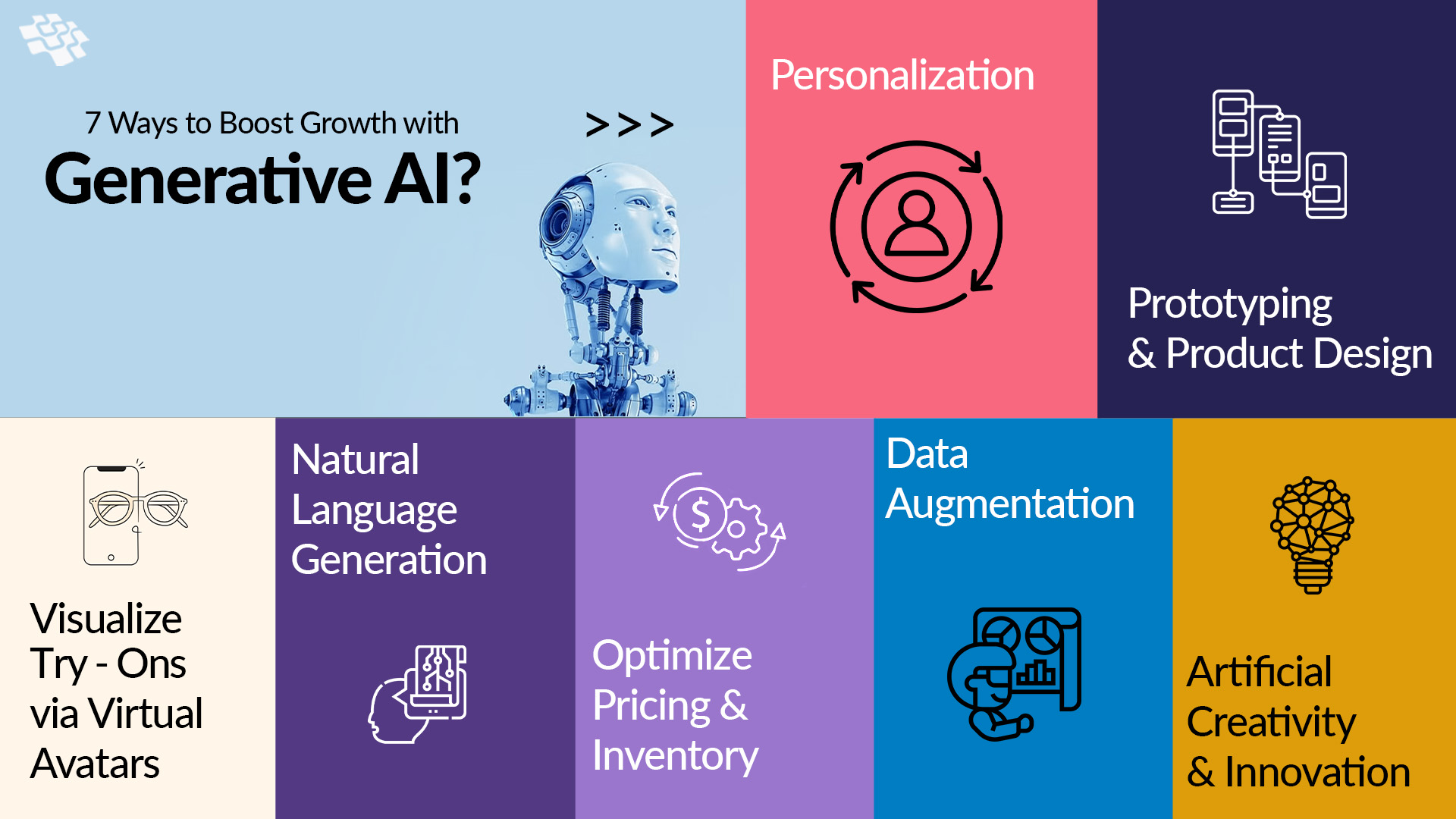

Generative AI is changing the way we access knowledge

To realize this, organizations need gen AI capabilities, such as natural language question-answering systems and enterprise search, to support self-service knowledge discovery for employees, customers, and others. Documents have become the new data source for gen AI use cases.

When a prompt includes relevant document content, large language models (LLMs) can provide more accurate and specific responses. With IBM watsonx.ai, companies are starting to do just that. AI developers and model builders are augmenting foundation models with the help of RAG frameworks to give users access to the documents and data they need for informed, data-driven decisions and insights.

Expanding the capabilities of watsonx.ai Prompt Lab, a new feature known as Chat with Documents allows AI developers to augment an LLM’s knowledge base by grounding it with documents. This feature enables users to ask questions and receive answers based on the document content.

Large language models can be grounded with documents for more accurate responses

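One technique to reduce hallucination is model grounding, where documents are provided to ‘ground’ the conversation and fill in missing knowledge. Because documents are often larger than the model’s context window, a RAG pattern is used to work around this limitation: the documents are split into smaller passages, and only the passages most relevant to the question are retrieved and placed in the prompt.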
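The RAG pattern described above can be illustrated with a minimal sketch. It is not the watsonx.ai implementation: the function names (`chunk_text`, `retrieve`, `build_grounded_prompt`), the chunk sizes, and the bag-of-words cosine similarity are all assumptions chosen to keep the example self-contained. A production system would more likely use learned embeddings and a vector index for the retrieval step.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40, overlap=10):
    """Split a document into overlapping word windows that fit in a prompt."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the document
    return chunks


def bag_of_words(text):
    """Lowercased token counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, chunks, k=2):
    """Return the k chunks most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, bag_of_words(c)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(question, passages):
    """Assemble a prompt that asks the model to answer from the passages only."""
    context = "\n\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the passages below. "
        "If the answer is not in them, say so.\n\n"
        f"{context}\n\nQuestion: {question}\nAnswer:"
    )
```

In use, a long document would be passed through `chunk_text`, the question and resulting chunks through `retrieve`, and the selected passages through `build_grounded_prompt`; the returned string is what would be sent to the model. Only the retrieved passages enter the prompt, which is how the pattern stays within the context window.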

The integration of LangSmith into WordSmith’s operations has enhanced the lifecycle of its product, from prototyping to debugging and evaluation, significantly improving the performance and reliability of WordSmith’s LLM-powered features. LangSmith’s hierarchical tracing feature has been instrumental in this evolution, providing transparent insights into what the LLM receives and produces at each step, allowing engineers to iterate quickly and confidently.
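To make the idea of hierarchical tracing concrete, here is a small sketch of nested spans that record what each step receives and produces. It does not use the LangSmith API; the `Tracer` class, its `span` method, and the step names are hypothetical, and the model call is replaced by a placeholder string.

```python
import time
from contextlib import contextmanager


class Tracer:
    """Hypothetical tracer: records nested steps with inputs and outputs."""

    def __init__(self):
        self.roots = []   # top-level spans
        self._stack = []  # spans that are currently open

    @contextmanager
    def span(self, name, inputs=None):
        node = {"name": name, "inputs": inputs, "outputs": None,
                "children": [], "seconds": None}
        # Attach to the innermost open span, or to the root list if none is open.
        siblings = self._stack[-1]["children"] if self._stack else self.roots
        siblings.append(node)
        self._stack.append(node)
        started = time.perf_counter()
        try:
            yield node
        finally:
            node["seconds"] = time.perf_counter() - started
            self._stack.pop()

    def render(self, nodes=None, depth=0):
        """Return the trace as indented lines, one per span."""
        lines = []
        for node in (self.roots if nodes is None else nodes):
            indent = "  " * depth
            lines.append(f"{indent}{node['name']}: {node['inputs']!r} -> {node['outputs']!r}")
            lines.extend(self.render(node["children"], depth + 1))
        return lines


tracer = Tracer()
with tracer.span("answer_question", inputs="What is the notice period?") as root:
    with tracer.span("retrieve", inputs="notice period") as step:
        step["outputs"] = ["clause 7"]
    with tracer.span("generate", inputs="prompt with clause 7") as step:
        step["outputs"] = "30 days"  # placeholder standing in for a model call
    root["outputs"] = "30 days"

print("\n".join(tracer.render()))
```

Because each span hangs off its parent, an engineer can see both the overall request and the exact input and output of every intermediate step, which is the property the paragraph above attributes to hierarchical tracing.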

This forward-thinking approach could lead to a highly personalized and efficient RAG experience for each customer, setting a new standard in legal AI operations.