Breakthrough in AI Training Speed and Energy Efficiency

London-based spinout Oriole Networks has secured a £10 million investment to revolutionize the way AI is trained. The company aims to build optical networks between GPUs in AI clusters, potentially speeding up training by up to 100 times while drastically reducing energy consumption.

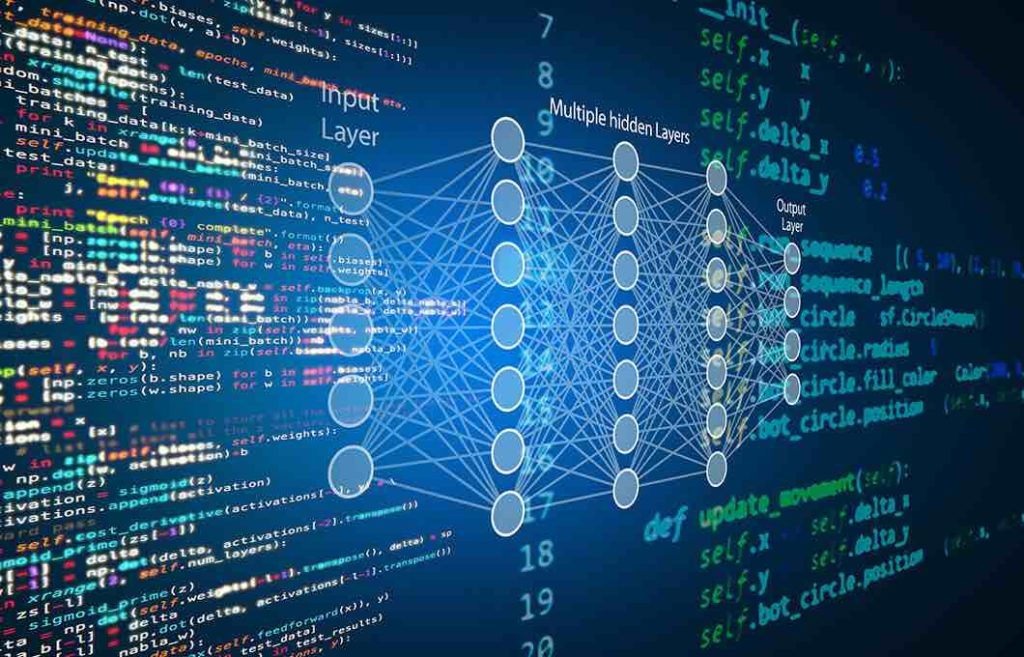

AI Training

Tackling the Energy Challenge

The energy consumed in training AI models is a growing concern as demand for more powerful models rises rapidly. According to a study published in the energy journal Joule, integrating generative AI into every Google search would substantially increase electricity usage. This highlights the urgent need for more energy-efficient solutions in the AI industry.

Oriole’s Innovative Solution

Oriole Networks, led by CEO James Regan and co-founded by Professor George Zervas of University College London, has developed an approach to connecting GPUs using light beams carried over optical fibers. The technology not only speeds up data transfer but also significantly reduces energy consumption compared to traditional Ethernet networks.

Commercialization and Future Prospects

With plans to outsource hardware manufacturing and focus on selling its networking system, Oriole is poised to enter the market with a game-changing product. The company’s approach has the potential to reshape the landscape of AI training, making it more efficient and sustainable in the long run.

Stay tuned for more updates on Oriole Networks and the future of AI training!

By Poppy Sullivan

AI Unveiled: Beyond Boundaries of Code and Consciousness. The latest news and updates on the large language modelling ecosystem.

Poppy is a coffee enthusiast turned tech reporter. When not typing away at her computer, she can be found chasing after her mischievous tabby cat who always manages to get into trouble.