Advancements in Natural Language Processing

The field of natural language processing (NLP) has been revolutionized by the emergence of Large Language Models (LLMs) like GPT and LLaMA. These models have become indispensable tools for a wide range of tasks, leading to a growing demand for proprietary LLMs. However, developing an LLM from scratch is resource-intensive, which puts it out of reach for many organizations. To address this, researchers have proposed knowledge fusion of LLMs: combining the capabilities of existing models into a single, more capable model while reducing development costs.

Introducing FUSECHAT: A Novel Approach to LLM Integration

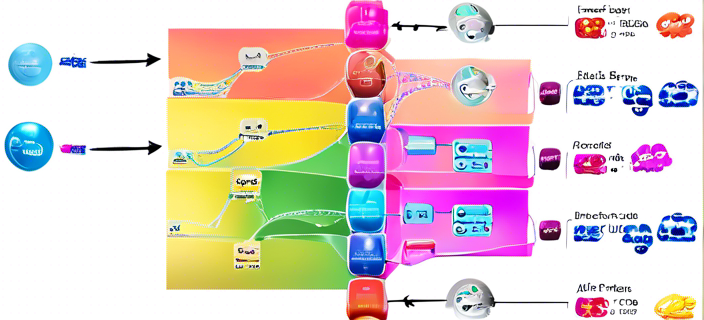

FUSELLM introduced a paradigm for knowledge fusion that uses the probability distribution matrices generated by multiple source LLMs to transfer their collective knowledge into a target LLM through lightweight continual training. Building on this idea, FUSECHAT is designed specifically for fusing chat LLMs with varying architectures and scales. It proceeds in two stages: first, knowledge fusion of the source LLMs, which yields multiple target LLMs of identical structure and size through lightweight fine-tuning; second, merging of these target LLMs within the parameter space to combine their collective knowledge.

Illustration of knowledge fusion in FUSECHAT
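To make the fusion stage more concrete, here is a minimal Python sketch of distribution-level knowledge fusion in the spirit of FUSELLM. It assumes the source and target vocabularies are already aligned and that per-token probability distributions are available. The selection rule (keep the source distribution with the lowest cross-entropy against the gold token), the helper names, and the weighting parameter `lam` are illustrative assumptions, not FUSECHAT's actual implementation.

```python
import math
from typing import List, Sequence

# Assumption: every distribution is over the same, already-aligned vocabulary.
Distribution = List[float]

EPS = 1e-12  # guards against log(0)


def cross_entropy(dist: Sequence[float], gold: int) -> float:
    """Negative log-likelihood of the gold token under one distribution."""
    return -math.log(max(dist[gold], EPS))


def fuse_min_ce(source_dists: Sequence[Distribution], gold: int) -> Distribution:
    """Hypothetical MinCE-style fusion rule: keep the source distribution
    that assigns the lowest cross-entropy to the gold token."""
    return list(min(source_dists, key=lambda d: cross_entropy(d, gold)))


def fusion_loss(target: Sequence[float], fused: Sequence[float]) -> float:
    """Cross-entropy of the target LLM's distribution against the fused one."""
    return -sum(p * math.log(max(q, EPS)) for p, q in zip(fused, target))


def total_loss(
    target: Sequence[float],
    source_dists: Sequence[Distribution],
    gold: int,
    lam: float = 0.9,  # hypothetical weight on the ordinary language-modeling loss
) -> float:
    """Blend the causal LM loss with the fusion loss for a single token."""
    clm = cross_entropy(target, gold)
    fused = fuse_min_ce(source_dists, gold)
    return lam * clm + (1 - lam) * fusion_loss(target, fused)


if __name__ == "__main__":
    sources = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]  # two source LLMs, 3-token vocab
    target = [0.2, 0.6, 0.2]
    # The second source wins because it gives the gold token probability 0.8.
    print(fuse_min_ce(sources, gold=1))  # [0.1, 0.8, 0.1]
    print(total_loss(target, sources, gold=1))
```

In a real training loop these quantities would be computed over tensors for every token in a batch; the per-token, pure-Python form is only meant to expose the logic of "pick a fused teacher distribution, then pull the target model toward it."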

The VARM Merging Method: A Game-Changer in LLM Integration

FUSECHAT introduces the Variation Ratio Merge (VARM) method, which determines the merging weights from the variation ratio of each parameter matrix before and after fine-tuning. Because the weights are computed per parameter matrix, VARM enables fine-grained merging without any additional training, making the integration process more efficient.
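The Python sketch below shows one possible reading of VARM under stated assumptions: the "variation" of a parameter matrix is taken to be the mean squared difference between its values after and before fine-tuning, and each fine-tuned model's weight for that matrix is its variation divided by the sum of variations across all models. The function names and the flat-list representation of matrices are hypothetical; a real implementation would operate on framework tensors.

```python
from typing import Dict, List, Sequence

# A parameter matrix is represented here as a flat list of floats for simplicity.
Params = Dict[str, List[float]]


def variation(tuned: Sequence[float], base: Sequence[float]) -> float:
    """Assumed variation measure: mean squared change of one parameter matrix."""
    return sum((t - b) ** 2 for t, b in zip(tuned, base)) / len(base)


def varm_weights(base: Params, tuned_models: Sequence[Params]) -> Dict[str, List[float]]:
    """Per-matrix merging weights: each model's variation divided by the total."""
    weights = {}
    for name, base_matrix in base.items():
        deltas = [variation(model[name], base_matrix) for model in tuned_models]
        total = sum(deltas)
        if total == 0.0:
            # No model changed this matrix: fall back to a uniform average.
            weights[name] = [1.0 / len(tuned_models)] * len(tuned_models)
        else:
            weights[name] = [d / total for d in deltas]
    return weights


def varm_merge(base: Params, tuned_models: Sequence[Params]) -> Params:
    """Weighted average of the fine-tuned models, computed matrix by matrix."""
    weights = varm_weights(base, tuned_models)
    merged = {}
    for name, base_matrix in base.items():
        merged[name] = [
            sum(w * model[name][i] for w, model in zip(weights[name], tuned_models))
            for i in range(len(base_matrix))
        ]
    return merged


if __name__ == "__main__":
    base = {"layer.weight": [0.0, 0.0]}
    model_a = {"layer.weight": [1.0, 1.0]}  # variation 1.0
    model_b = {"layer.weight": [2.0, 0.0]}  # variation 2.0
    print(varm_weights(base, [model_a, model_b]))
    print(varm_merge(base, [model_a, model_b]))
```

In this toy example, the model whose matrix moved further from its starting point receives twice the weight (2/3 versus 1/3), so the merged matrix is approximately [1.667, 0.333]. Since the weights come from parameter statistics alone, no gradient steps are needed during merging.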

Empirical Evaluation and Performance

Empirical evaluations of FUSECHAT using open-source chat LLMs demonstrate its effectiveness. On MT-Bench, a benchmark that assesses multi-turn dialogue ability, FUSECHAT outperforms the individual source LLMs and fine-tuned baselines across different scales. The VARM merging method in particular showed superior performance compared with the other merging approaches evaluated, underscoring the value of deriving merging weights from variation ratios.

Implications and Future Prospects

The development of FUSECHAT marks a significant advance in integrating multiple LLMs, particularly for chat-based applications. By leveraging knowledge fusion, FUSECHAT offers a practical and efficient way to combine diverse chat LLMs while avoiding the cost of resource-intensive model development. Given its scalability, its flexibility, and the effectiveness of the VARM merging method, FUSECHAT is well positioned to drive innovation in dialogue systems and to meet the growing demand for sophisticated chat-based AI solutions.