Oracle Unleashes HeatWave GenAI: Revolutionizing Generative AI Applications

Oracle has announced the general availability of HeatWave GenAI, which Oracle describes as the industry's first offering with in-database large language models (LLMs) and an automated in-database vector store. It lets customers build generative AI applications without AI expertise, without moving data, and without additional costs.

HeatWave GenAI is now available across all Oracle Cloud regions, Oracle Cloud Infrastructure (OCI) Dedicated Region, and other clouds at no extra cost to HeatWave customers.

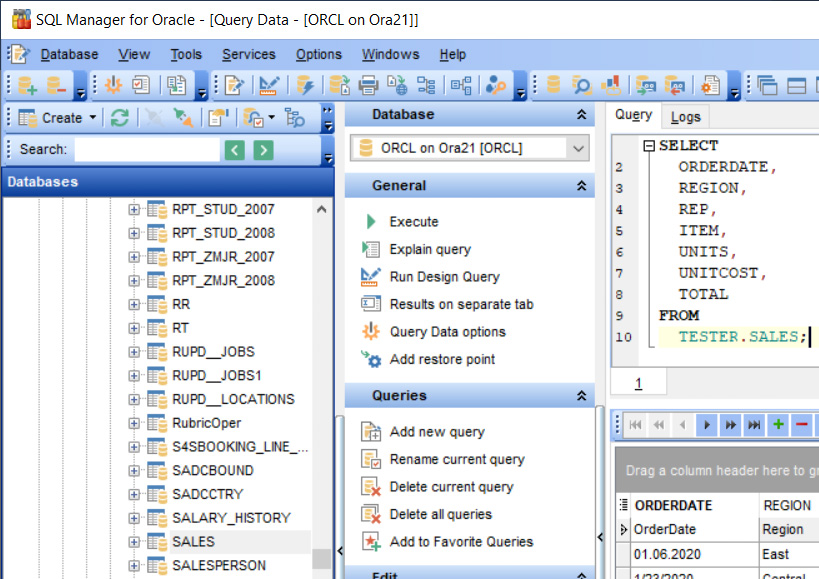

With HeatWave GenAI, users can create a vector store for unstructured enterprise content with a single SQL command, using built-in embedding models. Natural language searches can then be performed in a single step with either in-database or external LLMs, which improves performance and data security while reducing application complexity.
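The single-command vector store described above hides several steps: splitting each document into chunks, embedding every chunk, and storing the chunk text next to its vector. The Python sketch below illustrates those steps generically; it is not HeatWave's implementation or API. The hashed bag-of-words `toy_embed` function is an assumed stand-in for HeatWave's built-in embedding models, and the chunk sizes, the `ChunkRow` layout, and all function names are hypothetical.

```python
# Hypothetical illustration of vector-store creation; not HeatWave's API.
import hashlib
import math
from dataclasses import dataclass


@dataclass
class ChunkRow:
    """One row of a hypothetical vector-store table."""
    doc_id: str
    chunk_id: int
    text: str
    embedding: list[float]


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Hashed bag-of-words vector, L2-normalized.

    Toy stand-in for a real embedding model: it reflects word overlap only,
    not meaning.
    """
    vec = [0.0] * dim
    for word in text.lower().split():
        digest = hashlib.md5(word.encode("utf-8")).digest()
        vec[int.from_bytes(digest[:4], "little") % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reaches the end of the document
    return chunks


def build_vector_store(docs: dict[str, str]) -> list[ChunkRow]:
    """Chunk every document and store each chunk with its embedding."""
    rows = []
    for doc_id, text in docs.items():
        for i, chunk in enumerate(chunk_words(text)):
            rows.append(ChunkRow(doc_id, i, chunk, toy_embed(chunk)))
    return rows
```

Overlapping chunks keep a sentence that straddles a boundary retrievable from either side; the trade-off is some duplicated text in the store.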

Innovative Features of HeatWave GenAI

HeatWave GenAI introduces several innovative features that simplify the development of generative AI applications at a lower cost. These features include:

- In-database LLMs: Enabling customers to search data, generate or summarize content, and perform retrieval-augmented generation (RAG) with HeatWave Vector Store. Integration with the OCI Generative AI service provides access to pre-trained models from leading LLM providers.

- Automated In-database Vector Store: Facilitating the use of generative AI with business documents without moving data to a separate vector database. All steps to create a vector store and vector embeddings are automated, enhancing efficiency and ease of use.

- Scale-out Vector Processing: Delivering fast semantic search results without loss of accuracy, using a new native VECTOR data type and an optimized distance function. This lets users run semantic queries in standard SQL and combine semantic search with other SQL operators for comprehensive data analysis.

- HeatWave Chat: A Visual Studio Code plug-in for MySQL Shell that provides a graphical interface for HeatWave GenAI, accepting questions in natural language or SQL. It maintains conversational context and lets users verify the sources of generated answers.
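Semantic search over a vector column amounts to ranking rows by a distance function, optionally after filtering them with ordinary SQL predicates; RAG then hands the best-matching chunks, tagged with their sources, to an LLM. The Python sketch below mimics that query shape in memory. It assumes cosine distance as the metric, and the row fields (`source`, `year`, `text`, `embedding`) and function names are hypothetical; HeatWave runs this logic in SQL, and nothing here is its API.

```python
# Hypothetical illustration of filtered semantic search plus RAG prompt
# assembly; not HeatWave's API.
import math
from typing import Callable


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity: 0 for identical directions, 2 for opposite ones."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        raise ValueError("a zero vector has no direction")
    return 1.0 - dot / (na * nb)


def semantic_search(
    rows: list[dict],
    query_vec: list[float],
    k: int = 2,
    where: Callable[[dict], bool] = lambda r: True,
) -> list[dict]:
    """Rough analogue of: SELECT ... WHERE <filter> ORDER BY distance LIMIT k."""
    candidates = [r for r in rows if where(r)]  # the WHERE clause
    candidates.sort(key=lambda r: cosine_distance(r["embedding"], query_vec))
    return candidates[:k]  # the LIMIT clause


def build_rag_prompt(question: str, hits: list[dict]) -> str:
    """Prefix the question with retrieved chunks, each tagged with its source."""
    context = "\n".join(f"[{h['source']}] {h['text']}" for h in hits)
    return (
        "Answer using only the context below and cite sources.\n"
        f"{context}\nQuestion: {question}"
    )
```

Tagging each retrieved chunk with its source is what makes answers checkable against the original documents. The sketch scans and sorts every matching row, which is fine for illustration but is not how a scale-out engine would execute the query.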

Benchmarking results show HeatWave GenAI outperforming competing services on both speed and cost.

Performance Benchmarks

In benchmarks, HeatWave GenAI created a vector store for documents in various formats 23 times faster than Knowledge Base for Amazon Bedrock, at a quarter of the cost. The benchmarks also show HeatWave GenAI to be 30 times faster than Snowflake at 25% lower cost, 15 times faster than Databricks at 85% lower cost, and 18 times faster than Google BigQuery at 60% lower cost.

Customer and Analyst Insights

“HeatWave GenAI has reduced application complexity and costs significantly,” said Vijay Sundhar, CEO of SmarterD.

“HeatWave’s support for in-database LLMs and vector stores has enabled new capabilities and improved performance for our customers,” emphasized Safarath Shafi, CEO of EatEasy.

“HeatWave’s integrated approach to generative AI provides cost-effectiveness and performance advantages,” noted Holger Mueller of Constellation Research.

HeatWave Overview

According to Oracle, HeatWave is the only cloud service that offers automated, integrated generative AI and machine learning for transactions and lakehouse-scale analytics. It is available on OCI, Amazon Web Services, Microsoft Azure via Oracle Interconnect for Azure, and in customer data centers with OCI Dedicated Region and Oracle Alloy.

With HeatWave GenAI, these generative AI capabilities are built directly into HeatWave, the MySQL-based cloud service.