Transforming AI Architecture: The Rise of MatMul-Free Language Models

The field of artificial intelligence is on the brink of a remarkable transformation as researchers explore innovative architectures that strip back some of the most resource-intensive components of large language models (LLMs). One such recent breakthrough comes from a collaboration between the University of California, Santa Cruz, Soochow University, and the University of California, Davis, which has introduced a new architecture that forgoes matrix multiplications, a critical yet computationally demanding aspect of traditional Transformer models.

The Challenge of Matrix Multiplication in LLMs

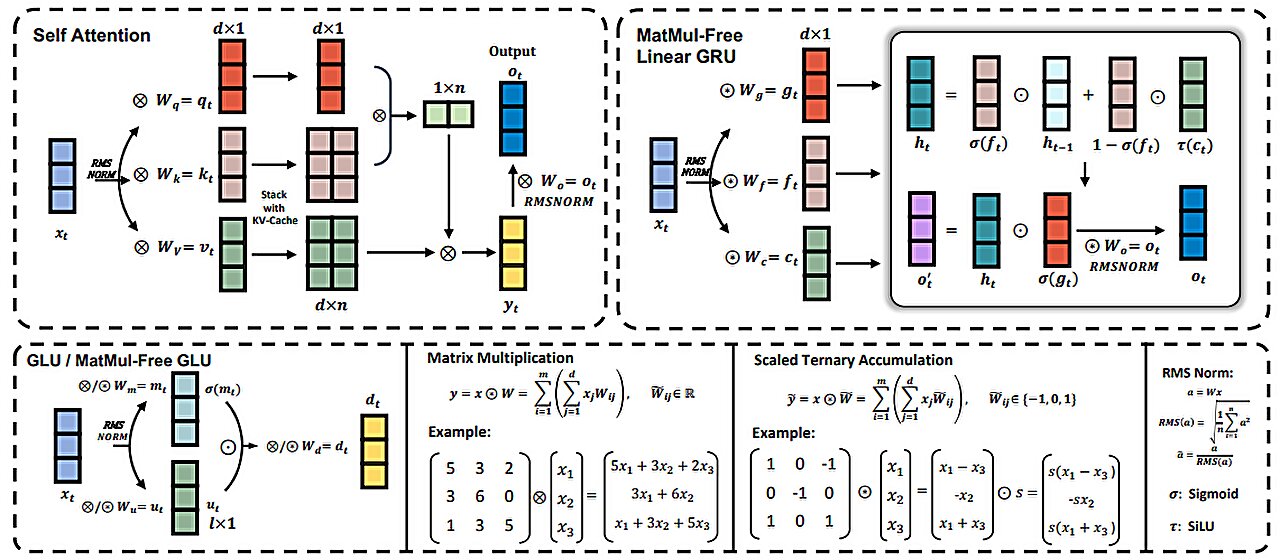

Matrix multiplications (MatMul) are essential operations in the realm of deep learning, serving to combine data with model weights. As LLMs have evolved into larger and more complex systems, the overhead associated with MatMul operations has surged, leading to increased memory usage and significant latency in both training and inference. This burgeoning computational requirement has made high-performance GPUs indispensable, further complicating access to cutting-edge AI technologies. Thus, minimizing the dependency on such expensive hardware has become paramount in the pursuit of broader AI accessibility.

A Novel Solution: MatMul-Free Language Models

The work by researchers from these universities tackles the issue directly. They propose an architecture that eliminates matrix multiplications entirely. In place of conventional 16-bit floating-point weights, the models use ternary weights, each of which takes one of three values: -1, 0, or +1. With weights restricted to those values, multiplying an input by a weight reduces to adding it, subtracting it, or skipping it. According to the researchers, this shift reduces computation while keeping performance comparable to that of state-of-the-art Transformer models.
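To make the idea concrete, here is a minimal sketch of a matrix-vector product in which every weight is -1, 0, or +1, so the inner loop uses only additions and subtractions. The function name `ternary_matvec` and the sample values are illustrative assumptions, not code from the researchers, and a real implementation would run as a fused GPU or FPGA kernel rather than Python loops.

```python
from typing import List


def ternary_matvec(weights: List[List[int]], x: List[float]) -> List[float]:
    """Compute y = W @ x where every entry of W is -1, 0, or +1.

    No multiplication is performed: each weight only decides whether the
    matching input element is added, subtracted, or skipped.
    (Hypothetical helper for illustration.)
    """
    out = []
    for row in weights:
        if len(row) != len(x):
            raise ValueError("row length must match input length")
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi      # +1: add the input
            elif w == -1:
                acc -= xi      # -1: subtract the input
            elif w != 0:
                raise ValueError("weights must be -1, 0, or +1")
            # 0: skip the input entirely
        out.append(acc)
    return out


# Illustrative values only.
W = [[1, 0, -1],
     [-1, 1, 1]]
x = [0.5, 2.0, -1.5]
y = ternary_matvec(W, x)
print(y)
```

The result matches what an ordinary dense product would give for the same `W` and `x`; the difference is that no multiply instruction is needed to get there.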

Advantages of Ternary Weights and Additive Operations

By employing additive operations and BitLinear layers with ternary weights, the newly proposed models exhibit a significant reduction in their memory footprint while delivering competitive performance metrics. The researchers evaluated two variants of their MatMul-free LMs against the advanced Transformer++ architecture utilized by Llama-2, reporting that their new implementation can effectively challenge existing state-of-the-art systems.
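The sketch below illustrates where the weight-memory saving could come from. It assumes an absolute-mean scaling rule for mapping full-precision weights to ternary values, a scheme used in some ternary-weight work but not described in this article, and it assumes a simple 2-bits-per-weight packing. The helper names are hypothetical, and the numbers cover weight storage only, not total training memory.

```python
import math
from typing import List, Tuple


def quantize_ternary(weights: List[float], eps: float = 1e-8) -> Tuple[List[int], float]:
    """Map float weights to {-1, 0, +1} plus one shared scale.

    Assumption: scale by the mean absolute value, then round and clip.
    """
    scale = sum(abs(w) for w in weights) / len(weights)
    quantized = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return quantized, scale


def fp16_bytes(n_weights: int) -> int:
    """Storage for 16-bit floating-point weights."""
    return n_weights * 2


def packed_ternary_bytes(n_weights: int, bits_per_weight: int = 2) -> int:
    """Storage for ternary weights under an assumed 2-bit packing."""
    return math.ceil(n_weights * bits_per_weight / 8)


# Illustrative values only.
q, scale = quantize_ternary([0.9, -0.05, -1.2, 0.3])
print(q, scale)

n = 1_000_000
print(fp16_bytes(n), packed_ternary_bytes(n))
```

Under these assumptions, one million weights shrink from 2,000,000 bytes to 250,000 bytes. A denser encoding could approach the information-theoretic limit of about 1.58 bits per ternary weight.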

Exploring new weight structures in AI architectures.

Optimized Implementations and Expected Outcomes

In parallel with the model development, the research team created optimized implementations for both GPUs and a custom FPGA configuration designed explicitly for MatMul-free language models. Notably, their optimized GPU implementation trained 25.6% faster and used up to 61% less memory than an unoptimized baseline. While these results are promising, the researchers acknowledge the limits of their testing: they have not yet evaluated the architecture’s performance on models with over 100 billion parameters.

They hope this pioneering research will inspire other institutions to invest in lightweight models capable of scaling efficiently, thus broadening the research landscape in LLM development and accessibility.

Embracing the Future: Cognizant’s Healthcare AI Solutions

As the AI landscape continues to evolve, the application of these breakthroughs spans multiple sectors, including healthcare. Recently, Cognizant has unveiled a suite of healthcare LLM solutions built on Google Cloud’s generative AI technologies, showcasing the critical role of large language models in revolutionizing industry practices. These solutions aim to streamline various high-cost administrative workflows, thereby improving operational efficiencies and overall care delivery.

LLM Solutions Tailored for Healthcare

Cognizant’s healthcare solutions leverage Google Cloud’s Vertex AI and Gemini platforms to redesign administrative processes that often burden healthcare organizations. From marketing operations to provider management and contracting, these generative AI-powered systems are poised to optimize crucial workflows, like appeals management and contract lifecycle management.

- Appeals Resolution Assistant: This solution automates the process of data retrieval and analysis, significantly enhancing productivity and accuracy in managing appeals, which has historically been a manual and error-prone task.

- Contract Management Solution: By automating contract review and generation, this tool addresses common inefficiencies that can prolong negotiation cycles and increase the risk of mistakes.

- Marketing Content Assistant: This assistant enhances content generation for payer marketing teams, ensuring that communications remain timely and effective in reaching consumers.

- Health Plan Shopper: Empowering healthcare members, this tool uses individual patient data to streamline plan selection, improving user experience.

The Benefits of GenAI in Healthcare

The integration of these LLM-driven solutions is more than a demonstration of the technology; it signals a shift toward more responsive and unified healthcare experiences. As Amy Waldron, Director of the Healthcare and Life Sciences Industry at Google Cloud, noted, “These tailored solutions reduce administrative burden and enhance access to care.” Such innovations offer a pathway to both increased efficiency and improved satisfaction among patients.

Innovative AI technology applied to healthcare challenges.

Expanding AI Research with TEXTGRAD

Another compelling narrative in the ongoing AI advancement saga involves researchers from Stanford University introducing TEXTGRAD, a framework enabling automatic differentiation via text. This initiative harnesses the power of LLMs to optimize complex AI systems through automatic feedback, minimizing human error and manual adjustments that have traditionally plagued optimization tasks.

Leveraging LLMs for Complex System Optimization

TEXTGRAD transforms conventional AI systems into computation graphs, allowing users to apply LLM reasoning across various tasks without the need for intricate tuning. The versatility of TEXTGRAD was demonstrated in several domains: it improved coding performance on platforms like LeetCode, achieved superior zero-shot accuracy in question-answering tasks, and even designed new drug molecules with enhanced properties.
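The feedback loop described above can be sketched in a few lines. This is not the TEXTGRAD API: every name here (`TextVariable`, `stub_critic`, `stub_editor`, `optimize`) is hypothetical, and the two stub functions stand in for LLM calls so the example runs deterministically without a model.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TextVariable:
    """A piece of text being optimized, plus the critiques it has received."""
    value: str
    feedback: List[str] = field(default_factory=list)  # textual "gradients"


def stub_critic(answer: str) -> str:
    """Hypothetical stand-in for an LLM evaluator; returns '' when satisfied."""
    if "units" not in answer:
        return "state the units"
    return ""


def stub_editor(answer: str, critique: str) -> str:
    """Hypothetical stand-in for an LLM that revises text given a critique."""
    if critique == "state the units":
        return answer + " (units: meters)"
    return answer


def optimize(
    var: TextVariable,
    critic: Callable[[str], str],
    editor: Callable[[str, str], str],
    max_steps: int = 3,
) -> TextVariable:
    """Repeatedly collect textual feedback and apply it to the variable."""
    for _ in range(max_steps):
        critique = critic(var.value)   # analogous to a backward pass
        if not critique:
            break                      # nothing left to improve
        var.feedback.append(critique)
        var.value = editor(var.value, critique)  # analogous to an optimizer step
    return var


result = optimize(TextVariable("The bridge is 120 long"), stub_critic, stub_editor)
print(result.value)
print(result.feedback)
```

In a real system the critic and editor would be LLM calls, and the variable could be a prompt, a code snippet, or a molecule description rather than a single sentence.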

In summary, TEXTGRAD’s flexibility and power illustrate that a new era of AI optimization frameworks is emerging—one that promises to accelerate the development of next-generation AI applications.

Exploring the frontiers of AI optimization.

Conclusion: A Bright Future for AI Development

The confluence of innovations in AI architecture, healthcare applications, and robust optimization frameworks like TEXTGRAD paints an optimistic picture for the future of artificial intelligence. As researchers and industry leaders continue to dismantle barriers to access and performance, it is clear that the landscape of AI is rapidly evolving—propelling us into unprecedented realms of capability and application.

By harnessing the power of LLMs while moving away from traditional, resource-intensive methods, the AI community stands on the threshold of a significant shift, one that promises to redefine accessibility, efficiency, and efficacy in technology across various sectors.