Rapid Progress in AI Research and Development Faces Hurdles

Major Chinese tech companies have announced extensive price cuts for their large language model (LLM) products used for generative artificial intelligence, a move that experts say is tipped to speed up the application of AI models in the domestic market and in research.

Chinese developers continue to make progress in the commercialisation of AI technology.

Chinese developers continue to make progress in the commercialisation of AI technology.

While price wars are not uncommon in the world of LLMs, this comes as Chinese developers continue to make progress in the commercialisation of AI technology in recent months. The latest statistics from China’s Cyberspace Administration show that, as of March this year, a total of 117 generative AI services were registered in China. Currently, the number of LLMs is estimated to exceed 200, of which over 100 are with more than one billion parameters (the variables a model learns during training).

“Although outstanding young scientists in the field of artificial intelligence in top universities have the potential to be world-class players, they suffer from a lack of computing power resources and cannot fully participate in the mainstream research of large models.” - Wang Yanfeng, deputy dean of the School of Artificial Intelligence at Shanghai Jiao Tong University.

Need for Localised Models

While many industry players have adopted localisation strategies, training and providing LLMs customised for Chinese users, this is hampered by a shortage of Chinese text to train the models. With OpenAI’s ChatGPT, the proportion of Chinese-language data is less than 0.01% of data, while English-language data accounts for more than 92.6%. Recent studies have found that limited Chinese data for training means that models developed in the West may be lacking in exposure to Chinese concepts and terminology, limiting their application in fields such as traditional Chinese medicine, for example.

Filling the Data Gap

Lin Yonghua, deputy director and chief engineer of the Beijing Academy of Artificial Intelligence (BAAI), a leading non-profit in AI research and development, said the “bottleneck” around training data is looming large. Government-sponsored entities like BAAI are spearheading efforts to fill the widening gap. On 26 April, the Academy officially released the Chinese Corpora Internet (CCI) 2.0, following its first version made public in November last year. The platform is about 500GB in size and covers 125 million web pages, the result of cleaning, filtering, and processing eight terabytes of raw data, according to the Academy.

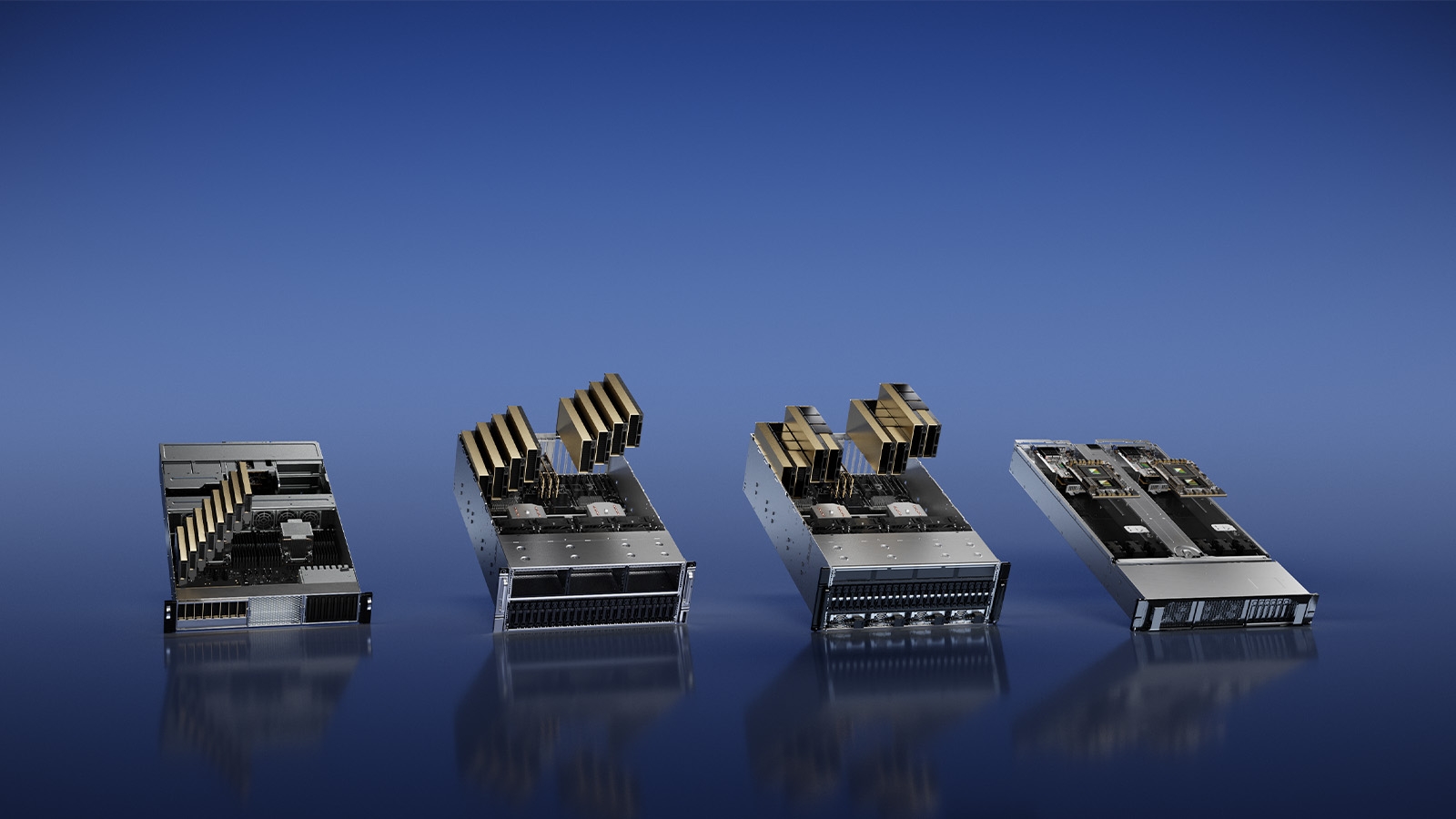

The GPU Bottleneck

Industry discussions also focus on the strong demand for computing power. Lack of computing power inhibits the implementation of LLMs and delays product deployment, they say. According to data from GPU Utils, which tracks the global market for computer graphic processing units (GPUs) powered by high-performance chips, the shortfall in global supply of high-end chips produced by US company NVIDIA that are required to power LLM models has reached 430,000 chips.

A new startup backed by Tsinghua University in Beijing is seeking to fill the widening gap in computing power. In March, Wuwen Xinqiong, an AI infrastructure company, released its Infini-AI large model development and service platform, which uses GPU inference and acceleration technology to provide a complete tool chain for LLM development, training, operation, and application.

Wuwen Xinqiong occupies the middle ground between LLMs and chips.

Wuwen Xinqiong occupies the middle ground between LLMs and chips.

However, Xia said US moves to curb China’s AI sector meant anxiety over computing power may be here to stay. In January, US Commerce Secretary Gina Raimondo said Washington had begun the process of asking American cloud companies to report foreign entities who use their computing power to train LLMs. Although no countries were named, it is widely believed to be targeting China.

Nonetheless, LLMs are predicted to enter a stage of rapid development in China, fuelled by strong backing from the government at all levels, a surge in user demand, and the push of tech companies and research institutions.

More and more innovative AI application scenarios and product forms are expected to be seen in 2024.

More and more innovative AI application scenarios and product forms are expected to be seen in 2024.