RaiderChip Revolutionizes Edge AI with its Generative AI Hardware Accelerator for LLM Models on Low-Cost FPGAs

The advent of large language models (LLMs) has transformed the AI landscape, enabling unprecedented capabilities in natural language processing and generation. However, the computational requirements for these models have posed significant challenges for deployment on edge devices. RaiderChip, a pioneering startup, has addressed this limitation with its innovative Generative AI hardware accelerator for LLM models on low-cost FPGAs.

“Our design’s efficiency edge allows customers to run unquantized LLM models at full interactive speed, on limited memory bandwidths where competitors are more than 20% slower, especially faster than CPU-based inference solutions.” - RaiderChip’s team

The GenAI v1 IP core, RaiderChip’s flagship solution, uses 32-bit floating-point arithmetic to preserve the full intelligence and reasoning capabilities of the original, unquantized LLMs. It pairs this full precision with real-time LLM inference speeds, making it an attractive solution for edge AI applications.
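To see why memory bandwidth matters so much for unquantized models, a rough back-of-the-envelope estimate can help. The sketch below assumes the common simplification that generating each token requires reading every model weight from memory once, so bandwidth divided by model size gives an upper bound on tokens per second. The function names, the 1-billion-parameter model, and the 20 GB/s bandwidth are illustrative assumptions, not RaiderChip figures.

```python
def weight_bytes(num_params: int, bytes_per_param: int = 4) -> int:
    """Memory needed for the weights; 4 bytes per parameter means 32-bit floats."""
    return num_params * bytes_per_param


def max_tokens_per_second(num_params: int,
                          bandwidth_bytes_per_s: float,
                          bytes_per_param: int = 4) -> float:
    """Upper bound on generation speed, assuming every weight is read
    from memory once per generated token (a simplification)."""
    return bandwidth_bytes_per_s / weight_bytes(num_params, bytes_per_param)


if __name__ == "__main__":
    # Hypothetical example values, chosen only for illustration.
    params = 1_000_000_000   # 1B-parameter model
    bandwidth = 20e9         # 20 GB/s memory bandwidth
    size_gb = weight_bytes(params) / 1e9
    rate = max_tokens_per_second(params, bandwidth)
    print(f"FP32 weights: {size_gb:.1f} GB, at most {rate:.1f} tokens/s")
```

Under these assumptions, storing weights in 32-bit floating point takes four times the memory traffic of an 8-bit quantized model, which is why efficient use of the available bandwidth is central to running full-precision models at interactive speed.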

Plug-and-Play IP Cores for Seamless Integration

RaiderChip’s IP cores are designed to be target-agnostic, so they can be implemented on devices from various FPGA vendors. The cores are plug-and-play: integration requires only a minimal number of industry-standard AXI interfaces. As a result, the GenAI v1 behaves as a simple peripheral, fully controllable from the customer’s software.

FPGAs: The Ideal Platform for Local AI Inference

The introduction of FPGAs for Generative AI acceleration expands the available options for local AI inference of LLM models. The reprogrammable nature of FPGAs makes them ideal in the context of explosive innovation in the AI field, where new models and algorithmic upgrades appear on a weekly basis. FPGAs allow for field updates of already deployed systems, ensuring that edge devices can keep pace with the rapid evolution of AI capabilities.

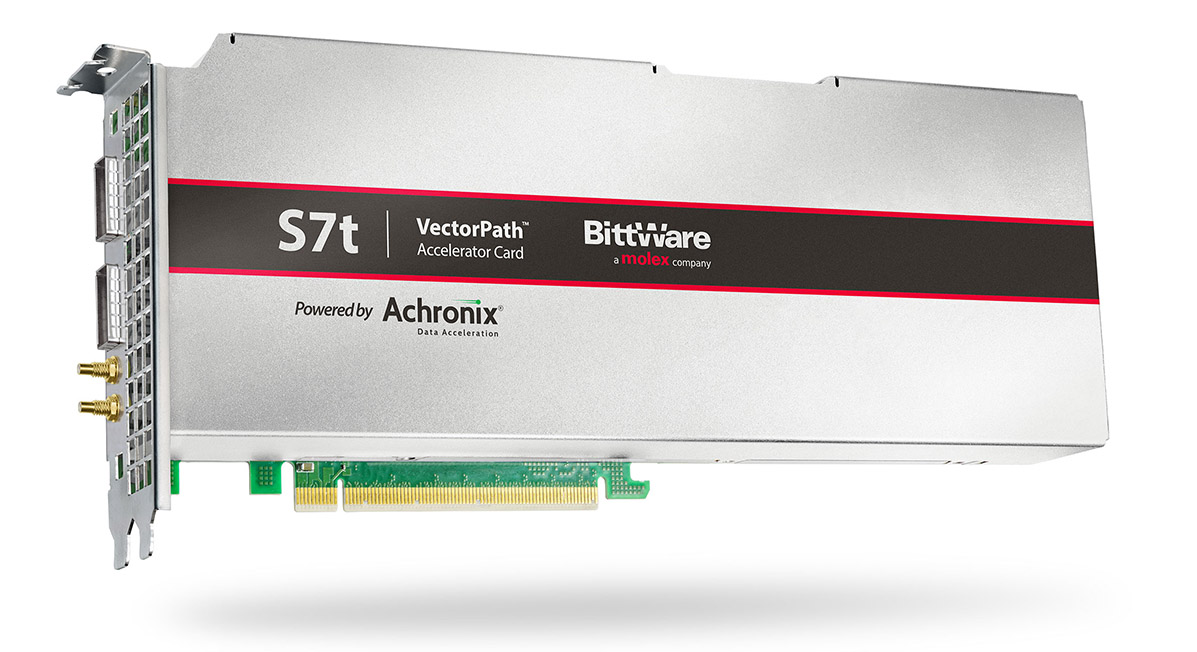

FPGA-based AI acceleration


RaiderChip’s innovative solution has opened up new possibilities for edge AI applications, enabling the deployment of LLM models on low-cost FPGAs. As the AI landscape continues to evolve, RaiderChip’s GenAI v1 IP core is poised to play a pivotal role in shaping the future of edge AI.

RaiderChip GenAI v1

More information on RaiderChip’s innovative solutions can be found at RaiderChip.