NVIDIA Launches Nemotron-4 340B: A Game Changer in Synthetic Data Generation for LLMs

NVIDIA has announced Nemotron-4 340B, an open-source AI model unveiled on June 14, 2024. The model is designed to address the scarcity of high-quality training data needed to build advanced AI systems. As that demand grows, Nemotron-4 340B may prove pivotal for generating synthetic data to train large language models (LLMs), and it can also be used in commercial applications.

The new Nemotron-4 340B model by NVIDIA promises to transform synthetic data generation.

Unpacking the Nemotron-4 340B Model

NVIDIA’s latest offering consists of three models: Base, Instruct, and Reward. Together they form a synthetic data generation pipeline optimized for the NVIDIA NeMo open-source framework for model training and the NVIDIA TensorRT-LLM library, which enables high-speed inference. Trained on 9 trillion tokens, with data covering more than 50 natural languages and more than 40 programming languages, Nemotron-4 340B is positioned as a frontrunner in its category against competitors such as Meta’s Llama3-70B and Anthropic’s Claude 3 Sonnet.

Performance and Features

According to reports, Nemotron-4 340B is positioned to rival the capabilities of GPT-4, underscoring NVIDIA’s commitment to staying at the forefront of AI development. The pipeline produces diverse and realistic synthetic data in two stages: the Instruct model generates candidate responses, and the Reward model then evaluates them. The Reward model grades each response on five attributes: helpfulness, correctness, coherence, complexity, and verbosity. Low-scoring responses can be filtered out and the process repeated, so quality improves over successive iterations.
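The generate-then-score loop described above can be sketched in a few lines of Python. This is a minimal illustration, not NVIDIA's implementation: `generate_and_filter`, `toy_generate`, and `toy_score` are hypothetical names, the two toy functions are stand-ins for calls to the Instruct and Reward models, and the 0 to 4 score range and the helpfulness threshold are assumptions. The attribute names follow NVIDIA's published labels for the Reward model (helpfulness, correctness, coherence, complexity, verbosity).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Attribute labels the Reward model is described as scoring.
ATTRIBUTES = ("helpfulness", "correctness", "coherence", "complexity", "verbosity")

Scores = Dict[str, float]


@dataclass
class Sample:
    prompt: str
    response: str
    scores: Scores


def generate_and_filter(
    prompts: List[str],
    generate: Callable[[str], List[str]],
    score: Callable[[str, str], Scores],
    min_helpfulness: float = 3.0,  # assumed threshold on an assumed 0-4 scale
) -> List[Sample]:
    """For each prompt, keep the most helpful candidate if it clears the threshold."""
    kept: List[Sample] = []
    for prompt in prompts:
        candidates = [Sample(prompt, r, score(prompt, r)) for r in generate(prompt)]
        if not candidates:
            continue
        best = max(candidates, key=lambda s: s.scores["helpfulness"])
        if best.scores["helpfulness"] >= min_helpfulness:
            kept.append(best)
    return kept


# Hypothetical stand-in for the Instruct model: returns two candidate responses.
def toy_generate(prompt: str) -> List[str]:
    return [
        f"Short answer to: {prompt}",
        f"A longer, more detailed answer to: {prompt}",
    ]


# Hypothetical stand-in for the Reward model: longer responses score higher.
def toy_score(prompt: str, response: str) -> Scores:
    base = min(4.0, len(response) / 15.0)
    return {name: base for name in ATTRIBUTES}


if __name__ == "__main__":
    for sample in generate_and_filter(["What is synthetic data?"], toy_generate, toy_score):
        print(sample.response, round(sample.scores["helpfulness"], 2))
```

The sketch runs a single pass and filters on one attribute only. An iterative setup would feed the kept samples back into training or prompting and repeat, and a real filter would likely combine several of the five scores.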

“NVIDIA has once again solidified its position as the undisputed leader in AI innovation with the release of Nemotron-4 340B, which revolutionizes the generation of synthetic data to train LLMs.” - A statement from industry experts.

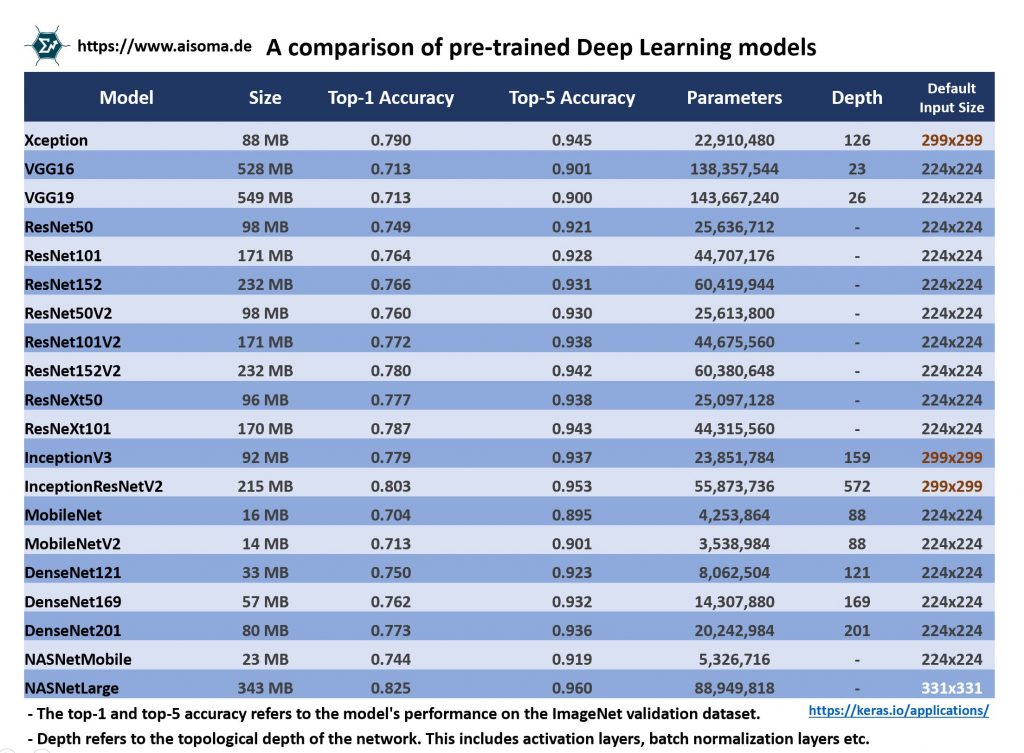

Comparative capabilities of leading AI models demonstrate Nemotron-4’s strength.

Availability and User Feedback

For developers and researchers, Nemotron-4 340B is already available on Hugging Face and is set to launch on NVIDIA’s official website soon. Early adopters have reported positive feedback through platforms such as LMSYS Org’s Chatbot Arena, praising the model’s strong performance and the breadth of its domain knowledge.

Implications for the Future of LLMs

The introduction of Nemotron-4 340B has significant implications for the future of LLM training and development. As organizations strive to build more capable and versatile AI systems, the demand for synthetic data generation methodologies that are both efficient and high-quality will only increase. The advantage of open-source models such as Nemotron-4 lies in their accessibility, allowing broader adoption and collaboration across the AI community.

The landscape of AI development is shifting with open-source innovations like Nemotron-4.

Related Developments in AI

NVIDIA’s announcement comes amid a rapidly evolving AI landscape, with notable developments from several players in the field. For instance, Meta has released ‘Code Llama’, a coding-assistance model available for commercial use, while Bloomberg’s ‘BloombergGPT’ is intended to help financial analysts generate content more efficiently. Furthermore, recent news reveals that Anthropic’s ‘Claude 3 Opus’ has outperformed GPT-4 in some evaluations, marking a significant competitive shift.

With AI capabilities advancing quickly, NVIDIA’s Nemotron-4 340B stands out as a model that could change how synthetic data is used to train large language models. Looking ahead, collaboration between AI researchers and developers will be crucial to unlocking the full potential of such innovations.

Conclusion

As synthetic data generation becomes an ever more critical component of AI training, the unveiling of NVIDIA’s Nemotron-4 340B is both timely and significant. By not only addressing the current limitations in high-quality data but also setting new performance benchmarks, NVIDIA continues to pave the way for future advancements in the field of AI.