NVIDIA Sets New Records in Generative AI with MLPerf Training v4.0

NVIDIA has set new performance records in MLPerf Training v4.0, extending its lead in AI training benchmarks, particularly on large language model (LLM) and other generative AI workloads.

MLPerf Training v4.0: The Industry-Standard Benchmark

MLPerf Training, developed by the MLCommons consortium, is the industry-standard benchmark for evaluating end-to-end AI training performance. The latest version, v4.0, introduces two new tests to reflect popular industry workloads. The first test measures the fine-tuning speed of Llama 2 70B using the low-rank adaptation (LoRA) technique. The second test focuses on graph neural network (GNN) training, based on an implementation of the relational graph attention network (RGAT).

NVIDIA’s Record-Breaking Performance

In the latest MLPerf Training round, NVIDIA submitted results using the full stack of its hardware and software. Its optimized software stack, combined with Hopper GPUs, fourth-generation NVLink interconnect, and NVIDIA Quantum-2 InfiniBand networking, enabled NVIDIA to break previous records.

For instance, NVIDIA cut its GPT-3 175B training time from 10.9 minutes on 3,584 H100 GPUs to just 3.4 minutes on 11,616 H100 GPUs. About 3.2 times as many GPUs delivered about a 3.2x speedup, which is near-linear performance scaling.
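The near-linear scaling claim for GPT-3 175B can be checked with simple arithmetic: divide the speedup by the increase in GPU count. The sketch below is illustrative only; the function name `scaling_efficiency` is hypothetical, and the calculation assumes the two runs are directly comparable, ignoring any software changes between them.

```python
def scaling_efficiency(time_base_min: float, gpus_base: int,
                       time_new_min: float, gpus_new: int) -> tuple[float, float, float]:
    """Compare two training runs of the same benchmark (hypothetical helper).

    Returns (speedup, gpu_ratio, efficiency), where efficiency = speedup / gpu_ratio.
    An efficiency of 1.0 would mean perfectly linear scaling.
    """
    speedup = time_base_min / time_new_min  # how many times faster the new run is
    gpu_ratio = gpus_new / gpus_base        # how many times more GPUs it used
    return speedup, gpu_ratio, speedup / gpu_ratio


if __name__ == "__main__":
    # GPT-3 175B figures quoted in the article.
    s, g, e = scaling_efficiency(10.9, 3584, 3.4, 11616)
    print(f"speedup {s:.2f}x, GPUs {g:.2f}x, efficiency {e:.1%}")
```

With the article's figures this prints a 3.21x speedup for 3.24x the GPUs, an efficiency of roughly 98.9%, which is what "near-linear" means here.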

Generative AI and LLM Fine-Tuning

NVIDIA also set new records in LLM fine-tuning on Meta's Llama 2 70B model. Using LoRA, a single DGX H100 system with eight H100 GPUs completed the fine-tuning benchmark in just over 28 minutes, and the NVIDIA H200 Tensor Core GPU reduced this time further to 24.7 minutes.
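LoRA keeps a pretrained weight matrix W frozen and trains only two small matrices, A (r x k) and B (d x r), so a layer computes h = W x + (alpha / r) * B (A x). The pure-Python sketch below illustrates that standard formulation and the resulting reduction in trainable parameters. It is not the MLPerf reference implementation; the function names are hypothetical, the 8192 x 8192 projection is an illustrative size matching Llama 2 70B's hidden dimension, and the rank r = 16 is an assumed value.

```python
from typing import List

Matrix = List[List[float]]  # list of rows
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector,
                 alpha: float, r: int) -> Vector:
    """Hypothetical LoRA layer: h = W x + (alpha / r) * B (A x).

    W (d x k) stays frozen; only A (r x k) and B (d x r) would be trained.
    """
    base = matvec(w, x)              # frozen pretrained path
    delta = matvec(b, matvec(a, x))  # low-rank update, rank r
    scale = alpha / r
    return [h + scale * dh for h, dh in zip(base, delta)]


def trainable_params(d: int, k: int, r: int) -> tuple[int, int]:
    """Return (full fine-tuning params, LoRA params) for one d x k weight."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    # B is commonly initialized to zeros, so the adapted layer starts equal to W x.
    w = [[1.0, 2.0], [3.0, 4.0]]
    print(lora_forward(w, [[0.5, 0.5]], [[0.0], [0.0]], [1.0, 1.0], alpha=1.0, r=1))

    full, lora = trainable_params(8192, 8192, 16)  # assumed sizes, see lead-in
    print(f"full: {full:,}  LoRA: {lora:,}  ({lora / full:.2%} of full)")
```

Under these assumptions, LoRA trains 262,144 parameters for the projection instead of 67,108,864, about 0.39%, which is why LoRA fine-tuning is far cheaper than updating every weight.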

Advancements in Visual Generative AI

MLPerf Training v4.0 also includes a benchmark for text-to-image generative AI based on Stable Diffusion v2. NVIDIA’s submissions delivered up to 80% more performance at the same scales through extensive software enhancements.

Graph Neural Network Training

NVIDIA set new records in GNN training as well. It submitted results using 8, 64, and 512 H100 GPUs, and the largest configuration, 512 GPUs, set a record time of just 1.1 minutes.

Key Takeaways

NVIDIA continues to lead in AI training performance, delivering strong results and efficiency across the full range of AI workloads in the benchmark. Ongoing optimization of its software stack yields more performance per GPU over time, which lowers training costs and makes it practical to train more demanding models.

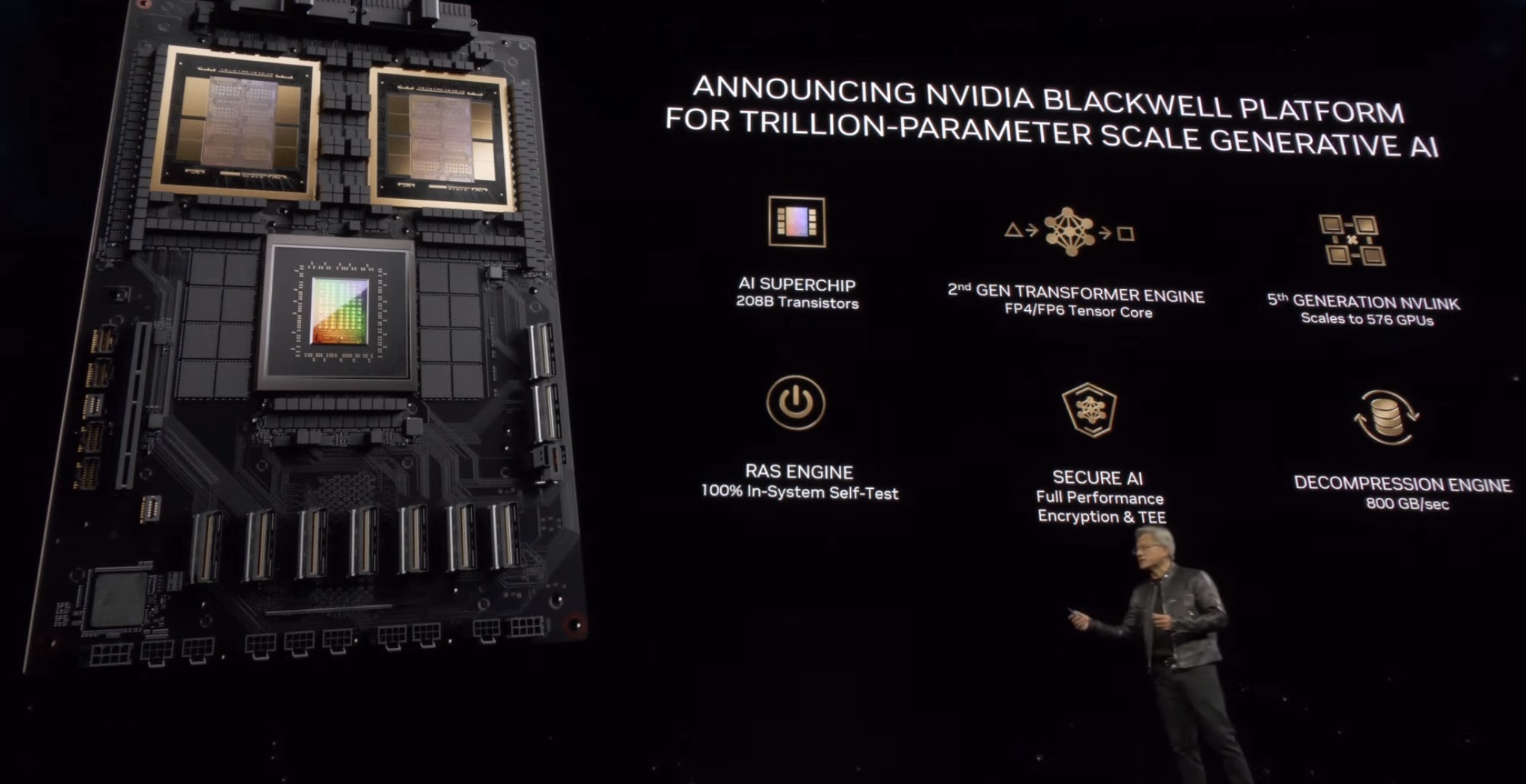

NVIDIA Blackwell Platform

Looking ahead, the NVIDIA Blackwell platform, announced at GTC 2024, promises to democratize trillion-parameter AI, delivering up to 30x faster real-time trillion-parameter inference and up to 4x faster trillion-parameter training compared to NVIDIA Hopper GPUs.