NIST Launches Ambitious Effort to Assess LLM Risks

The National Institute of Standards and Technology (NIST) has announced an extensive effort to test large language models (LLMs) to improve understanding of artificial intelligence’s capabilities and impacts. The Assessing Risks and Impacts of AI (ARIA) program aims to assess the societal risks and impacts of artificial intelligence systems, including ascertaining what happens when people interact with AI regularly in realistic settings.

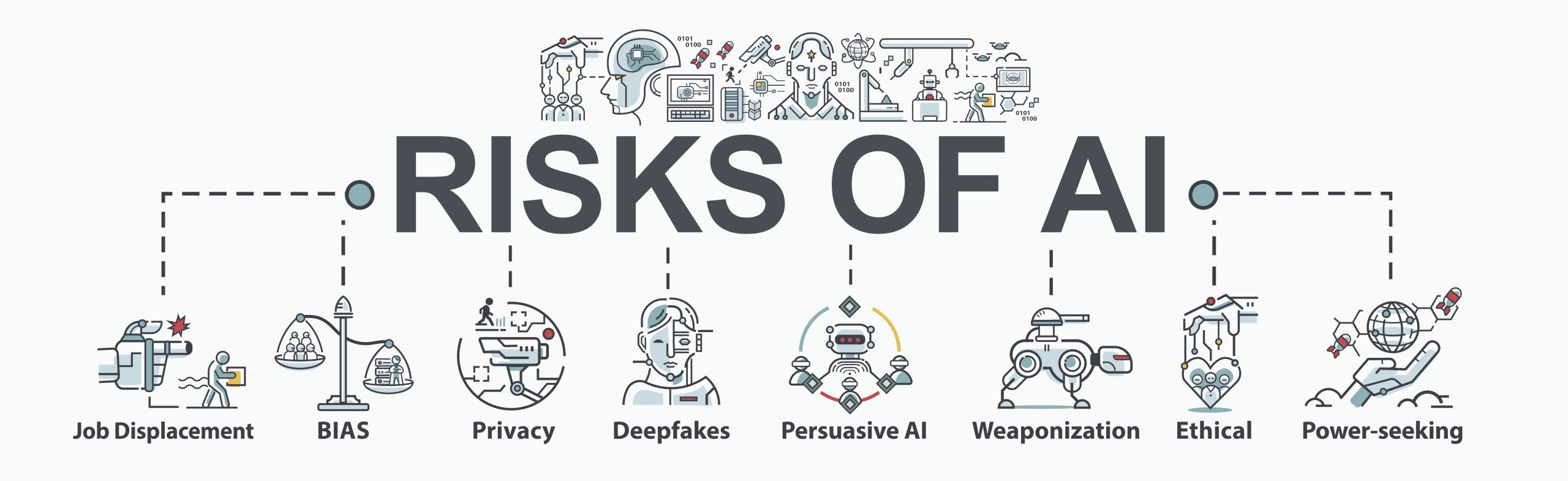

Caption: NIST’s ARIA program focuses on assessing the societal risks and impacts of AI systems.

The effort will include a testing, evaluation, validation, and verification (TEVV) program. It will initially explore three LLM capabilities: controlled access to privileged information, personalized content for different populations, and synthesized factual content.

“NIST’s new ARIA program focuses on assessing the societal risks and impacts of AI systems in order to offer guidance to the broader industry. Guidance, over regulation, is a great approach to managing the safety of a new technology,” said Michiel Prins, co-founder of HackerOne.

Industry experts have welcomed NIST's move, noting that its end goal is to deliver guidance rather than regulation. Prins added that NIST's efforts are not significantly different from what many in the industry are already doing.

Caption: AI security is a critical concern for organizations.

The Biden administration is trying to keep pace with rapidly evolving technology, something many administrations have struggled with, arguably without success. Brian Levine, a managing director at Ernst & Young, said he sees some current efforts, especially around generative AI, potentially erring in the opposite direction, with US and global regulators digging in too early while the technology is still very much in flux.

“In security, a workforce needs to be trained on security best practices, but that doesn’t negate the value of anti-phishing software. The same logic applies to AI safety and security: These issues are big and need to be addressed from a lot of different angles,” said Prins.

Crystal Morin, a cybersecurity strategist at Sysdig and a former analyst at Booz Allen Hamilton, described the NIST effort as a delicate and challenging undertaking.

“AI security is not a one-size-fits-all endeavor. What is considered a societal risk will vary depending on the region, sector, and size of a given business,” Morin said.

The NIST announcement also quoted government officials who stressed the importance of understanding the risks and benefits of LLMs.

“In order to fully understand the impacts AI is having and will have on our society, we need to test how AI functions in realistic scenarios — and that’s exactly what we’re doing with this program,” said US Commerce Secretary Gina Raimondo.

Caption: Understanding AI risks is crucial for organizations.

Reva Schwartz, ARIA program lead at NIST's Information Technology Laboratory, stressed the importance of testing AI beyond controlled laboratory settings by accounting for real-world factors.

“Measuring impacts is about more than how well a model functions in a laboratory setting. ARIA will consider AI beyond the model and assess systems in context, including what happens when people interact with AI technology in realistic settings under regular use. This gives a broader, more holistic view of the net effects of these technologies,” Schwartz said.