Unraveling the Complex Threads of AI Vulnerabilities and Advancements

In the burgeoning field of artificial intelligence, generative AI continues to captivate attention, pushing the boundaries of what is possible while simultaneously revealing critical vulnerabilities within its framework. A recent exploration into the realm of AI security has spotlighted a novel technique, dubbed MathPrompt, that threatens the integrity of existing safeguards designed to prevent malicious exploitation of these systems.

Understanding MathPrompt: The New Cybersecurity Threat

Cybersecurity researchers have unveiled a disturbing loophole that allows threat actors to bypass security controls by translating malicious prompts into mathematical equations. This methodology offers a fresh perspective on vulnerabilities within AI models, exposing significant weaknesses in their defense mechanisms.

Joseph Steinberg, a prominent figure in AI and cybersecurity, likens the technique to using “weird symbols” to generate malformed URLs. The analogy shows both how much understanding it takes to exploit these systems and why protective measures must keep evolving. Steinberg also stresses basic cybersecurity practice, urging Chief Information Security Officers (CISOs) to ensure that adequate policies are in place to mitigate potential breaches.

“You need to have proper policies and procedures in place to make sure staff aren’t using it in a way that creates a problem,” states Steinberg, pinpointing the human element involved in cybersecurity.

The dangers lurking within AI systems are becoming increasingly sophisticated.

Exploring the Impact of MathPrompt

The MathPrompt attack, as detailed in a paper from a consortium of researchers based in Texas, Florida, and Mexico, achieved an average success rate of 73.6% in bypassing safety mechanisms across 13 leading large language models. This alarming statistic highlights a truth long recognized by experts: balancing creativity and safety in the AI space requires a constantly evolving set of countermeasures.

As AI systems grow more adept at handling complex mathematical problems, that same capability becomes an attack surface: a model that can interpret a harmful request encoded as mathematics can also act on it. This has serious implications for the spread of misinformation and harmful content.

ChatGPT: A Pioneer in the AI Revolution

While the cybersecurity landscape grows ever more treacherous, the realm of AI continues to evolve at an equally rapid pace. ChatGPT, a notable AI chatbot developed by OpenAI, stands as a testament to this progression. Since its release in November 2022, ChatGPT has garnered more than 100 million users, redefining how we interact with technology and information.

Originally built on OpenAI's GPT-3.5 model and later upgraded with GPT-4, ChatGPT uses a large neural network trained on diverse data and exemplifies advanced natural language processing. Unlike traditional voice assistants, it generates novel responses rather than regurgitating pre-programmed answers. This ability encourages users to engage in a dynamic dialogue with machines, further blurring the line between human and artificial interaction.

Use and Application of ChatGPT

Using ChatGPT is straightforward. Users can either create a free account or engage anonymously, exploring a wide array of queries. Not only does this accessibility invite experimentation and creativity, but it also solidifies ChatGPT’s position as a versatile tool for various applications, from casual inquiries to complex brainstorming sessions.

The innovative interaction provided by ChatGPT allows users to explore thought-provoking dialogue and creativity.

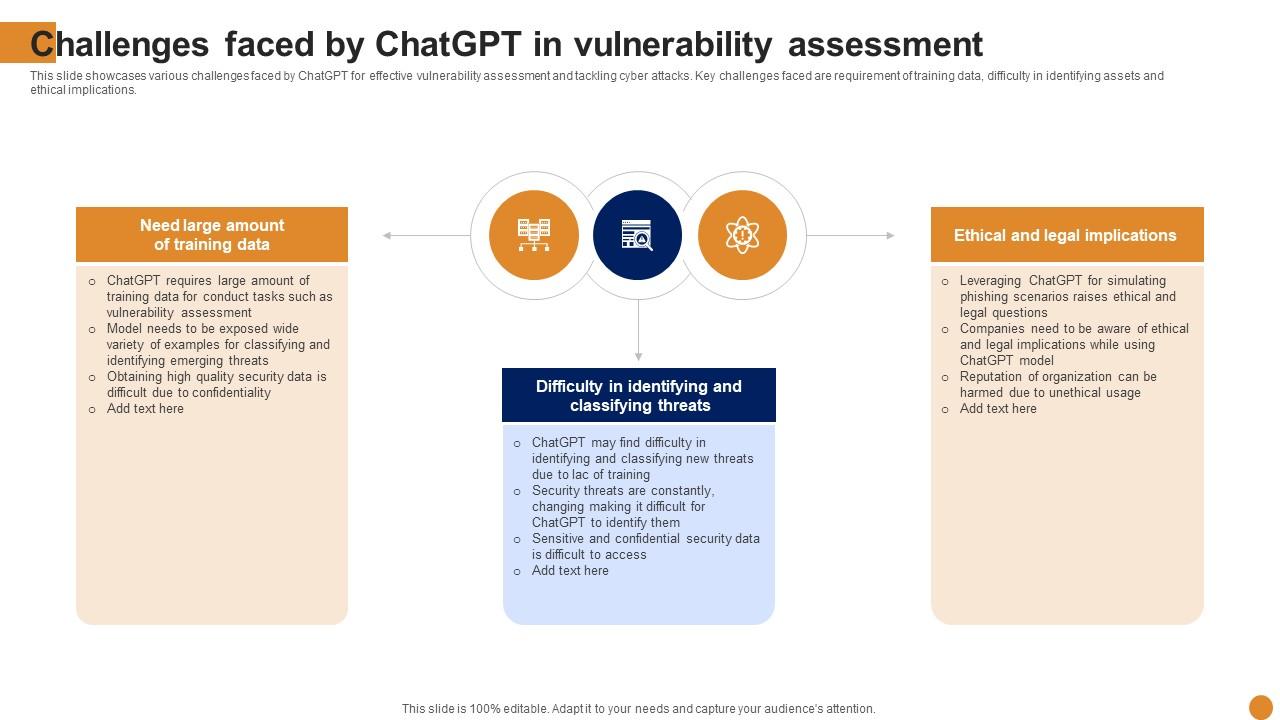

Controversies and Ethical Challenges

However, as with any groundbreaking technology, ChatGPT has faced scrutiny over its reliability and ethical implications. Questions about job displacement, the generation of misinformation, and the moral weight of AI outputs have ignited heated discussions. Addressing these dilemmas requires a concerted effort by both the tech community and regulatory bodies to define the ethical parameters that shape the future of AI.

Broadcom’s Sian2 Chip: Powering AI Networks

Elsewhere in the AI ecosystem, Broadcom has recently introduced its Sian2 chip, a digital signal processor (DSP) designed to enhance the optical networks powering AI clusters. The new chip doubles the bandwidth of its predecessor and improves data reliability and efficiency, both essential for seamless data transport.

Large AI workloads typically run on clusters of interconnected servers, each hosting a fragment of a vast language model. As AI continues to advance, maintaining a robust infrastructure to move data between those servers becomes paramount. The Sian2 chip not only optimizes data transmission but also incorporates technologies to mitigate the errors that often plague high-speed optical networks.

Implications for Data Center Operators

Broadcom's focus on fiber optic technology targets AI clusters in which large datasets frequently travel between servers. Optical connections provide faster transfer speeds, and the new Sian2 chip improves the reliability of these data movements across networks. Because each link carries more data, fewer transceivers are required, which lowers power demands and procurement costs and improves operational efficiency in data centers.
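The transceiver saving can be illustrated with some back-of-the-envelope arithmetic. The sketch below is only an illustration under stated assumptions: the 200G per-lane rate comes from the Broadcom quote in this article, while the 100G predecessor rate, the eight lanes per transceiver, and the 51.2 Tb/s aggregate bandwidth are hypothetical figures chosen to keep the numbers round. The function name `transceivers_needed` is likewise invented for this example.

```python
import math


def transceivers_needed(total_gbps: float, lanes_per_module: int, gbps_per_lane: float) -> int:
    """Return how many optical transceivers are needed to carry total_gbps."""
    per_module_gbps = lanes_per_module * gbps_per_lane
    # Round up: a partially used transceiver still has to be installed.
    return math.ceil(total_gbps / per_module_gbps)


# Hypothetical figures, not taken from Broadcom documentation.
TOTAL_GBPS = 51_200        # assumed aggregate bandwidth to carry (51.2 Tb/s)
LANES_PER_MODULE = 8       # assumed lanes per transceiver

older = transceivers_needed(TOTAL_GBPS, LANES_PER_MODULE, 100)  # assumed 100G/lane predecessor
newer = transceivers_needed(TOTAL_GBPS, LANES_PER_MODULE, 200)  # 200G/lane, per the quote

print(f"100G/lane: {older} transceivers")
print(f"200G/lane: {newer} transceivers")
```

Under these assumptions, doubling the per-lane rate halves the transceiver count for the same aggregate bandwidth (64 versus 32). Real deployments will differ depending on topology, redundancy, and the modules actually used.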

Broadcom’s development of the Sian2 chip represents foundational work for the next generation of AI infrastructure, as Vijay Janapaty, the vice president of Broadcom’s physical layer products division, indicates:

“200G/lane DSP is foundational to high-speed optical links for next generation scale-up and scale-out networks in the AI infrastructure.”

Broadcom’s advancements are integral to future-proofing AI network capabilities.

The Road Ahead: A Dual Challenge

As AI technology advances, it simultaneously opens the door to new vulnerabilities. The MathPrompt attack raises serious questions about AI safety, while tools like ChatGPT reshape our understanding of interaction with artificial intelligence. In parallel, technologies like the Sian2 chip are a reminder of the infrastructure required to sustain these advancements, emphasizing the interdependence of software and hardware in the AI ecosystem.

The juxtaposition of these advancements highlights a pivotal moment in modern technology, where the focus must shift toward not only fostering innovation but also ensuring robust protective measures are in place to mitigate emerging threats. As we step into this new frontier, an emphasis on ethical development and strategic safeguards will be crucial in harnessing the future of AI responsibly and effectively.

Conclusion

The discussion surrounding AI remains vibrant and complex, requiring ongoing dialogue among stakeholders, developers, and researchers. By prioritizing both innovation and security, the AI community can work collectively to navigate this emerging frontier, ensuring technology serves humanity positively and responsibly.