Navigating the New Challenges: Wikipedia’s Response to AI Threats

As artificial intelligence (AI) transforms how information is shared, Wikipedia finds itself at a critical juncture. With over 16 billion visits each month, the online encyclopedia is not just a repository of human knowledge but also a target for misinformation campaigns and promotional content, a risk heightened by the rise of AI-generated text. Wikipedia’s volunteers must now step up their vigilance to meet these challenges.

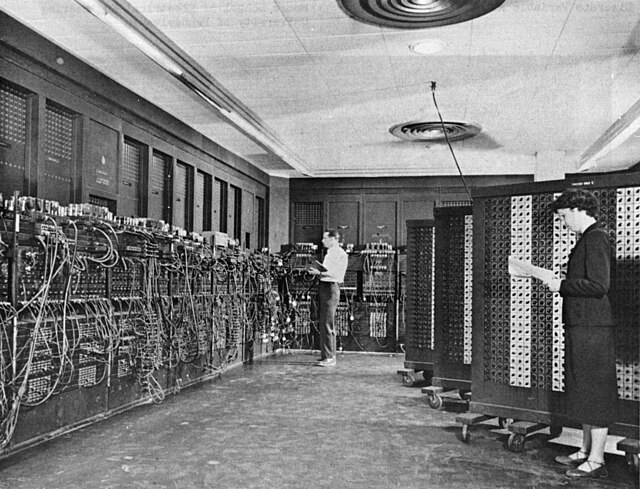

The intersection of human knowledge and AI-generated content

A Legacy of Vigilance Against Misinformation

For years, Wikipedia has dealt with fake articles. A notable instance involved a page falsely claiming a Northern Irish radio presenter had been a promising break-dancer, a clear case of trolling. However, the stakes have risen dramatically now that AI can effortlessly produce credible-sounding text, making it easier to slip misinformation into the encyclopedia’s vast body of articles. Wikipedia co-founder Jimmy Wales acknowledges AI as “both an opportunity and a threat,” highlighting the delicate balance the platform must maintain in its approach to user-generated content.

Thus far, a dedicated community of 265,000 volunteers has been central to mitigating such risks. Their role extends beyond editing; they also moderate contributions and ensure that they adhere to Wikipedia’s rigorous standards.

The AI Challenge: A Surge in Content Creation

The launch of tools like ChatGPT has sparked a noticeable uptick in submissions that bear the hallmarks of AI generation. As noted by Miguel Ángel García, a Wikimedia Spain partner, the behavior of new editors has shifted: they now tend to contribute extensive blocks of content at once, whereas new volunteers have typically built articles gradually.

“Since artificial intelligence has existed, we’ve seen new volunteers providing comprehensive text that often contains redundancy typical of AI writing,” García remarked, noting how the community’s collective expertise remains pivotal in identifying less-than-valuable contributions.

Wikipedia’s dedicated volunteers are the guardians of editorial integrity

A Community-Driven Model for Quality Control

Because Wikipedia’s model is built on community engagement, the detection and removal of low-quality content falls to these volunteers. Chris Albon, Director of Machine Learning at the Wikimedia Foundation, highlights the community’s resilience in upholding the encyclopedia’s quality, stating that each text must cite credible secondary sources. Dubious articles, especially those lacking substantiating references, face swift deletion. García further explains that articles without sources are quickly flagged and often deleted within days unless supporting evidence is provided.

Adapting to AI: Policies and Community Oversight

The community’s efforts to cultivate a robust framework for AI usage on the platform have already borne fruit. Albon emphasizes the necessity of “learning to live with these tools,” advocating for a model where volunteers continually edit and enhance AI-generated contributions. Significantly, the site does not penalize the integration of artificial intelligence per se; rather, it targets texts that fail to meet established quality standards.

Looking Ahead: The Relationship Between AI and Knowledge Sharing

As AI continues to evolve, so too does the potential for collision with platforms like Wikipedia. A notable concern arises when widely used AI chatbots distill information from Wikipedia without genuine engagement with the articles themselves. Albon warns, “If there’s a disconnect between where knowledge is generated and where it’s consumed, we risk losing a generation of volunteers.”

Indeed, Wikipedia finds itself at the intersection of knowledge generation and consumption. There is an urgent need for attribution in AI outputs to ensure the integrity of sourced information. Without effective measures, the likelihood of misinformation proliferating grows markedly.

The ongoing challenge of information authenticity in the AI landscape

Conclusion: A Call for Community Resilience

Wikipedia stands as both a monument to human knowledge and a battleground for misinformation. As AI technologies continue to gain traction, the encyclopedia’s reliance on a robust community remains paramount. The intention is not to eliminate AI tools but to integrate them responsibly within Wikipedia’s ecosystem, ensuring that the pursuit of accurate and reliable information endures in this new age of artificial intelligence.

For those interested in further developments on this topic, explore the Wikipedia page.