Meta AI’s Llama 3: Revolutionizing AI Capabilities

The dawn of a new era in artificial intelligence has arrived with the introduction of Meta AI’s Llama 3. This open-source wonder is not just an upgrade, but a significant leap forward in AI capabilities. In this article, we’ll delve into the features and implications of Llama 3, exploring what sets it apart from its predecessors and how it will transform the AI landscape.

What is Llama 3?

Llama 3 is the latest generation of open-source large language models developed by Meta. It represents a significant advancement in artificial intelligence, building on the foundation laid by its predecessors, Llama 1 and Llama 2. The new evaluation set includes 1,800 prompts across 12 key use cases, such as asking for advice, brainstorming, classification, and more.

Key Features of Llama 3

- Model sizes: 8 billion parameters: A smaller yet highly efficient version of Llama 3, suitable for a broad range of applications. 70 billion parameters: A larger, more powerful model that excels in complex tasks and demonstrates superior performance on industry benchmarks.

- Training data: 15 trillion tokens: The model was trained on an extensive dataset consisting of over 15 trillion tokens, which is seven times larger than the dataset used for Llama 2. 4x more code: The training data includes four times more code compared to Llama 2, enhancing its ability to handle coding and programming tasks. 30+ languages: Includes high-quality non-English data covering over 30 languages, making it more versatile and capable of handling multilingual tasks.

- Training infrastructure: 24K GPU Clusters: The training was conducted on custom-built clusters with 24,000 GPUs, achieving a compute utilization of over 400 TFLOPS per GPU. 95% effective training time: Enhanced training stack and reliability mechanisms led to more than 95% effective training time, increasing overall efficiency by three times compared to Llama 2.

Function Calling in Llama 3

The function calling feature in Llama 3 allows users to execute functions or commands within the AI environment by invoking specific keywords or phrases. This feature enables users to interact with Llama 3 in a more dynamic and versatile manner, as they can trigger predefined actions or tasks directly from their conversation with the AI.

What Can Llama 3 Do That Llama 1 and Llama 2 Can’t?

Llama 3 introduces significantly improved reasoning capabilities compared to its predecessors, Llama 1 and Llama 2. This enhancement allows the model to perform complex logical operations and understand intricate patterns within the data more effectively.

Llama 3 excels in code generation thanks to a training dataset with four times more code than its predecessors. It can automate coding tasks, generate boilerplate code, and suggest improvements, making it an invaluable tool for developers.

What’s More?

Future Llama 3 models with over 400 billion parameters promise greater performance and capabilities, pushing the boundaries of natural language processing. Upcoming versions of Llama 3 will support multiple modalities and languages, expanding its versatility and global applicability.

Meta’s decision to release Llama 3 as open-source software fosters innovation and collaboration in the AI community, promoting transparency and knowledge sharing.

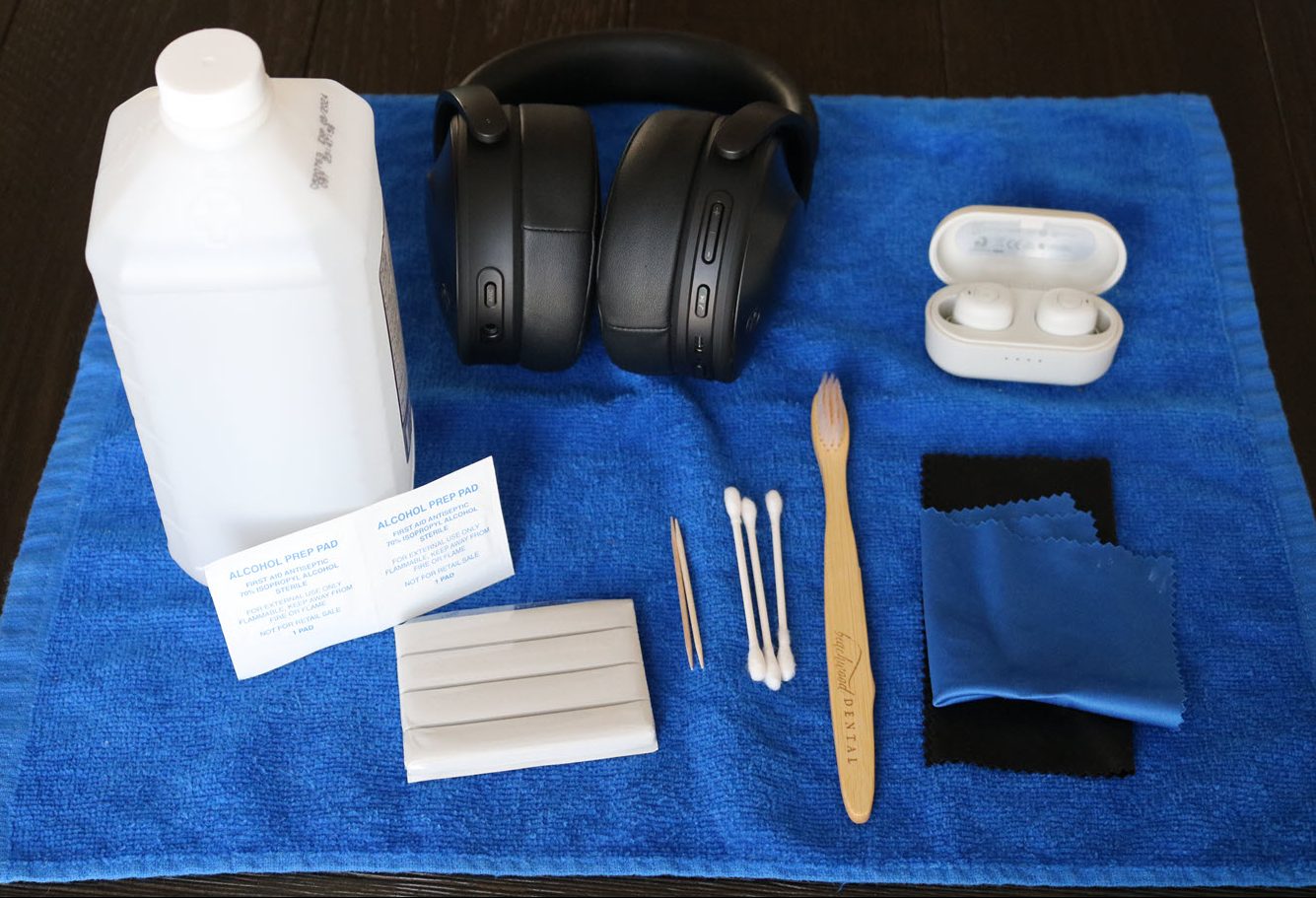

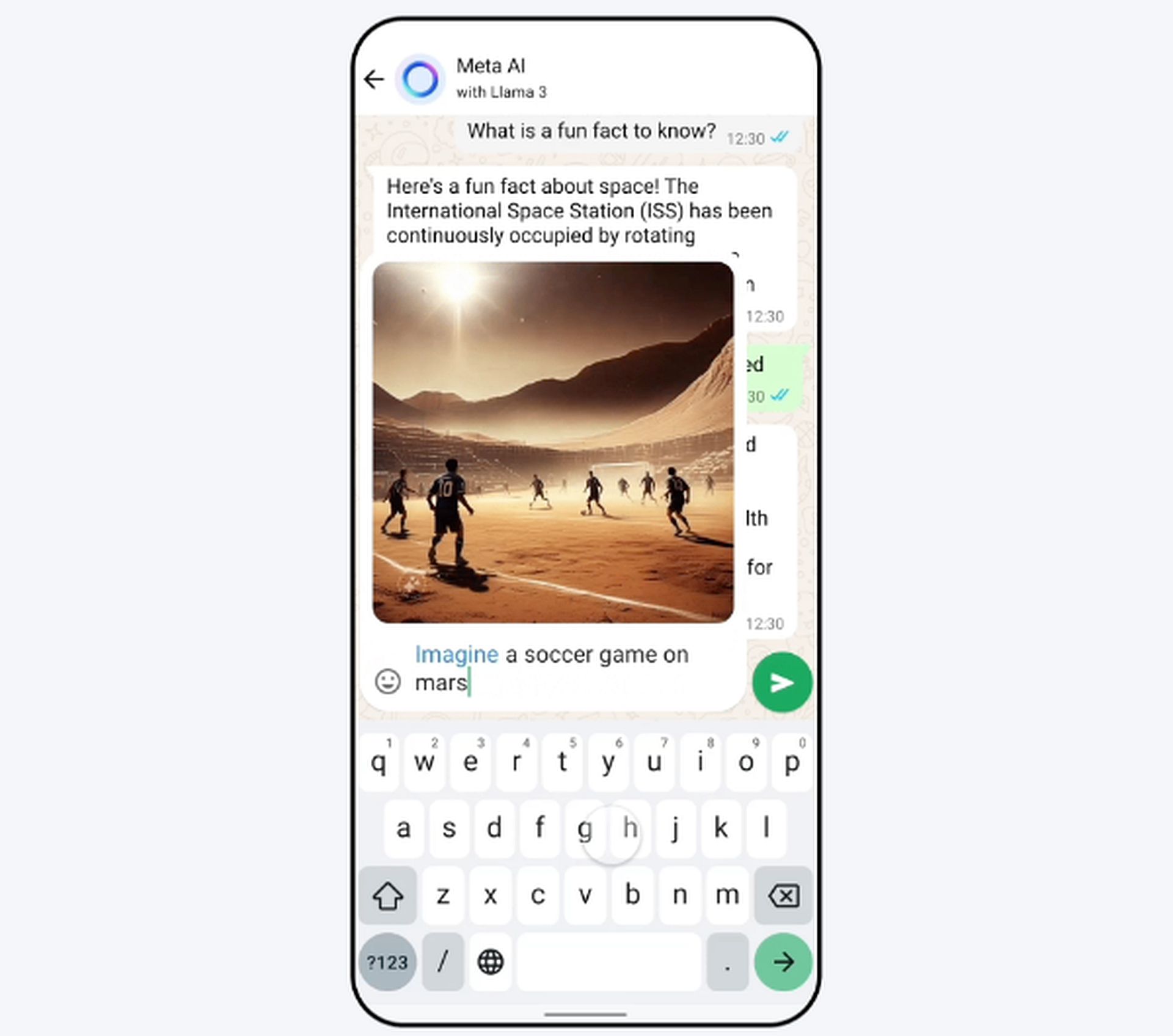

Caption: Meta Llama 3

Caption: Meta Llama 3

AI Safety Institute Releases LLM Safety Results

The UK Government’s Institute for AI Safety has published its first AI testing results. Tests screened for cyber, chemical, biological agent capabilities and safeguards effectiveness for five leading models.

The Institute assessed AI models against four key risk areas, including how effective the safeguards that developers have installed actually are in practice. As part of the findings, the Institute’s tests have found that:

- Several LLMs demonstrated expert-level knowledge of chemistry and biology. Models answered over 600 private expert-written chemistry and biology questions at similar levels to humans with PhD-level training.

- Several LLMs completed simple cyber security challenges aimed at high-school students but struggled with challenges aimed at university students.

- Two LLMs completed short-horizon agent tasks (such as simple software engineering problems) but were unable to plan and execute sequences of actions for more complex tasks.

- All tested LLMs remain highly vulnerable to basic jailbreaks, and some will provide harmful outputs even without dedicated attempts to circumvent their safeguards.

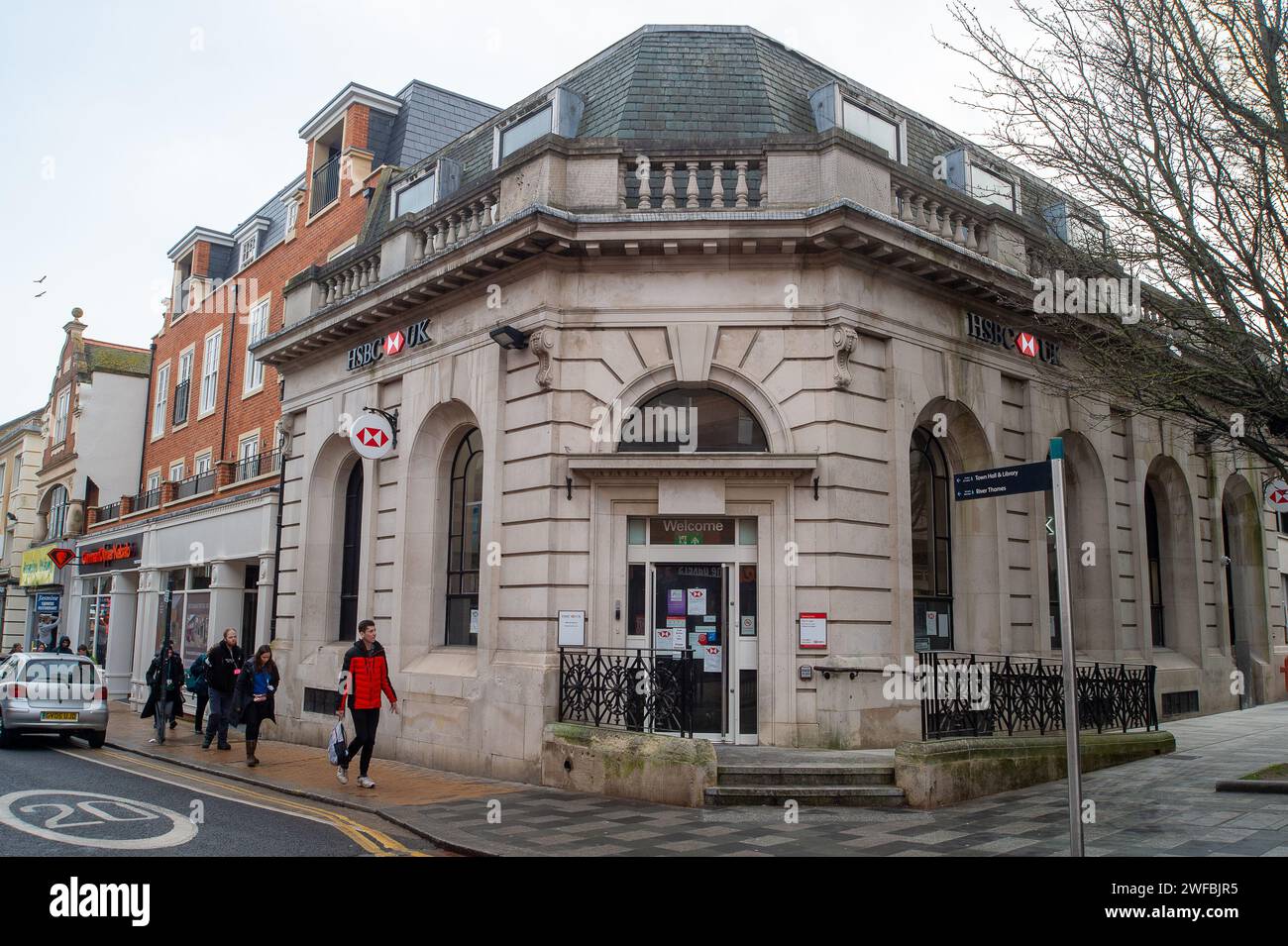

Caption: AI Safety Institute

Caption: AI Safety Institute

The results will likely be discussed at the upcoming Seoul Summit this week, which will be co-hosted by the UK and the Republic of Korea.

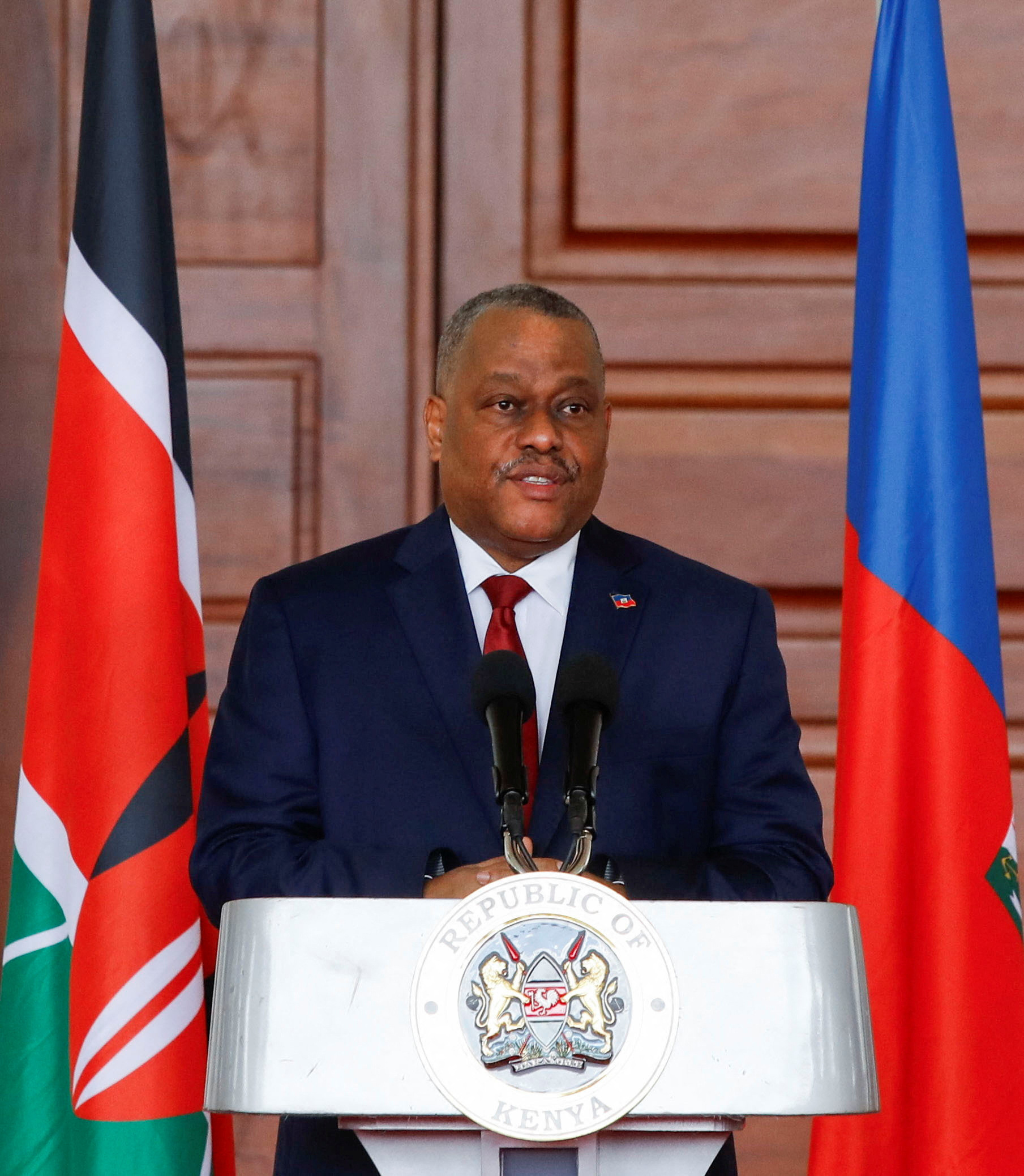

Caption: Seoul Summit

Caption: Seoul Summit