Llama 13B: The Military’s New Unlikely Ally in AI

In a striking development, researchers in China have used Meta Platforms Inc.’s Llama 13B artificial intelligence model to create a chatbot explicitly designed for military purposes. Described in academic papers, this application of AI shows both the transformative potential and the ethical challenges of advanced technology in military contexts.

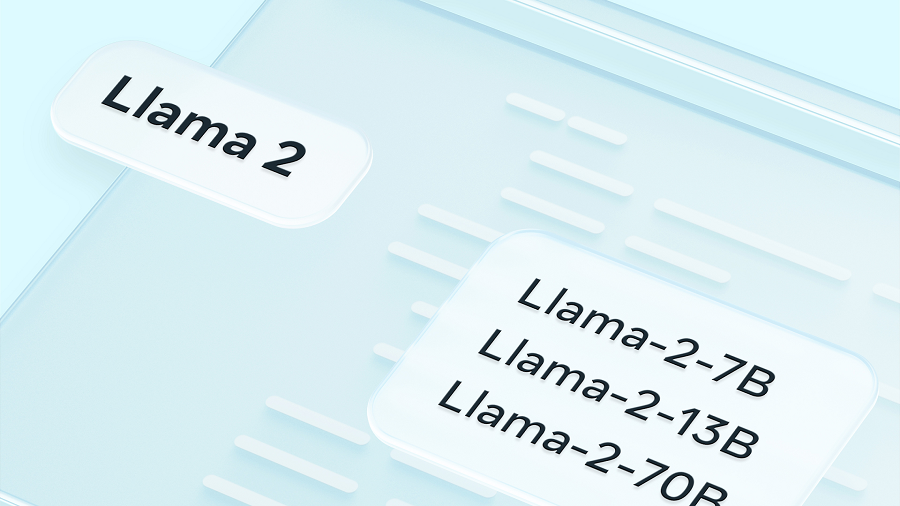

An intriguing look into the Llama AI ecosystem.

Launched in February 2023, the Llama series is a family of open-source large language models that gives developers broad access and freedom for both research and commercial work. However, Meta explicitly prohibits military applications under its licensing terms. This raises significant ethical questions: how should AI usage be regulated, especially in contexts that can lead to violence or warfare?

The chatbot, dubbed ChatBIT, was customized by a team of six researchers from three Chinese institutions. Notably, two of these institutions are affiliated with the Academy of Military Science, the leading research entity for the People’s Liberation Army. The academic paper describing the research states that ChatBIT is “optimized for dialogue and question-answering tasks in the military field,” indicating a calculated move to integrate sophisticated AI into defense operations.

The Technology Behind ChatBIT

ChatBIT is built on Meta’s Llama 13B, a model based on the Transformer neural network architecture. The researchers added performance optimizations aimed at improving comprehension of extended dialogues. Because tactical scenarios depend on fast, efficient communication, using Llama in military contexts could prove significant.

The researchers further extended ChatBIT’s capabilities by tuning certain parameters for military use cases and giving it access to extensive datasets, including over 100,000 military dialogue records. Domain-specific data of this kind can improve a model’s understanding, adaptability, and pragmatism in responses, all pivotal for military applications where precision is non-negotiable.

Innovations in military AI are reshaping traditional paradigms.

Simultaneously, another study published by researchers from an aviation company aligned with the People’s Liberation Army has explored Llama 2 for training airborne electronic warfare strategies. Such advancements signal a formidable shift where AI doesn’t merely assist but actively participates in strategy formulation in conflict zones.

The Larger Landscape of AI Development

The advancements do not stop with ChatBIT. Meta’s Llama series has continued to evolve with iterations like Llama 2, released in July 2023, which was trained on significantly larger datasets and can process longer prompts. The larger Llama 2 models use a technique known as grouped-query attention (GQA), in which several query heads share one set of key and value heads. This reduces the memory needed at inference time and speeds up generation, which is crucial for applications in both commercial and military settings.
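To make the GQA saving concrete, here is a minimal sketch of how sharing key/value heads shrinks the per-token cache a model must keep in memory during inference. The configuration values (80 layers, 64 query heads, 8 key/value heads, head dimension 128, 16-bit values) are illustrative assumptions for a large model, and the function names are hypothetical; none of this comes from the ChatBIT paper.

```python
def kv_cache_bytes_per_token(n_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Bytes of key/value cache stored for each token in the context."""
    # Factor 2: one key vector and one value vector per KV head, per layer.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value


def kv_head_for_query_head(query_head, n_query_heads, n_kv_heads):
    """Index of the shared KV head that a given query head reads from."""
    if n_query_heads % n_kv_heads != 0:
        raise ValueError("n_query_heads must be divisible by n_kv_heads")
    group_size = n_query_heads // n_kv_heads  # query heads per KV head
    return query_head // group_size


# Assumed, illustrative configuration (not taken from the article's sources).
N_LAYERS, N_QUERY_HEADS, N_KV_HEADS, HEAD_DIM = 80, 64, 8, 128

# Standard multi-head attention: every query head has its own KV head.
mha_bytes = kv_cache_bytes_per_token(N_LAYERS, N_QUERY_HEADS, HEAD_DIM)
# Grouped-query attention: 64 query heads share 8 KV heads.
gqa_bytes = kv_cache_bytes_per_token(N_LAYERS, N_KV_HEADS, HEAD_DIM)

print(f"MHA cache per token: {mha_bytes} bytes")
print(f"GQA cache per token: {gqa_bytes} bytes")
print(f"Reduction factor:    {mha_bytes // gqa_bytes}x")
```

Under these assumptions the cache shrinks by the ratio of query heads to key/value heads, which is what lets longer prompts or larger batches fit on the same hardware.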

Moreover, Meta recently introduced Llama 3.1 (405B), which stands as the most advanced model in the Llama series to date. The improvements in reasoning and data processing capabilities foretell a future where AI could play even more intricate roles in decision-making processes, whether civilian or military.

Every advance in this field warrants scrutiny. As Chief Executive Mark Zuckerberg indicated, the forthcoming Llama 4, presently in development, is being trained on a cluster of more than 100,000 H100 graphics processing units. What does this mean for society? The implications could be profound, expanding our reliance on AI in ways we may not yet anticipate.

Navigating Ethical Waters

As we watch these developments unfold, it’s imperative to reflect on the ethical dimensions of deploying AI in wartime applications. The fine line between innovation and moral responsibility becomes increasingly blurred as more countries recognize the potential of AI in military strategies. This transformation may allow for enhanced operational capabilities but could also provoke debates over accountability and the implications of deploying such technologies in combat.

“The true test of AI will not just be its capability but how responsibly we deploy it in scenarios that matter the most.”

In my opinion, the potential benefits of AI in defense must be approached with caution. Who is held accountable if AI systems fail in multitasking environments, leading to catastrophic decisions? As a society, we need to discuss how best to regulate and oversee this burgeoning technology while ensuring that security takes precedence over efficiency.

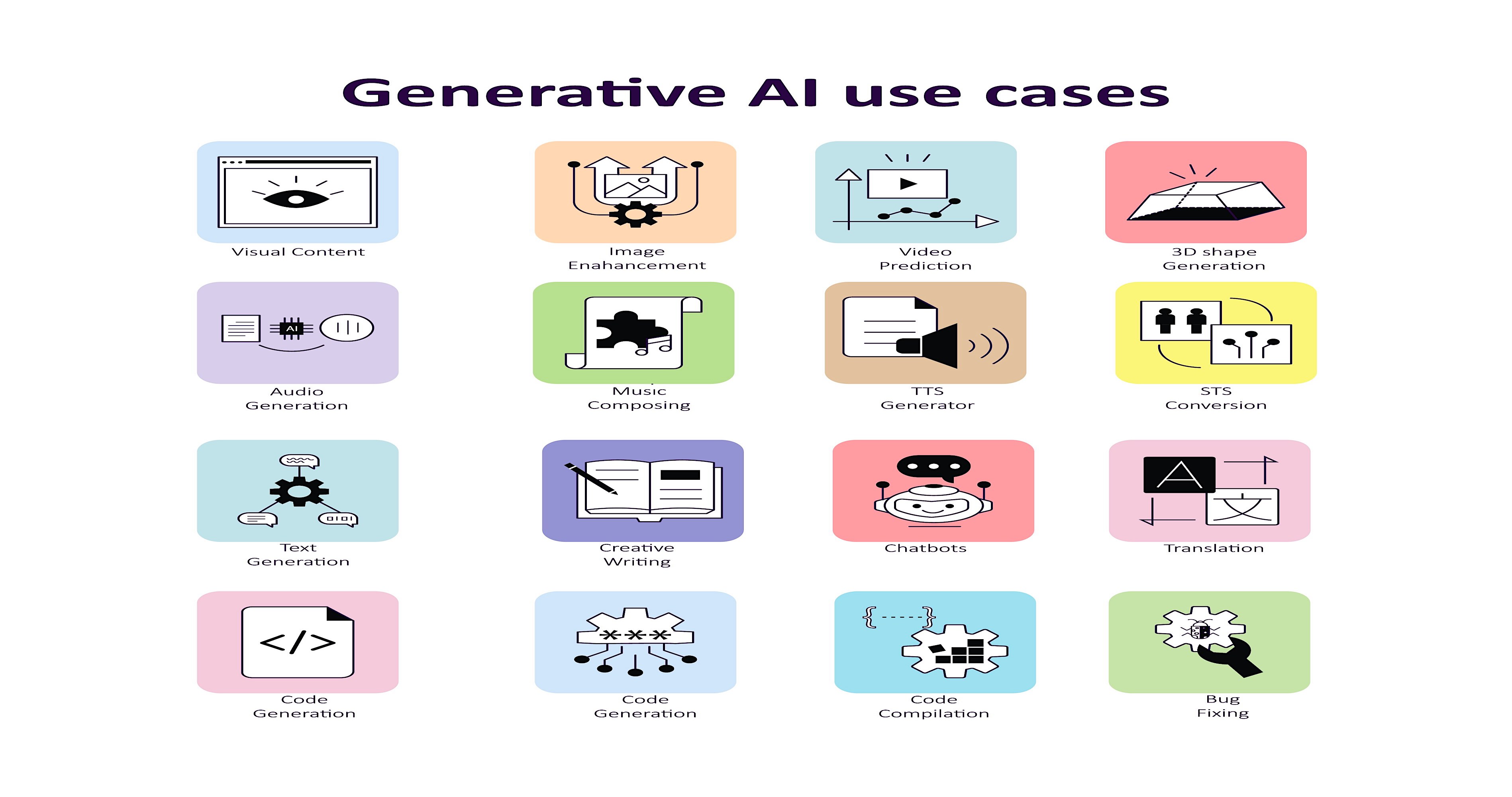

Exploring the frontier of AI-driven military applications sheds light on emerging ethical dilemmas.

In conclusion, while the innovation represented by ChatBIT and the Llama series is exhilarating, we must place equal emphasis on understanding its implications, especially in military contexts. As we integrate AI deeper into our societies, balancing technological prowess with ethical considerations will be crucial for a future that prioritizes human oversight, accountability, and, ultimately, peace.