HippoRAG: A Novel Retrieval Framework Inspired by the Hippocampal Indexing Theory

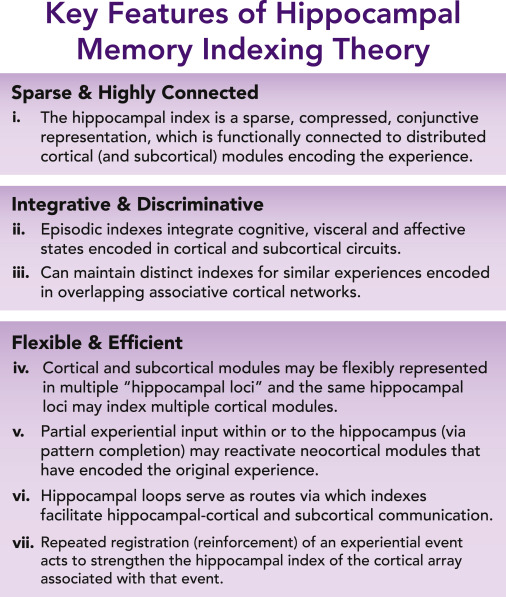

The ability of large language models (LLMs) to store and retrieve knowledge efficiently is crucial for their applications. While significant progress has been made in retrieval-augmented generation (RAG), some limitations remain unaddressed. Researchers from Ohio State University and Stanford University have introduced HippoRAG, a novel retrieval framework inspired by the hippocampal indexing theory of human long-term memory.

The Challenges of Knowledge Integration in LLMs

The human brain can store vast amounts of knowledge and integrate new experiences without losing previous ones. This long-term memory system lets humans keep updating the knowledge they use for reasoning and decision-making. In contrast, LLMs still struggle to integrate new knowledge after pre-training. RAG has become a popular solution for long-term memory in LLMs, but current methods have difficulty integrating knowledge across different sources because each new document is encoded separately.

Integrating knowledge across multiple sources is crucial for many real-world applications.

HippoRAG: A Neurobiologically-Inspired Methodology

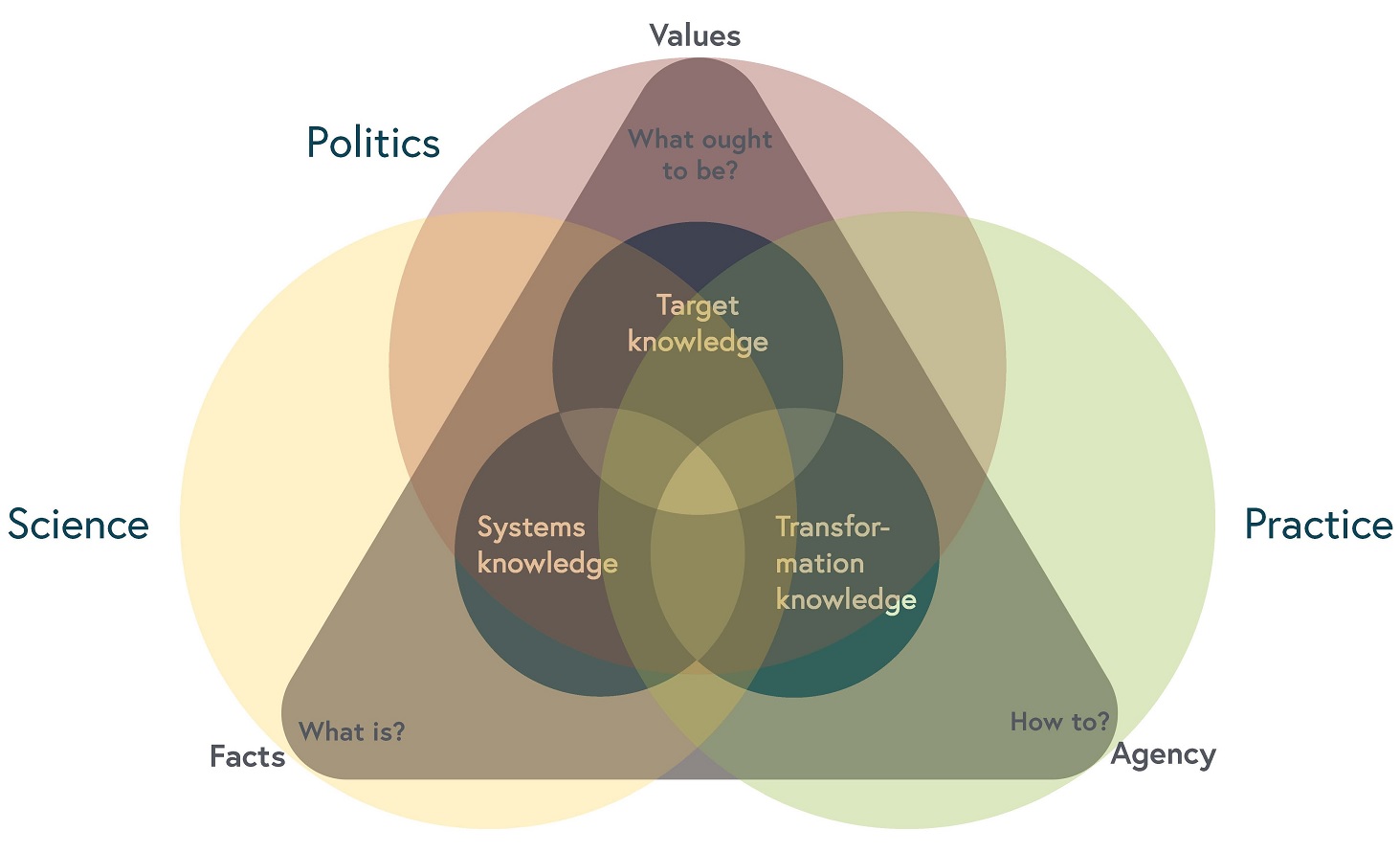

HippoRAG takes inspiration from the biological interactions between the neocortex and the hippocampus that enable the powerful context-based, continually updating memory of the human brain. HippoRAG mimics this memory model by using an LLM to transform a corpus of documents into a knowledge graph that acts as an artificial hippocampal index.

The hippocampal indexing theory inspires HippoRAG’s novel approach to knowledge retrieval.

The offline indexing phase, analogous to memory encoding in the brain, uses an instruction-tuned LLM to extract important features from passages in the form of knowledge graph triples. This allows for more fine-grained pattern separation compared to dense embeddings used in classic RAG pipelines.
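
The indexing idea described above can be illustrated with a minimal sketch. It is not the researchers' implementation: the triples are hard-coded stand-ins for what an instruction-tuned LLM would extract, and the names `extracted`, `build_index`, and `neighbors` are hypothetical. It shows only how triples from separately encoded passages can end up connected through shared nodes in one graph.

```python
from collections import defaultdict

# Hypothetical output of the LLM extraction step: for each passage,
# a list of (subject, relation, object) triples. A real pipeline would
# obtain these from an instruction-tuned LLM; here they are invented.
extracted = {
    "passage_1": [("Alice", "studies", "memory research")],
    "passage_2": [("Alice", "works at", "Example University")],
}


def build_index(triples_by_passage):
    """Build an undirected graph plus a node-to-passage lookup table."""
    graph = defaultdict(set)             # node -> {(relation, neighbor), ...}
    node_to_passages = defaultdict(set)  # node -> {passage id, ...}
    for passage_id, triples in triples_by_passage.items():
        for subj, rel, obj in triples:
            # Store the edge in both directions so either end can reach the other.
            graph[subj].add((rel, obj))
            graph[obj].add((rel, subj))
            # Remember which passages mention each node.
            node_to_passages[subj].add(passage_id)
            node_to_passages[obj].add(passage_id)
    return graph, node_to_passages


def neighbors(graph, node):
    """Return the sorted names of nodes directly connected to `node`."""
    return sorted({nbr for _, nbr in graph.get(node, set())})


graph, node_to_passages = build_index(extracted)
print(neighbors(graph, "Alice"))
print(sorted(node_to_passages["Alice"]))
```

In this toy example, the node "Alice" links facts that came from two different passages, which is the kind of cross-document connection that separately encoded passages do not provide on their own.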

HippoRAG in Action

The researchers evaluated HippoRAG’s retrieval capabilities on two challenging multi-hop question answering benchmarks, MuSiQue and 2WikiMultiHopQA, and the HotpotQA dataset. They compared it against several strong retrieval methods and recent LLM-augmented baselines.

HippoRAG outperforms other methods on multi-hop question answering tasks.

The results show that, on single-step retrieval, HippoRAG outperforms all other methods, including LLM-augmented baselines, on MuSiQue and 2WikiMultiHopQA, and performs competitively on HotpotQA. When combined with the multi-step retrieval method IRCoT, HippoRAG provides complementary gains of up to 20% on the same datasets.

The Future of Knowledge Integration in LLMs

HippoRAG’s knowledge integration capabilities, demonstrated by its strong results on path-following multi-hop QA and its promise on path-finding multi-hop QA, together with its dramatic efficiency improvements and continuously updating nature, make it a powerful middle-ground framework between standard RAG methods and parametric memory.

HippoRAG offers a promising solution for long-term memory in LLMs.

Combining knowledge graphs with LLMs is a powerful approach with many potential applications. Adding graph neural networks (GNNs) to HippoRAG is one direction worth exploring in the future.