Energy-Based World Models: The Future of Human-Like Cognition in AI

Autoregressive models, such as the language models behind ChatGPT and the original DALL-E, have revolutionized AI research by enabling the generation of realistic text and images. However, these models have limitations that prevent them from achieving human-like cognitive abilities. In this article, we'll explore Energy-Based World Models (EBWM) and how they are poised to bring human-like cognition to AI.

AI research is rapidly advancing, and EBWM is at the forefront

The Limitations of Autoregressive Models

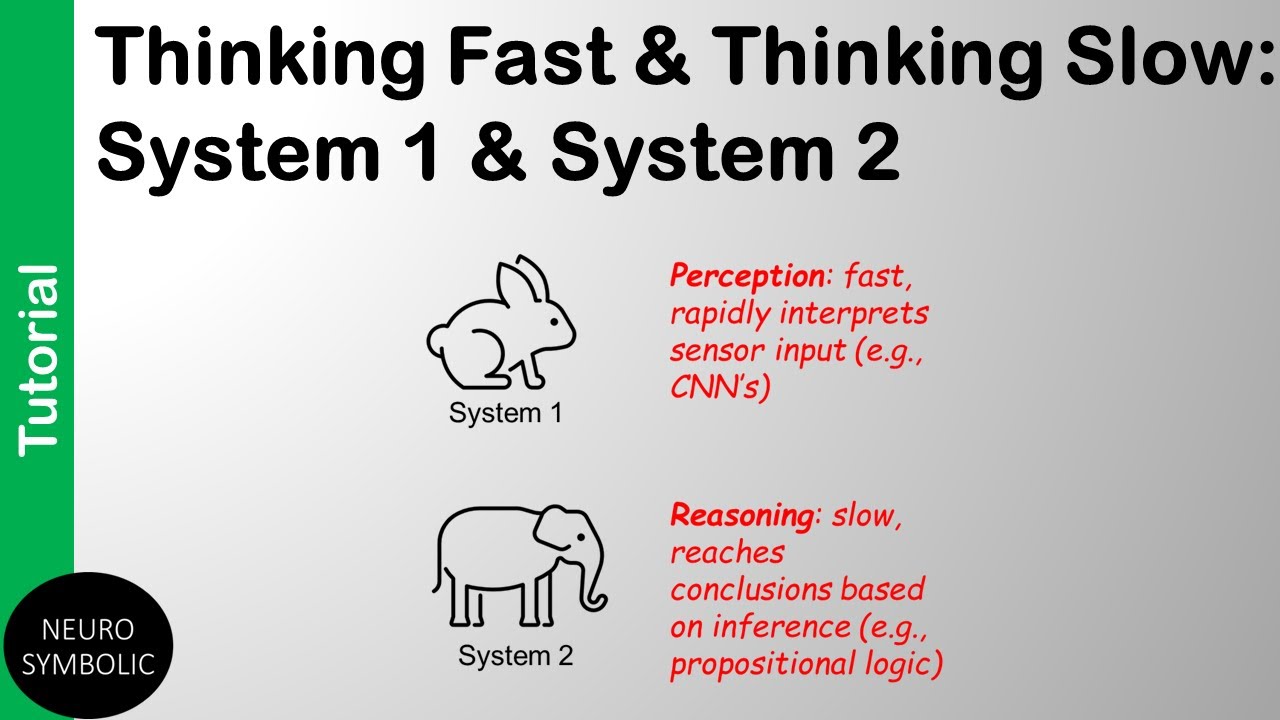

Autoregressive models have been instrumental in achieving state-of-the-art results in various AI applications. However, they struggle with tasks that require different modes of thinking and reasoning. This is because they lack the cognitive facets that are inherent in human brains.

Researchers have identified four key cognitive facets that are missing in autoregressive models:

- Predictions shaping internal state: In humans, predictions about the future naturally shape the brain’s internal state. Autoregressive models lack this ability.

- Evaluating prediction plausibility: Humans naturally evaluate the plausibility of their predictions, while autoregressive models do not assess the strength or plausibility of their outputs.

- Dynamic computation: Humans dedicate varying amounts of computational resources to a prediction or line of reasoning, depending on the task. Autoregressive models cannot do this: they spend the same amount of computation on each token, however easy or hard the prediction is.

- Modeling uncertainty in continuous spaces: Humans can model uncertainty in continuous spaces, while autoregressive models need to break everything down into discrete tokens.

Energy-Based World Models: A Novel Approach

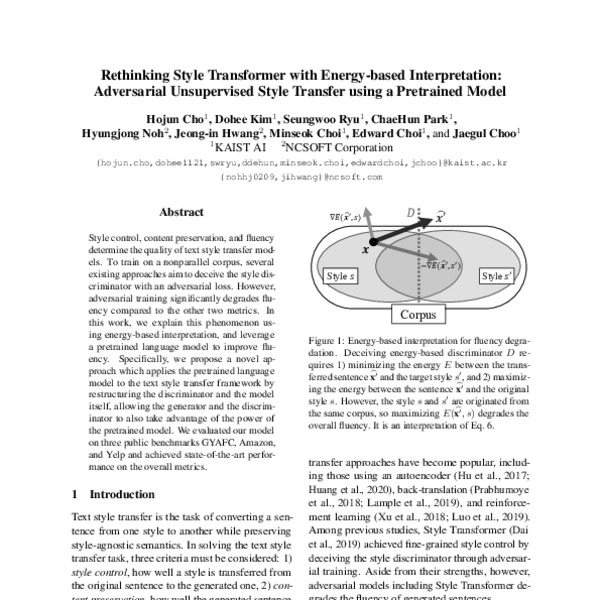

To address the limitations of autoregressive models, researchers have proposed Energy-Based World Models (EBWM). The key idea is to approach world modeling as “making future state predictions in the input space” and “predicting the energy/compatibility of these future state predictions with the current context” using an Energy-Based Model (EBM).

EBMs use a form of contrastive learning in which the model learns to measure the compatibility of various inputs. By making its predictions in the input space, EBWM achieves the first and third cognitive facets: its predictions shape the model's internal state, and it can perform dynamic computation.
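To make the idea of energy as compatibility more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the researchers' implementation: the quadratic `energy` function and the matrix `W` are hypothetical stand-ins for a learned model, and refining a candidate by gradient descent on the energy is one common way to use an EBM for prediction, not necessarily the exact procedure EBWM uses.

```python
import numpy as np

def energy(context, candidate, W):
    """Toy energy: low when the candidate is compatible with the context.

    Assumption: compatibility means being close to W @ context. A real
    EBWM would use a learned network here instead of a fixed quadratic.
    """
    diff = candidate - W @ context
    return 0.5 * float(diff @ diff)

def energy_grad(context, candidate, W):
    """Gradient of the toy energy with respect to the candidate."""
    return candidate - W @ context

def refine(context, W, init, step_size=0.5, tol=1e-6, max_steps=100):
    """Descend the energy from an initial guess until it falls below tol.

    The number of steps is not fixed in advance: poor initial guesses
    receive more computation than good ones.
    """
    candidate = init.copy()
    steps = 0
    while energy(context, candidate, W) > tol and steps < max_steps:
        candidate = candidate - step_size * energy_grad(context, candidate, W)
        steps += 1
    return candidate, energy(context, candidate, W), steps

# Hypothetical "world dynamics": the next state is the context rotated 90 degrees.
W = np.array([[0.0, 1.0], [-1.0, 0.0]])
context = np.array([1.0, 0.0])

near = np.array([0.1, -0.9])  # a plausible guess for the next state
far = np.array([5.0, 5.0])    # an implausible guess

# Scoring: the plausible guess gets the lower energy.
e_near_init = energy(context, near, W)
e_far_init = energy(context, far, W)

# Refinement: both guesses converge, but the implausible one needs more steps.
pred_near, e_near, steps_near = refine(context, W, near)
pred_far, e_far, steps_far = refine(context, W, far)

print(f"initial energies: near={e_near_init:.3f}, far={e_far_init:.3f}")
print(f"refinement steps: near={steps_near}, far={steps_far}")
```

In this toy setup, the energy value acts as a plausibility score for a candidate future state, and the stopping criterion in `refine` shows how the amount of computation can vary with the difficulty of the prediction.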

The Energy-Based Transformer is a domain-agnostic architecture that can learn Energy-Based World Models

The Energy-Based Transformer

To make EBMs competitive with modern architectures, researchers designed the Energy-Based Transformer (EBT), a domain-agnostic transformer architecture that can learn Energy-Based World Models. EBT incorporates elements from diffusion models and makes changes to the attention structure and computation to incorporate various predictions and better account for future states.

According to the researchers, on computer vision tasks EBT scales well with data and GPU hours compared to autoregressive models. EBWM initially learns more slowly than autoregressive models, but as scale increases it matches and eventually exceeds them in data and GPU-hour efficiency.

“This outcome is promising for higher compute regimes, as the scaling rate of EBWM is higher than autoregressive models as computation increases.” - Researchers

Complementing Autoregressive Models

While EBWM shows promising results, researchers do not see it as a drop-in replacement for autoregressive models. Instead, they envision EBWM as a complementary approach that can be used in scenarios requiring long-term System 2 thinking to solve challenging problems.

EBWM is particularly useful for scenarios requiring long-term System 2 thinking

In conclusion, Energy-Based World Models have the potential to bring more human-like cognition to AI. By addressing the limitations of autoregressive models, EBWM could help AI systems reason, plan, and perform System 2 thinking in ways that are closer to how humans do.