DeepSeek Unleashes DeepSeek-V3: A Revolution in AI Technology

Chinese artificial intelligence developer DeepSeek has released DeepSeek-V3, a large language model (LLM) with 671 billion parameters. The model can generate text, write software code, and perform related tasks. DeepSeek-V3 reportedly outperforms other open-source models, including several well-known ones, on a number of benchmark tests.

Understanding the Mixture of Experts Architecture

DeepSeek-V3 uses a mixture of experts (MoE) architecture: the model comprises multiple neural networks, known as experts, each optimized for a different set of tasks. When the model receives a prompt, a mechanism called a router sends it to the neural network best equipped to answer.

The main benefit of the MoE architecture is lower hardware cost. A request to DeepSeek-V3 does not activate the entire model, only the part the router selects for the query. Roughly 37 billion of the model's 671 billion parameters are active for any given token, so DeepSeek-V3 can run on comparatively modest infrastructure while maintaining high performance.
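The routing idea described above can be sketched in a few lines of Python. This is a toy illustration, not DeepSeek's implementation: the four "experts" are hypothetical stand-in functions, the router scores are made up, and the softmax-plus-top-k selection is one common way to build a router, assumed here for the sake of the example.

```python
import math

def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route(router_scores, top_k=1):
    """Return (expert_index, weight) pairs for the top_k highest-scoring experts."""
    probs = softmax(router_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in chosen]

def moe_forward(x, experts, router_scores, top_k=1):
    """Run only the selected experts and mix their outputs by router weight."""
    return sum(w * experts[i](x) for i, w in route(router_scores, top_k))

# Hypothetical experts: each function stands in for a full neural network.
experts = [lambda x: x + 1.0, lambda x: x * 2.0, lambda x: x - 3.0, lambda x: x * x]
scores = [0.1, 2.0, -1.0, 0.5]  # made-up router scores for one input

selected = route(scores, top_k=2)  # experts 1 and 3 score highest
output = moe_forward(3.0, experts, scores, top_k=2)
print(selected, output)
```

Only the experts returned by `route` are ever called, which is where the hardware savings come from: the unselected experts cost nothing for that input.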

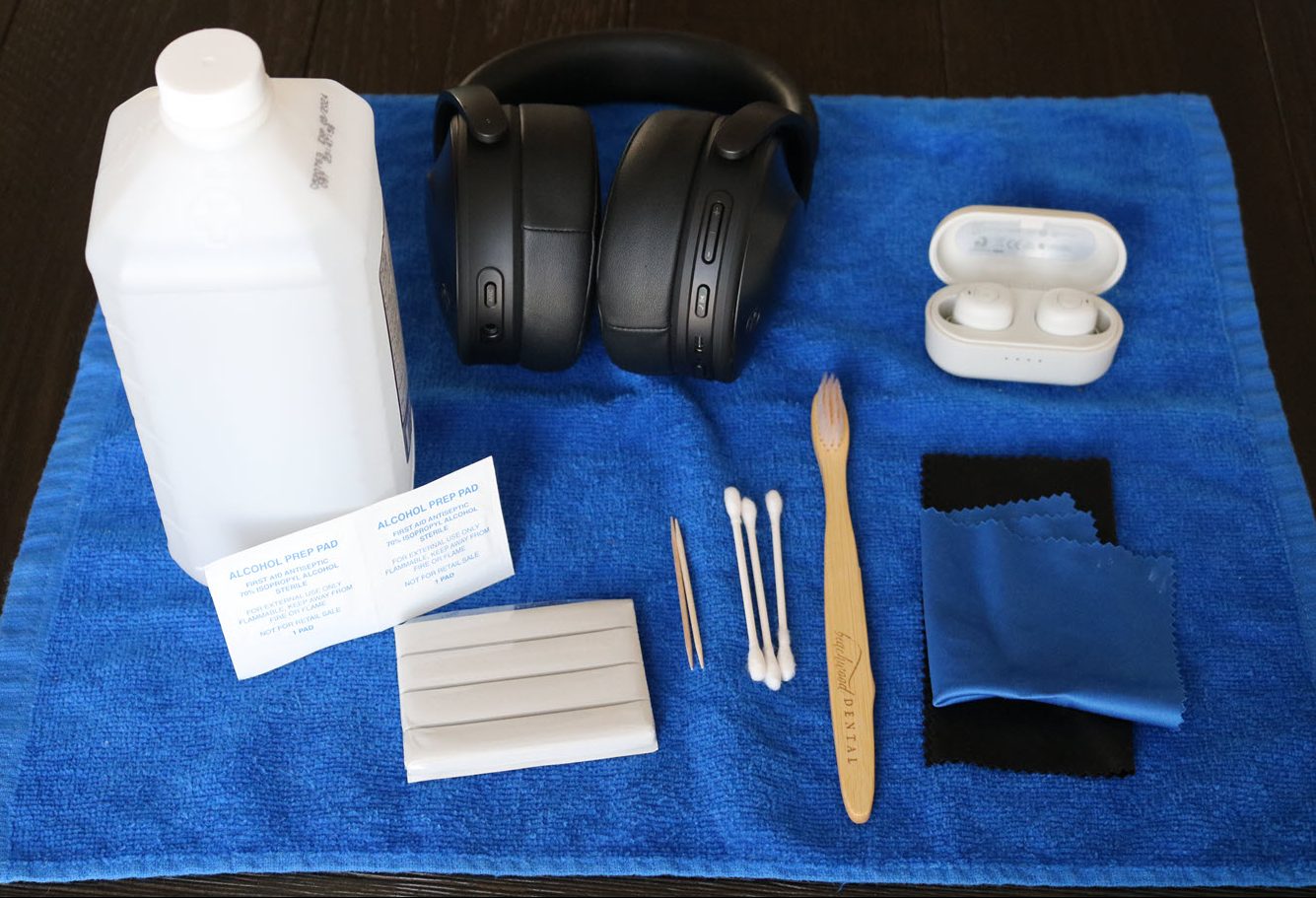

DeepSeek-V3 leveraging advanced AI architecture


The MoE structure also has drawbacks. During training, some of the neural networks can receive far more data than others, which leads to inconsistent output quality across the model. To address this, DeepSeek has developed new methods designed to keep the load balanced and the output quality consistent.
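The article does not describe the balancing method in detail. As a purely illustrative sketch, one way to even out expert load is to add a per-expert bias to the router scores and nudge it after every batch: down for overloaded experts, up for underloaded ones. The bias scheme, the step size, and the token scores below are all assumptions made for this example.

```python
def pick_experts(scores, bias, top_k=1):
    """Select top_k experts by router score plus a per-expert balancing bias."""
    adjusted = [s + b for s, b in zip(scores, bias)]
    ranked = sorted(range(len(scores)), key=lambda i: adjusted[i], reverse=True)
    return ranked[:top_k]

def update_bias(bias, load, step):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    updated = []
    for b, n in zip(bias, load):
        if n > mean_load:
            updated.append(b - step)
        elif n < mean_load:
            updated.append(b + step)
        else:
            updated.append(b)
    return updated

def simulate(batches, num_experts, step=0.125):
    """Route each batch, record per-expert load, then adjust the biases."""
    bias = [0.0] * num_experts
    history = []
    for batch in batches:
        load = [0] * num_experts
        for scores in batch:
            for i in pick_experts(scores, bias):
                load[i] += 1
        history.append(load)
        bias = update_bias(bias, load, step)
    return history, bias

# Made-up scores for four tokens and two experts; expert 0 always scores higher.
tokens = [[1.0, 0.9375], [1.0, 0.8125], [1.0, 0.5625], [1.0, 0.3125]]
history, bias = simulate([tokens] * 3, num_experts=2)
print(history)  # per-batch load on each expert
print(bias)     # final balancing biases
```

In this toy run the first batch sends every token to expert 0; after one bias update the load splits evenly and the biases stop changing.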

Training and Optimizations

DeepSeek-V3 was trained on 14.8 trillion tokens, where a token is a small unit of text such as a few letters or digits. Training took 2.788 million GPU hours, a relatively modest compute budget given that the largest AI clusters have enough GPUs to complete a job of this size in a much shorter time.
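To put the GPU-hour figure in perspective, the following back-of-the-envelope calculation converts it into wall-clock time. The cluster sizes are hypothetical, and the calculation assumes the work divides perfectly evenly across GPUs, which real training runs only approximate.

```python
GPU_HOURS = 2_788_000           # training compute reported in the article
TRAINING_TOKENS = 14.8e12       # training tokens reported in the article

def wall_clock_days(gpu_hours, num_gpus):
    """Days of wall-clock time if the work spreads evenly over num_gpus."""
    return gpu_hours / num_gpus / 24

def tokens_per_gpu_hour(tokens, gpu_hours):
    """Average number of training tokens processed per GPU hour."""
    return tokens / gpu_hours

# Hypothetical cluster sizes, chosen only for illustration.
for num_gpus in (2_000, 10_000, 50_000):
    days = wall_clock_days(GPU_HOURS, num_gpus)
    print(f"{num_gpus:>6} GPUs: {days:6.1f} days")

throughput = tokens_per_gpu_hour(TRAINING_TOKENS, GPU_HOURS)
print(f"{throughput:,.0f} tokens per GPU hour")
```

Under these assumptions, a cluster of a few thousand GPUs would need about two months, while one with tens of thousands could finish in days.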

Alongside the MoE architecture, DeepSeek-V3 includes several optimizations intended to improve output quality. One is multi-head latent attention, a refinement of the attention mechanism that LLMs use to identify the most important details in a passage. It lets the model extract key details from a text snippet several times rather than once, which makes it less likely to miss important information. The latent variant also compresses the keys and values the model caches, which reduces memory use during inference.
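Multi-head latent attention is generally described as caching one compressed latent vector per token instead of a full key and value vector for every attention head. The arithmetic below gives a rough sense of the memory effect. The dimensions are hypothetical rather than DeepSeek-V3's actual configuration, and the sketch ignores any extra positional components a real implementation may cache.

```python
def kv_cache_standard(num_heads, head_dim):
    """Values cached per token per layer: one key and one value vector per head."""
    return 2 * num_heads * head_dim

def kv_cache_latent(latent_dim):
    """Values cached per token per layer when only a shared latent vector is kept."""
    return latent_dim

# Hypothetical dimensions, chosen only for illustration.
NUM_HEADS, HEAD_DIM, LATENT_DIM = 32, 128, 512

standard = kv_cache_standard(NUM_HEADS, HEAD_DIM)
latent = kv_cache_latent(LATENT_DIM)
ratio = standard / latent

print(f"standard cache: {standard} values per token per layer")
print(f"latent cache:   {latent} values per token per layer")
print(f"reduction:      {ratio:.0f}x")
```

The trade-off is extra computation: keys and values have to be reconstructed from the latent vector when attention is computed.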

In addition, the model supports multi-token prediction. Language models usually generate text one token at a time; DeepSeek-V3 can predict several tokens at once, which speeds up inference.
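The speed-up from emitting several tokens per step can be illustrated with a toy decoder that simply counts forward passes. It is a simplification under stated assumptions: the "model" below just copies from a fixed target sentence, and every extra token is assumed to be usable, which a real system would have to verify.

```python
import math

def decoding_steps(num_tokens, tokens_per_step):
    """Forward passes needed to emit num_tokens at tokens_per_step per pass."""
    return math.ceil(num_tokens / tokens_per_step)

def generate(target, tokens_per_step):
    """Toy decoder: each 'forward pass' emits up to tokens_per_step tokens."""
    output, passes = [], 0
    while len(output) < len(target):
        start = len(output)
        output.extend(target[start:start + tokens_per_step])
        passes += 1
    return output, passes

# A made-up nine-token sentence standing in for model output.
target = "the quick brown fox jumps over the lazy dog".split()

one_at_a_time, passes_single = generate(target, tokens_per_step=1)
two_at_a_time, passes_double = generate(target, tokens_per_step=2)
print(passes_single, passes_double)
```

Both runs produce the same text, but the second needs roughly half as many passes through the model.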

Benchmark Performance

In evaluations, DeepSeek-V3 was compared with three other open-source LLMs: its predecessor DeepSeek-V2, Llama 3.1 with 405 billion parameters, and Qwen2.5 with 72 billion parameters. DeepSeek-V3 outperformed all three on each of the nine coding and math benchmarks used, and also did better on a range of text processing tasks.

The complete source code for DeepSeek-V3 is readily accessible on Hugging Face, inviting developers and researchers to explore its vast capabilities and contribute to its evolution in the AI landscape.

Fresh perspectives on AI models and technology

In the ever-expanding realm of artificial intelligence, DeepSeek’s launch of DeepSeek-V3 marks a significant milestone. With its robust framework and performance surpassing that of established competitors, it sets a high bar in the LLM arena. As the technology matures and more users leverage this advanced model, we can expect a surge in innovative applications capable of transforming various industries, paving the way for a future where AI becomes an even more integral part of our daily lives.

As we continue to witness the unfolding of AI technologies, it remains crucial for stakeholders in the industry to engage comprehensively with these models. Their applications span from generating engaging narratives to assisting in complex coding queries, establishing them as invaluable assets for businesses and developers alike.

In conclusion, as DeepSeek-V3 takes its place among the giants of artificial intelligence, the potential implications are vast and exhilarating, promising to push the boundaries of what is currently achievable in AI-driven technologies.
