LangChain’s Built-In Eval Metrics for AI Output: Unraveling the Mysteries

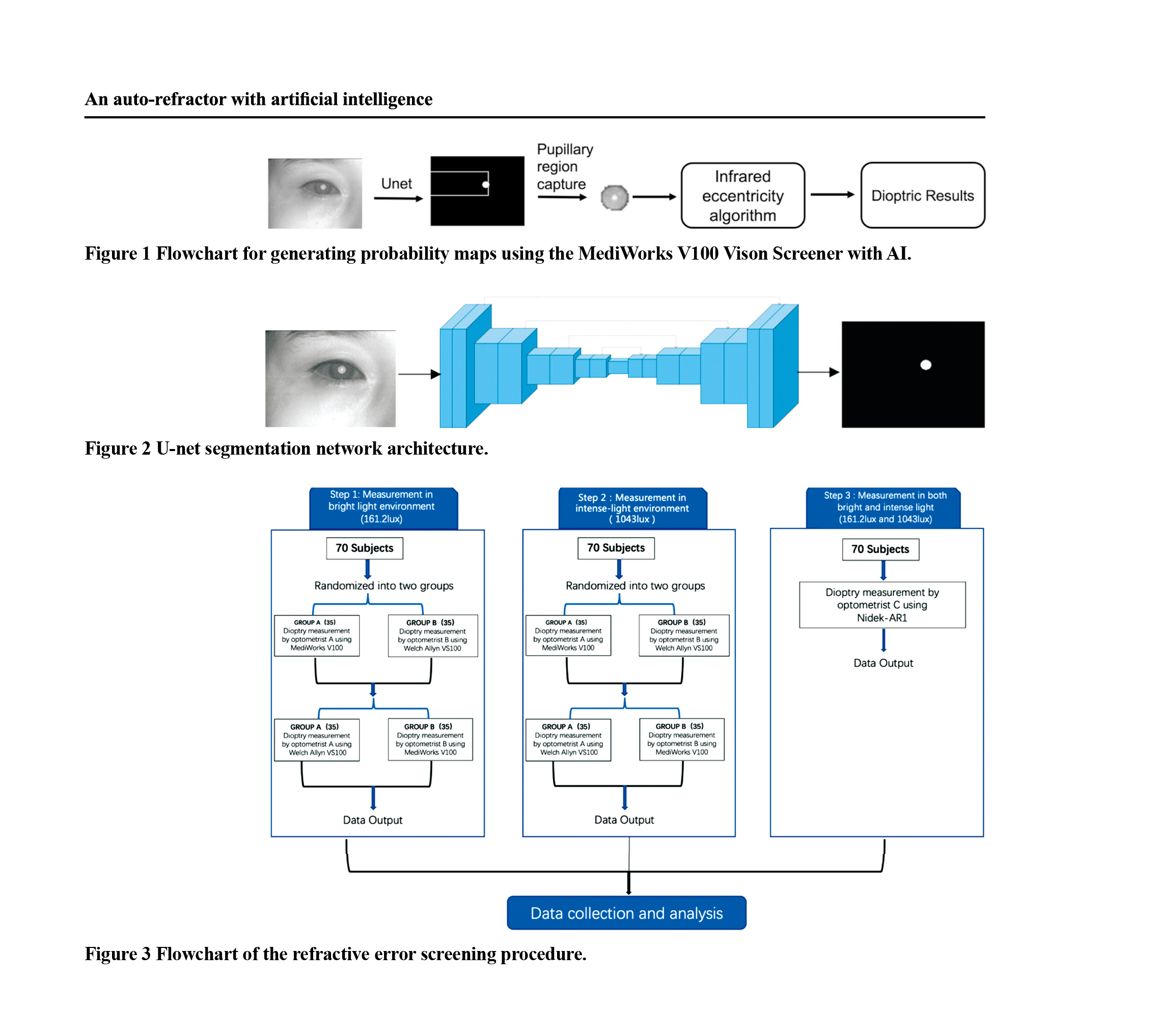

As AI models continue to evolve, evaluating their performance has become a crucial part of their development. LangChain, a popular framework for building applications on top of large language models, provides built-in evaluation metrics for assessing AI output. But have you ever wondered how these metrics were created and what they measure? In this article, we’ll delve into LangChain’s built-in eval metrics, exploring their significance and how they correlate with one another.

Correlation Analysis: Uncovering Hidden Patterns

A correlation matrix analysis revealed some fascinating insights into the relationships between LangChain’s built-in metrics. One striking correlation is between Helpfulness and Coherence, with a correlation coefficient of 0.46, a moderate positive relationship. This suggests that coherent responses tend to be perceived as more helpful, emphasizing the importance of logical structure in AI-generated content.

Correlation analysis reveals hidden patterns in LangChain’s built-in metrics.


Another notable correlation is between Controversiality and Criminality, with a correlation coefficient of 0.44. Responses flagged as controversial also tend to be flagged as criminal, and vice versa, which suggests that the two criteria partly overlap in what they detect.

Coherence vs. Depth: What Do Users Prefer?

Interestingly, while Coherence correlates with Helpfulness, Depth shows no comparable correlation. This might suggest that users prefer clear, concise answers over detailed ones, particularly in contexts where quick solutions are valued over comprehensive ones.

The trade-off between coherence and depth in AI-generated content.


LangChain’s Built-In Metrics: A Closer Look

So, what do these built-in metrics entail, and why were they created? Our hypothesis is that they were designed to describe AI output in terms of theoretical use-case goals, with each metric capturing a distinct quality so that correlations between metrics are avoided where possible.

The metrics include:

- Conciseness

- Detail

- Relevance

- Coherence

- Harmfulness

- Insensitivity

- Helpfulness

- Controversiality

- Criminality

- Depth

- Creativity
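In LangChain these criteria are exposed through its criteria evaluator, for example `load_evaluator("criteria", criteria="conciseness")`, whose `evaluate_strings` method returns a dict containing a `reasoning` string, a `value` of "Y" or "N", and a binary `score`. The minimal sketch below assumes results in that shape, wrapped with hypothetical `response_id` and `criterion` fields, and aggregates them into a per-metric pass rate plus per-response score columns. The result rows are invented for illustration, not real evaluator output.

```python
from collections import defaultdict

# Hypothetical evaluator results: one dict per (response, criterion) pair.
# "score" mirrors the binary 1/0 score; None stands for an unparsed verdict.
# "response_id" and "criterion" are hypothetical wrapper fields, not LangChain output.
results = [
    {"response_id": 0, "criterion": "conciseness", "score": 1},
    {"response_id": 0, "criterion": "coherence", "score": 1},
    {"response_id": 1, "criterion": "conciseness", "score": 0},
    {"response_id": 1, "criterion": "coherence", "score": 1},
    {"response_id": 2, "criterion": "conciseness", "score": None},
    {"response_id": 2, "criterion": "coherence", "score": 0},
]


def pass_rates(rows):
    """Fraction of responses meeting each criterion, ignoring unparsed (None) scores."""
    totals = defaultdict(lambda: [0, 0])  # criterion -> [sum of scores, count]
    for row in rows:
        if row["score"] is None:
            continue
        totals[row["criterion"]][0] += row["score"]
        totals[row["criterion"]][1] += 1
    return {name: s / n for name, (s, n) in totals.items()}


def score_columns(rows):
    """Pivot to {criterion: {response_id: score}} for later correlation analysis."""
    columns = defaultdict(dict)
    for row in rows:
        columns[row["criterion"]][row["response_id"]] = row["score"]
    return dict(columns)


print(pass_rates(results))
print(score_columns(results))
```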

Using the standard SQuAD dataset as a baseline, we evaluated how output from OpenAI’s GPT-3.5-Turbo model differed from the dataset’s ground-truth answers.

LangChain’s built-in metrics for evaluating AI output.
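The evaluation described above can be sketched as a loop that grades each model prediction against its ground-truth answer on every criterion. This is a minimal sketch under stated assumptions: the records are hypothetical SQuAD-style examples, not real dataset rows, and `toy_grade` is a hypothetical keyword-based stand-in for the LLM grader so that the code runs offline. In a real LangChain setup that role would be played by an evaluator returned by `load_evaluator`, with reference-aware grading available through the `"labeled_criteria"` evaluator type.

```python
from typing import Callable

# Hypothetical SQuAD-style records pairing a question with its ground-truth
# answer and a model prediction. Real SQuAD examples also carry a context
# passage and answer offsets, omitted here for brevity.
records = [
    {"id": "q1", "question": "What is the capital of France?",
     "reference": "Paris",
     "prediction": "The capital of France is Paris."},
    {"id": "q2", "question": "How many legs does a spider have?",
     "reference": "eight",
     "prediction": "A spider has eight legs, which it uses for walking, climbing, "
                   "and in many species for sensing vibrations in its web."},
]


def toy_grade(criterion: str, prediction: str, reference: str) -> int:
    """Hypothetical stand-in for an LLM grader; returns 1 (met) or 0 (not met).

    These heuristics are illustrative only and are not how LangChain's
    evaluators reach a verdict.
    """
    if criterion == "relevance":
        # Met if the ground-truth answer appears in the prediction.
        return int(reference.lower() in prediction.lower())
    if criterion == "conciseness":
        # Met if the prediction is at most ten words long.
        return int(len(prediction.split()) <= 10)
    raise ValueError(f"no toy rule for criterion {criterion!r}")


def evaluate(rows, criteria, grade: Callable[[str, str, str], int]):
    """Score every prediction on every criterion against its ground truth."""
    return {
        row["id"]: {c: grade(c, row["prediction"], row["reference"]) for c in criteria}
        for row in rows
    }


table = evaluate(records, ["relevance", "conciseness"], toy_grade)
print(table)
```

Because `evaluate` takes the grader as a parameter, replacing the toy stub with a real evaluator changes only the `grade` argument, and the resulting table (one row of scores per question) is the shape a correlation analysis works from.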

Conclusion

LangChain’s built-in eval metrics offer a valuable framework for assessing AI output. By understanding the correlations and differences between these metrics, we can better design AI systems that meet user preferences and goals. As AI continues to evolve, the importance of evaluating and refining these metrics will only continue to grow.

The future of AI evaluation and refinement.
