Deciphering Doubt: Navigating Uncertainty in LLM Responses

Large language models (LLMs) are uncertain in two distinct ways, and I find the two are easy to conflate. Epistemic uncertainty comes from the model lacking knowledge about the correct answer; aleatoric uncertainty comes from irreducible randomness, such as a query that has several valid answers. In practice the line between them blurs, which makes it crucial to develop methods that can quantify each and tell them apart.

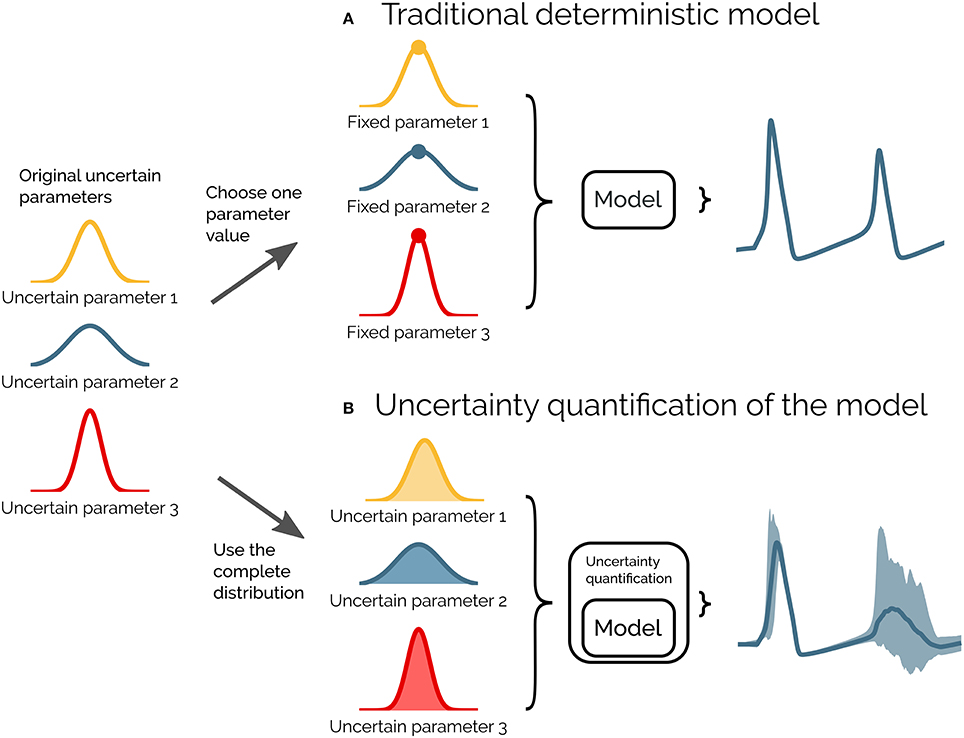

The Current State of Uncertainty Quantification

Existing methods for detecting hallucinations in LLMs include the probability of the greedy response (T0), the semantic-entropy method (S.E.), and the self-verification method (S.V.), and each has limitations. The probability of the greedy response is sensitive to the size of the label set: when a query has many valid answers, even the most likely one can receive only a small share of the probability. The semantic-entropy method relies on first-order scores that do not consider the joint distribution of responses. The self-verification method, on the other hand, does not account for the full range of possible responses the model can generate.
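
To make the label-set sensitivity concrete, here is a minimal sketch that computes the greedy probability and the entropy of a response distribution. The two toy distributions and the function names are assumptions made for illustration; they are not taken from any benchmark or from the methods' reference implementations.

```python
import math

def greedy_probability(dist):
    """Probability of the single most likely response."""
    return max(dist.values())

def entropy(dist):
    """Shannon entropy (in nats) of a response distribution."""
    return -sum(p * math.log(p) for p in dist.values() if p > 0)

# Hypothetical single-label query: one valid answer, model is sure of it.
single = {"Paris": 1.0}

# Hypothetical multi-label query: four equally valid answers,
# and the model spreads its probability evenly across them.
multi = {name: 0.25 for name in ["a", "b", "c", "d"]}

for label, dist in [("single-label", single), ("multi-label", multi)]:
    print(label, greedy_probability(dist), entropy(dist))
```

In both cases the model knows the valid answers, yet the greedy probability falls from 1.0 to 0.25 and the entropy rises from 0 to log 4. A first-order score alone cannot tell this apart from a model that is guessing.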

Uncertainty quantification in LLMs

A Novel Approach to Uncertainty Quantification

To overcome these limitations, researchers have proposed an approach that builds a joint distribution over multiple responses from the LLM to a specific query using iterative prompting: the model is asked the query repeatedly, with its earlier responses included in the prompt. This procedure yields an information-theoretic metric of epistemic uncertainty that is insensitive to aleatoric uncertainty. The metric is the mutual information (MI) of the joint distribution of responses, which measures how strongly later responses depend on earlier ones.
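
A minimal sketch of the idea for two responses, assuming the response probabilities are already known. The function names and toy probabilities are hypothetical; in practice the distributions would have to be estimated from the model, and this is not the authors' implementation.

```python
import math

def joint_from_iterative_prompting(first, second_given_first):
    """Chain rule: p(y1, y2) = p(y1 | query) * p(y2 | query, y1)."""
    joint = {}
    for y1, p1 in first.items():
        for y2, p2 in second_given_first[y1].items():
            joint[(y1, y2)] = p1 * p2
    return joint

def mutual_information(joint):
    """MI (in nats) between the first and second response."""
    m1, m2 = {}, {}
    for (y1, y2), p in joint.items():
        m1[y1] = m1.get(y1, 0.0) + p  # marginal of the first response
        m2[y2] = m2.get(y2, 0.0) + p  # marginal of the second response
    return sum(
        p * math.log(p / (m1[y1] * m2[y2]))
        for (y1, y2), p in joint.items()
        if p > 0
    )

first = {"A": 0.5, "B": 0.5}

# Assumed aleatoric case: two valid answers, and seeing the first
# response in the prompt does not change the second.
stable = {"A": {"A": 0.5, "B": 0.5}, "B": {"A": 0.5, "B": 0.5}}

# Assumed epistemic case: the model simply repeats whatever answer
# appears in the prompt, so the responses are fully dependent.
swayed = {"A": {"A": 1.0}, "B": {"B": 1.0}}

mi_stable = mutual_information(joint_from_iterative_prompting(first, stable))
mi_swayed = mutual_information(joint_from_iterative_prompting(first, swayed))
print(mi_stable, mi_swayed)
```

Both cases have the same 50/50 distribution for the first response, so an entropy score treats them identically. The MI is zero when the responses are independent and log 2 when the second response copies the first, which is the dependence the metric is meant to pick up.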

Iterative prompting for uncertainty quantification

A Robust Tool for Hallucination Detection

The proposed approach has been shown to outperform traditional entropy-based methods, especially on datasets that mix single-label and multi-label queries. Once a threshold on the MI score is set through a calibration procedure, the method shows stronger performance in detecting hallucinations and improves overall response accuracy.
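
The calibration procedure is not spelled out here, so the following is only one plausible sketch: pick the threshold that minimizes errors on a small labeled calibration set, then flag any response whose score exceeds it. The scores, labels, and function name are made up for illustration.

```python
def calibrate_threshold(scores, is_hallucination):
    """Return the threshold t that minimizes errors of the rule
    'flag as hallucination if score > t' on the calibration set."""
    # Candidate thresholds: every observed score, plus one value
    # below all scores (which flags everything).
    candidates = [min(scores) - 1.0] + sorted(set(scores))
    best_t, best_err = None, None
    for t in candidates:
        err = sum((s > t) != h for s, h in zip(scores, is_hallucination))
        if best_err is None or err < best_err:
            best_t, best_err = t, err
    return best_t

# Hypothetical calibration data: an uncertainty score per query and
# whether the model's response was judged a hallucination.
scores = [0.01, 0.03, 0.05, 0.40, 0.55, 0.70]
labels = [False, False, False, True, True, True]

threshold = calibrate_threshold(scores, labels)
flags = [s > threshold for s in scores]
print(threshold, flags)
```

Minimizing raw error is a design choice made for brevity. A real deployment might instead fix a target precision or recall and choose the threshold that meets it on held-out data.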

Hallucination detection using mutual information

Conclusion

The proposed approach advances uncertainty quantification in LLMs by distinguishing between epistemic and aleatoric uncertainties. By providing a more nuanced understanding of LLM confidence, this method enhances the detection of hallucinations and improves overall response accuracy. As we continue to push the boundaries of LLM capabilities, it is crucial that we develop robust tools for uncertainty quantification to ensure the reliability and truthfulness of their responses.

Uncertainty in LLM responses