Dataiku Launches LLM Cost Guard: Revolutionizing Cost Monitoring for Large Language Models

Dataiku has introduced LLM Cost Guard, a solution for monitoring and managing the usage and costs of enterprise large language models (LLMs). It is meant to give organizations the spending insight they need for strategic decision-making and financial optimization.

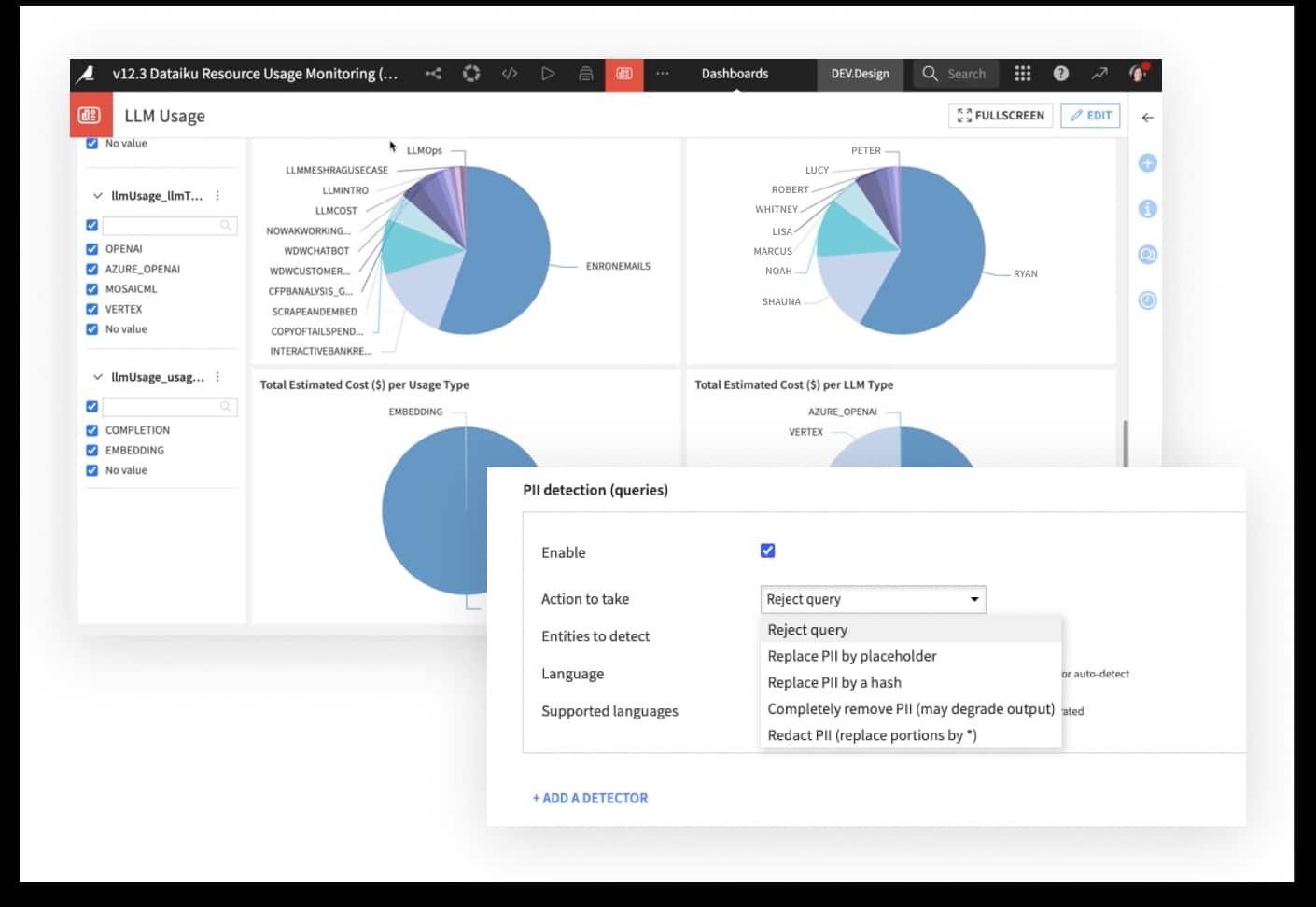

Dataiku LLM Cost Guard

The surge in LLM adoption within corporate settings has made cost management and transparency a priority. Projections indicate that Generative AI will account for 55% of AI spending by 2030, which underscores the need for robust financial oversight of Generative AI initiatives.

LLM Mesh, a key component of Dataiku’s ecosystem, provides connectivity to leading LLM providers. LLM Cost Guard builds on it by letting organizations track and categorize LLM expenses, set up early warning mechanisms, and gain granular insight into usage patterns and expenditure.
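To make the idea concrete, here is a minimal sketch of what tracking spend by category and raising an early warning could look like in general terms. It is not Dataiku's API or implementation: the `CostTracker` class, its method names, the category labels, and the per-token prices are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class CostTracker:
    """Hypothetical per-category LLM spend tracker (illustrative only)."""

    budget_usd: float
    alert_fraction: float = 0.8  # warn once 80% of the budget is used
    spend_by_category: dict = field(default_factory=lambda: defaultdict(float))

    def record(self, category, prompt_tokens, completion_tokens,
               usd_per_1k_prompt, usd_per_1k_completion):
        """Add the cost of one LLM call to its category and return that cost."""
        cost = (prompt_tokens / 1000) * usd_per_1k_prompt
        cost += (completion_tokens / 1000) * usd_per_1k_completion
        self.spend_by_category[category] += cost
        return cost

    @property
    def total_spend(self):
        """Total spend across all categories, in USD."""
        return sum(self.spend_by_category.values())

    def should_alert(self):
        """True once total spend reaches the early-warning threshold."""
        return self.total_spend >= self.alert_fraction * self.budget_usd


# Example with made-up prices: $0.01 per 1k prompt tokens,
# $0.03 per 1k completion tokens, and a $10 budget.
tracker = CostTracker(budget_usd=10.0)
tracker.record("support-chatbot", 200_000, 50_000, 0.01, 0.03)
tracker.record("doc-summarizer", 300_000, 60_000, 0.01, 0.03)
print(dict(tracker.spend_by_category))
print("alert:", tracker.should_alert())
```

In this sketch the warning fires as a fraction of a fixed budget; a real deployment would likely also break costs down by project, user, or provider, as the product description suggests.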

Florian Douetteau, Dataiku’s co-founder and CEO, explained the motivation behind LLM Cost Guard: “We consistently hear apprehensions from business leaders regarding the potential costs associated with Generative AI endeavors and the inherent cost variability. With LLM Cost Guard, our aim is to demystify these expenses, empowering leaders to concentrate on innovation with confidence.”

More information about LLM Cost Guard, including how to get access, is available on Dataiku’s official site.