Common Corpus: A Large Public Domain Dataset for Training LLMs

In the ever-evolving realm of Artificial Intelligence, the discussion around the necessity of copyrighted materials in the training of top AI models has been a topic of contention. OpenAI’s assertion to the UK Parliament in 2023 that training models without copyrighted content was ‘impossible’ caused a stir in the industry, leading to legal disputes and ethical dilemmas. However, recent advancements have challenged this notion, presenting compelling evidence that large language models (LLMs) can indeed be trained without relying on copyrighted materials.

The Common Corpus initiative has emerged as a groundbreaking public domain dataset designed specifically for training LLMs. Spearheaded by Pleias and a team of researchers specializing in LLM pretraining, AI ethics, and cultural heritage, this international collaboration has disrupted the traditional AI landscape, ushering in a new era of AI methodologies. This diverse and multilingual dataset showcases the feasibility of training LLMs without the constraints of copyright issues, signifying a pivotal shift in AI practices.

A large language model

A large language model

Fairly Trained, a prominent non-profit organization within the AI sector, has taken a definitive stance towards promoting fairer AI practices. The organization recently granted its inaugural certification to an LLM named KL3M, developed by the Chicago-based legal tech consultancy startup, 273 Ventures. KL3M not only represents a model but also stands as a symbol of optimism for the future of ethical AI. The meticulous certification process, overseen by Fairly Trained’s CEO, Ed Newton-Rex, instills confidence in the potential for cultivating fair AI, emphasizing that training LLMs ethically is indeed achievable.

Kelvin Legal DataPack, an intricately curated training dataset by Fairly Trained, comprises thousands of legally reviewed documents to ensure compliance with copyright regulations. Despite its substantial size of approximately 350 billion tokens, this dataset underscores the power of curation. While it may not match the scale of datasets compiled by entities like OpenAI, its performance remains exceptional. Jillian Bommarito, the founder of the company, attributes the success of the KL3M model to the rigorous vetting process applied to the data. The utilization of curated datasets to enhance AI models, tailoring them precisely to their intended functions, presents an exciting prospect. 273 Ventures has now opened a waitlist for clients eager to leverage this invaluable resource.

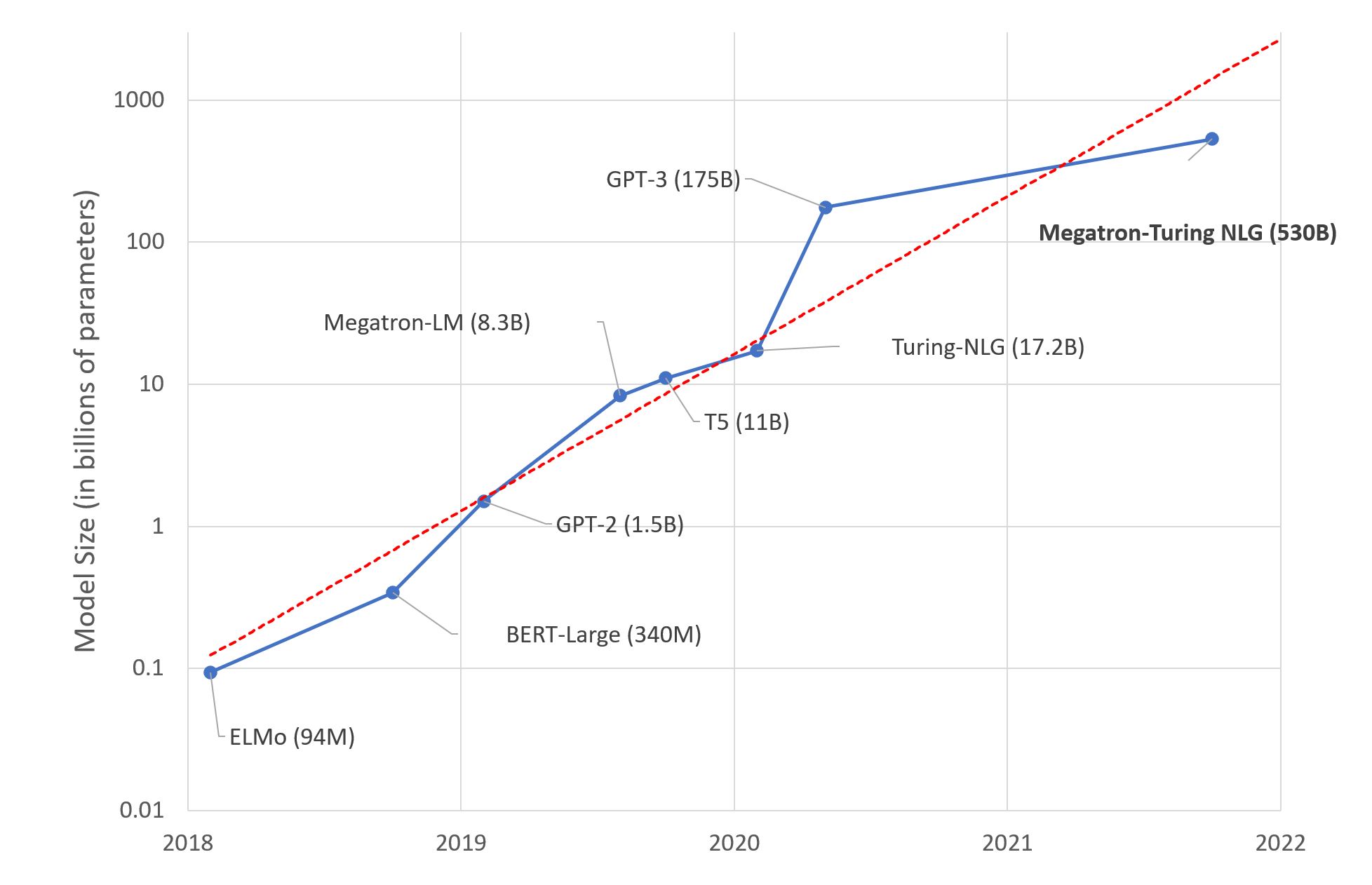

Researchers involved in the Common Corpus project demonstrated boldness by employing a text collection equivalent in size to the data used for training OpenAI’s GPT-3 model. This dataset has been made accessible on the open-source AI platform, Hugging Face. While Fairly Trained has currently certified only 273 Ventures’ LLMs, the emergence of initiatives like Common Corpus and models such as KL3M signifies a notable shift in the AI landscape. Advocates for fairer AI, particularly those advocating for artists impacted by data scraping practices, view these initiatives as pivotal in reshaping industry norms.

Fairly Trained’s recent certifications, including those for the Spanish voice-modulation startup VoiceMod and the heavy-metal AI band Frostbite Orckings, demonstrate a diversification beyond LLMs, hinting at a broader scope for AI certification. However, while the Kelvin Legal DataPack boasts numerous merits, it also presents limitations. Although the dataset comprises thousands of legally compliant documents and serves as a valuable resource, it’s essential to acknowledge that much of the public domain data available is outdated, especially in regions like the US where copyright protection extends for extended periods post the author’s demise. Consequently, this dataset may not be ideal for grounding an AI model in current affairs.