With a notepad in one hand and a camera in the other, this journalist delves into the depths of AI marvels, uncovering the untold stories woven between lines of code.

In an era where artificial intelligence (AI) is increasingly embedded into the fabric of digital services, the security of these systems is paramount. Cloudflare, a leading web infrastructure and security company, has announced the launch of its “Firewall for AI,” a pioneering solution designed to shield applications utilizing large language models (LLMs) from a spectrum of cyber threats.

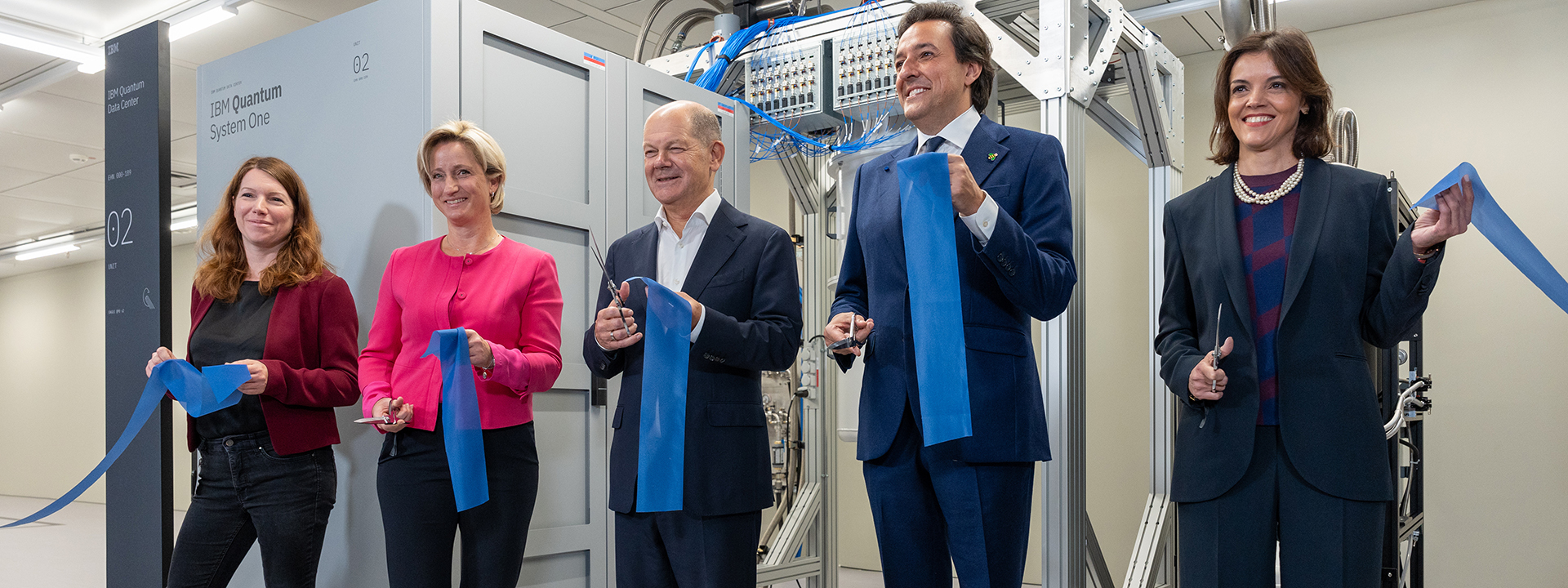

A Dual-Layered Defense Mechanism

A glimpse into Cloudflare’s innovative approach to AI security

A glimpse into Cloudflare’s innovative approach to AI security

The Firewall for AI introduces two critical features: Advanced Rate Limiting and Sensitive Data Detection. The former allows enterprises to set a cap on the number of requests an individual IP address or API key can make, effectively mitigating the risk of distributed denial of service (DDoS) attacks that could cripple the functionality of LLMs. This proactive measure ensures that LLMs can continue to process legitimate requests without interruption.

Sensitive Data Detection, on the other hand, focuses on preserving the confidentiality of information processed by LLMs. By scanning for and preventing the inclusion of sensitive details like credit card numbers and API keys in LLM responses, Cloudflare adds an essential layer of data protection. This feature addresses a critical concern surrounding LLMs — the inadvertent leakage of confidential information.

Beyond the Basics: The Future of AI Security

Cloudflare’s roadmap for the Firewall for AI includes the development of a prompt validation feature aimed at thwarting prompt injection attacks. These sophisticated cyber threats involve crafting prompts that manipulate LLMs into generating inappropriate or illegal content. The upcoming feature will evaluate each prompt, assigning a score to gauge its potential as a security threat. This scoring system will enable customers to fine-tune their security protocols, blocking malicious prompts based on predefined criteria.

Cloudflare’s Firewall for AI in action, preventing a prompt injection attack

Cloudflare’s Firewall for AI in action, preventing a prompt injection attack

Daniele Molteni, Cloudflare’s group product manager, emphasizes the flexibility of the Firewall for AI, noting its compatibility with both public and private LLMs, regardless of their hosting environment. As long as the traffic is proxied through Cloudflare, the firewall can provide its protective measures.

A Timely Solution in an Evolving Landscape

The introduction of Cloudflare’s Firewall for AI comes at a critical juncture in the evolution of AI technologies. With tech giants integrating LLMs into a wide array of products and services, the potential for errors, fabrications, and security vulnerabilities has surged. Cloudflare’s initiative represents a significant step towards establishing a secure ecosystem for AI applications, aligning with broader industry efforts to fortify AI against emerging threats.

As the AI landscape continues to evolve, the conversation around AI security is gaining momentum. With major tech conferences like RSA and Black Hat on the horizon, Cloudflare’s Firewall for AI sets the stage for a broader discussion on the need for robust security frameworks tailored to the unique challenges posed by AI technologies.

In a digital age where AI’s potential is boundless, ensuring the security of these systems is not just a necessity but a responsibility. Cloudflare’s Firewall for AI marks a promising advance in the quest to safeguard the future of AI, ensuring that as we push the boundaries of what’s possible, we do so with security at the forefront.

Photo by

Photo by