Advancing AI’s Causal Reasoning: Introducing CausalBench for a New Era of Evaluation

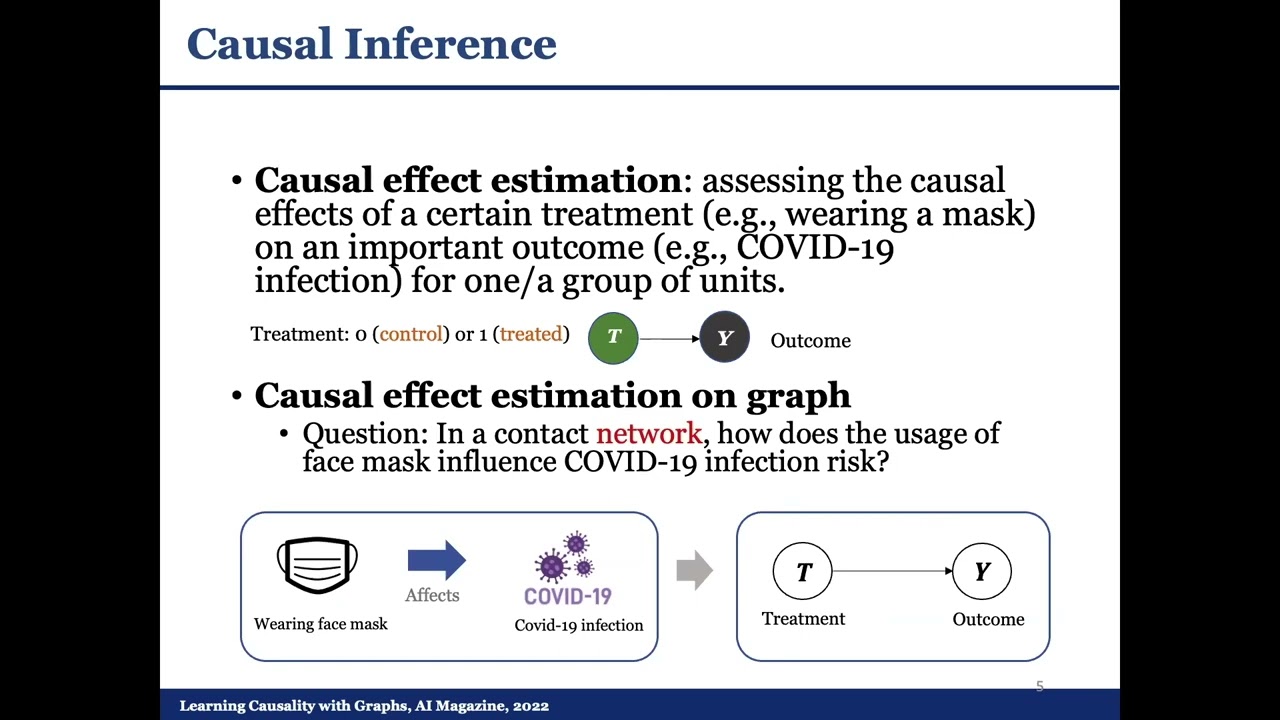

Causal learning is gaining traction as a critical area of focus in artificial intelligence (AI), drawing researchers to explore the principles that govern real-world data distributions. A model’s grasp of causality can significantly affect how well it justifies decisions, adapts to novel data, and reasons about hypothetical scenarios. However, evaluating the causal understanding of large language models (LLMs) remains difficult, because existing benchmarks lack the depth needed to probe it.

Existing assessments of LLM performance have often relied on rudimentary benchmarks and correlation tasks built on limited datasets. Because they lack complexity and diversity in both tasks and data, these evaluations give an incomplete view of LLM capabilities. Earlier frameworks that incorporated structured data raised the bar, but they did not fully integrate that data with background knowledge, which limited how well they could probe LLMs in complex real-world scenarios.

Researchers from Hong Kong Polytechnic University and Chongqing University have addressed this gap with CausalBench, a benchmarking framework designed to rigorously assess the causal learning capabilities of LLMs. Unlike earlier benchmarks, CausalBench layers tasks of increasing complexity across a broad spectrum, testing how well LLMs handle causal reasoning in diverse contexts.

Understanding Causality in AI Frameworks

CausalBench evaluates LLMs on several datasets, including Asia, Sachs, and Survey. Models are asked to identify correlations, construct causal structures, and determine the direction of causal relationships. Performance is measured with F1 score, accuracy, Structural Hamming Distance (SHD), and Structural Intervention Distance (SID). All evaluations are zero-shot, so they measure each model’s intrinsic causal reasoning without any task-specific fine-tuning.
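
To make the structural metrics more concrete, here is a minimal Python sketch that scores a predicted causal graph against a ground truth using directed-edge F1 and SHD. It assumes graphs are represented as sets of directed (cause, effect) pairs with no two-way edges, uses the classic Asia network with bnlearn-style variable names as the ground truth, and counts a reversed edge as a single SHD error. CausalBench’s own scoring code, variable names, and conventions may differ, and the predicted graph below is a made-up illustration, not a reported model output. SID is left out because it depends on interventional distributions and is considerably more involved.

```python
# Ground-truth edges of the classic Asia ("lung cancer") network.
# Assumption: bnlearn-style variable names; CausalBench may label them differently.
ASIA_EDGES = {
    ("asia", "tub"), ("smoke", "lung"), ("smoke", "bronc"),
    ("tub", "either"), ("lung", "either"),
    ("either", "xray"), ("either", "dysp"), ("bronc", "dysp"),
}


def edge_f1(true_edges, pred_edges):
    """F1 over directed edges: an edge counts only if its direction is also right."""
    tp = len(true_edges & pred_edges)
    if tp == 0:
        return 0.0
    precision = tp / len(pred_edges)
    recall = tp / len(true_edges)
    return 2 * precision * recall / (precision + recall)


def shd(true_edges, pred_edges):
    """Structural Hamming Distance: missing + extra + reversed edges.

    Assumption: a reversal costs 1 (some papers count it as 2), and neither
    graph contains both (a, b) and (b, a).
    """
    true_skel = {frozenset(e) for e in true_edges}
    pred_skel = {frozenset(e) for e in pred_edges}
    missing = len(true_skel - pred_skel)  # true adjacencies the prediction lacks
    extra = len(pred_skel - true_skel)    # predicted adjacencies not in the truth
    # Adjacencies present in both graphs but pointing the wrong way.
    reversed_edges = sum(
        1 for (a, b) in pred_edges
        if frozenset((a, b)) in true_skel and (a, b) not in true_edges
    )
    return missing + extra + reversed_edges


# Hypothetical prediction: one edge reversed, one missing, one spurious.
predicted = (ASIA_EDGES - {("asia", "tub"), ("bronc", "dysp")}) | {
    ("tub", "asia"), ("smoke", "xray"),
}

print(edge_f1(ASIA_EDGES, predicted))  # 0.75
print(shd(ASIA_EDGES, predicted))      # 3
```

Directed-edge F1 rewards getting both the adjacency and its direction right, while SHD counts how many edits separate the two graphs, so a lower SHD is better. For a correlation-style task that ignores direction, one could instead compute F1 over the undirected `frozenset` pairs.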

Initial CausalBench results reveal large performance gaps across LLMs. Some models, such as GPT-4 Turbo, achieved F1 scores above 0.5 on correlation tasks with the Asia and Sachs datasets. Performance dropped markedly, however, on the more complex causality assessments involving the Survey dataset, where many models averaged F1 scores below 0.3. These findings show how LLM capabilities vary with the level of causal complexity, providing a clear measure of success while pointing to areas for future refinement in model training and algorithm development.

“The development of CausalBench marks a pivotal step in advancing our understanding of AI’s capabilities in causal reasoning. It lays the groundwork for future improvements in AI systems,” said a representative from the research team.

Evaluating AI Performance Through Causal Benchmarks

In conclusion, CausalBench, introduced by researchers from Hong Kong Polytechnic University and Chongqing University, represents a significant step forward in measuring LLMs’ causal learning capabilities. By combining a rich variety of datasets with demanding evaluation tasks, the work sheds light on the strengths and weaknesses of various LLMs in comprehending causality. The findings underscore the need for continued improvement in model training, which is vital for real-world applications where informed decision-making and logical inference grounded in causality are paramount. Looking ahead, this research could pave the way for AI systems capable of more sophisticated reasoning and decision-making.

The Promising Trajectory of AI in Understanding Causality

To stay up to date on advances in the field, readers are encouraged to explore further research and discussions on causal reasoning and AI development.