Bridging Minds and Machines: The Future of Brain-Computer Interfaces Powered by AI

A groundbreaking collaboration between the East China University of Science and Technology (ECUST) and tech giant Baidu is opening a new chapter for brain-computer interfaces (BCIs). The initiative integrates large language models (LLMs) into BCI systems, giving them capabilities that could transform how people interact with technology.

Cutting-edge advancements in BCI technology are on the horizon.

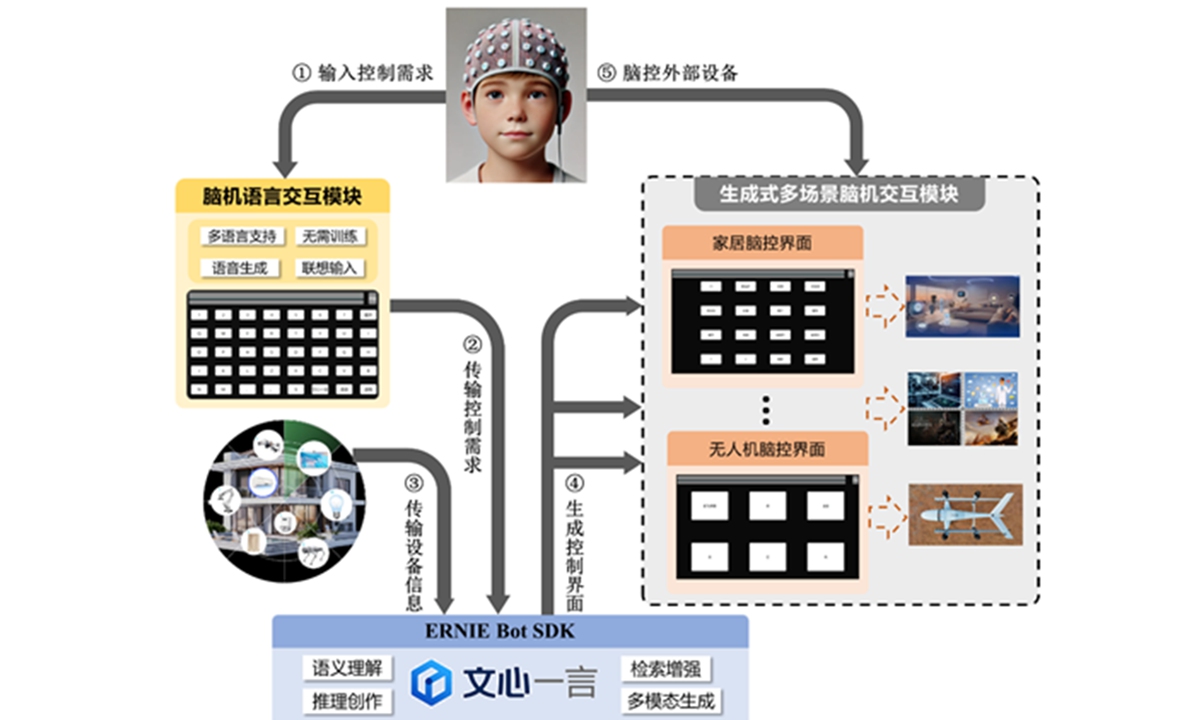

The team, led by Professor Jin Jing, has developed a framework in which a single BCI adapts dynamically across multiple application scenarios. Users express their needs through a brain-language interaction module that works like a universal remote, interfacing with a range of devices. For people with limited mobility, this adaptability could significantly expand their control over their surroundings.

A Leap Toward Multifaceted BCI Systems

Consider a smart home in which a paralyzed person uses a single BCI powered by Baidu’s Ernie Bot, which acts much like an AI assistant ready to respond to their commands. Traditionally, building a BCI system meant designing a tailored interface for each device, a process that was cumbersome and limited accessibility.

With Ernie Bot in the loop, this individualized setup is no longer required: the system automatically generates the necessary interfaces, ready for immediate use. As Liu Conglin, a product operations manager at Baidu, explains, the integration translates users’ intentions into actionable controls, which could substantially change home automation.

“The framework integrates Baidu’s LLM, Ernie Bot, which accurately analyzes user intent,” Professor Jin noted, describing how the LLM’s reasoning lets the BCI handle complex device-control needs. “Our system can automatically concoct the most suitable setups based on diverse scenarios.”

This development aligns with global trends in BCI technology. The London-based startup MindPortal Inc., for example, recently unveiled a non-invasive optical BCI that lets users interact with ChatGPT using only their thoughts. MindPortal’s work toward “telepathic” communication hints at the broad possibilities ahead for the field.

Unlike MindPortal’s approach, which enhances an existing BCI system, Jin’s team is targeting a persistent limitation of BCI technology itself: the need to build a dedicated interface for each device and scenario.

Challenges on the Horizon

Despite these strides, experts caution that broad, successful application of BCI systems is still some way off. Most current systems remain focused on medical uses, such as helping patients with amyotrophic lateral sclerosis (ALS) who struggle to communicate.

Professor Gao Xiaorong of Tsinghua University stresses that further development is essential. “The performance of BCIs is still relatively weak despite their promise. Medical applications notably shape the trajectory of BCI advancements due to their interdisciplinary nature.” Even so, modest improvements can substantially raise a patient’s quality of life by opening new ways to communicate.

Jin’s team is also pursuing additional applications, including drone control. Ideally, future versions of these BCIs would update in real time based on user needs and environmental context, making the technology truly responsive.

Ethical Dimensions and Future Prospects

As exciting as these possibilities are, they also raise ethical questions. The prospect of BCIs granting “superhuman” capacities, radically enhancing a person’s baseline functioning, underscores the need to address potential misuse and social imbalance. Implications for privacy, equity, and safety must be navigated carefully if the technology is to deliver on its promise without deepening existing inequities.

Jin says the goal is a user-friendly system that connects thoughts with actions seamlessly. “One could say the system has the potential to allow individuals to convert their intentions into actions with a mere flicker of thought.” However, he cautions against over-romanticizing this capability: it is grounded in practical applications that prioritize user control and comfort.

Jin’s team is applying for patents on the technology, hoping to spur further research and make BCI applications more accessible. The interplay between human and machine intelligence remains a frontier ripe for exploration.

Conclusion

The fusion of brain-computer interfaces with large language models points to a vision of human-computer interaction that is both immediate and profound. While the road to widespread adoption is full of challenges, the collaboration between ECUST and Baidu could bring major shifts in assistive technology.

As researchers work to redefine how people interact with technology, the implications are as vast as they are exciting. As Jin summarizes, translating thought into action is no longer merely an idea but a field of real potential waiting to unfold, and a testament to what can be achieved by bridging minds and machines.

Charting the course of BCI technology’s future.
