Breaking the Reversal Curse: Meta AI’s Novel Approach to LLM Training

Large language models have revolutionized natural language processing, providing machines with human-like language abilities. However, despite their prowess, these models grapple with a crucial issue - the Reversal Curse. This term encapsulates their struggle to comprehend logical reversibility, where they often need to deduce that if ‘A has a feature B,’ it logically implies ‘B is a feature of A.’ This limitation poses a significant challenge in the pursuit of truly intelligent systems.

Understanding the Reversal Curse

Understanding the Reversal Curse

At FAIR, Meta’s AI research division, scientists have delved into this issue, recognizing that the Reversal Curse is not just an academic concern. It’s a practical problem that hampers the effective use of LLMs in various applications, from automated reasoning to natural language understanding tasks. Despite their effectiveness in absorbing vast amounts of data, the traditional one-directional training methods need to improve in teaching LLMs the reversible nature of relationships within the data.

“The Reversal Curse is not just an academic concern. It’s a practical problem that hampers the effective use of LLMs in various applications.”

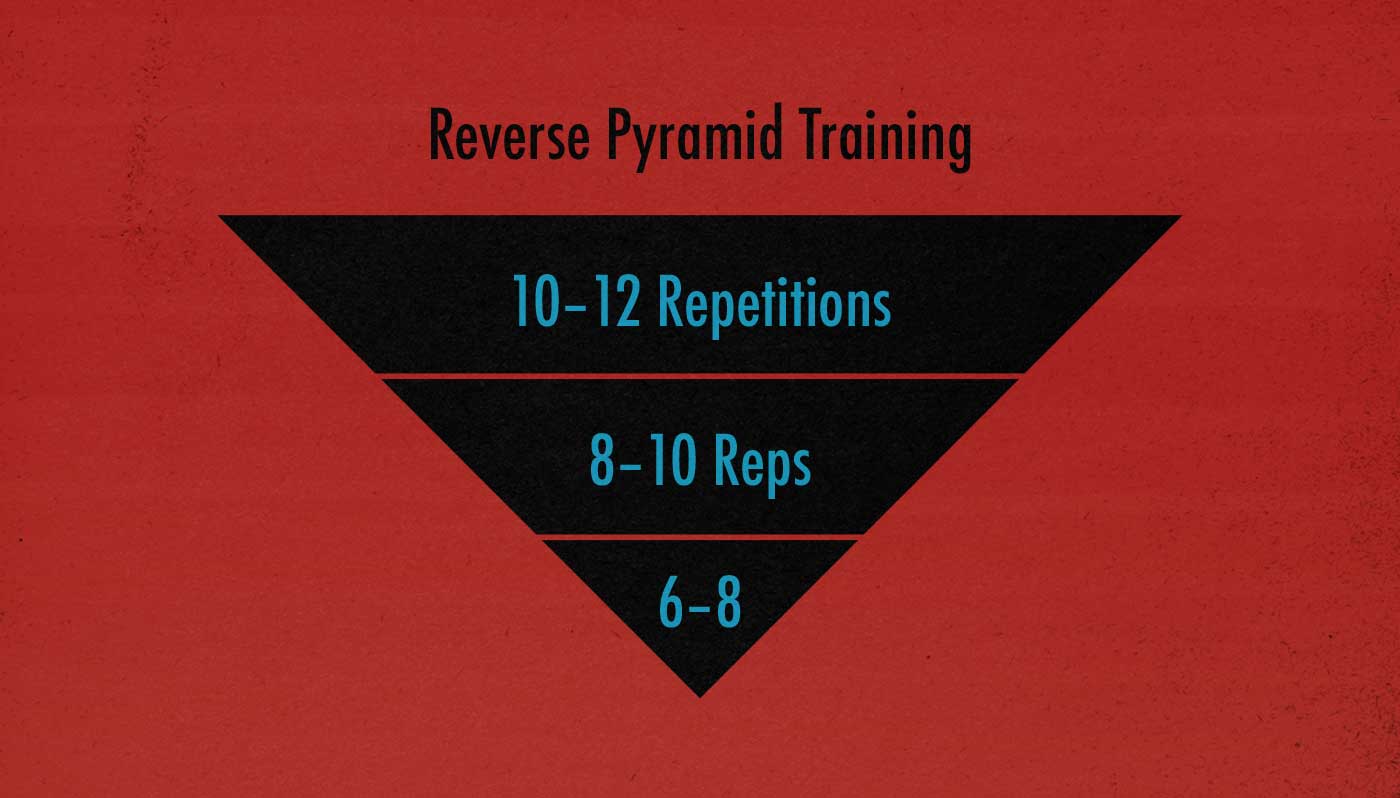

In response to this challenge, the Meta team has proposed a novel training strategy - reverse training. This approach ingeniously doubles the data’s utility by presenting information in original and reversed forms. For instance, alongside the standard training phrase ‘A has a feature B,’ the model would also encounter ‘B is a feature of A,’ effectively teaching it the concept of reversibility. This technique is akin to introducing a new language to the model, expanding its understanding and flexibility in handling language-based tasks.

Reverse training in action

Reverse training in action

The reverse training method was rigorously tested against traditional models in tasks designed to evaluate the understanding of reversible relationships. The results were telling. In experiments where models were tasked with identifying relationships in both directions, reverse-trained models displayed superior performance. For example, in the reversal task of connecting celebrities to their parents based on the training data, reverse-trained models achieved an accuracy improvement, registering a significant 10.4% accuracy in the more challenging ‘parent to celebrity’ direction, as opposed to 1.6% accuracy seen in models trained using conventional methods. Furthermore, these models enhanced performance in standard tasks, underscoring the versatility and efficiency of the reverse training approach.

Reverse training yields improved accuracy

Reverse training yields improved accuracy

This innovative methodology overcomes the Reversal Curse by training language models to recognize and interpret information in forward and backward formats. This breakthrough enhances their reasoning abilities, making them more adept at understanding and interacting with the world. The Meta team’s work exemplifies innovative thinking that pushes the boundaries of what machines can understand and achieve, contributing to the advancement of language modeling techniques.

Meta AI’s innovative approach

Meta AI’s innovative approach