The Quest for Longer Context Windows in Large Language Models

The race to extend the context windows of large language models (LLMs) is heating up. In just a few months, models have gone from supporting a few thousand tokens to over a million. But what does this mean for the future of AI research and enterprise applications?

The Need for Open-Source Long-Context LLMs

Companies like Gradient work with enterprise customers who want to integrate LLMs into their workflows. Even before Llama 3 came out, Gradient was running into context-length limits in its customer projects. For example, language models that help with programming tasks, often referred to as “coding copilots,” have become an important development tool in many companies. Standard coding copilots can generate small pieces of code at a time, such as a single function. Now, companies want to extend those capabilities to generating entire modules of code.

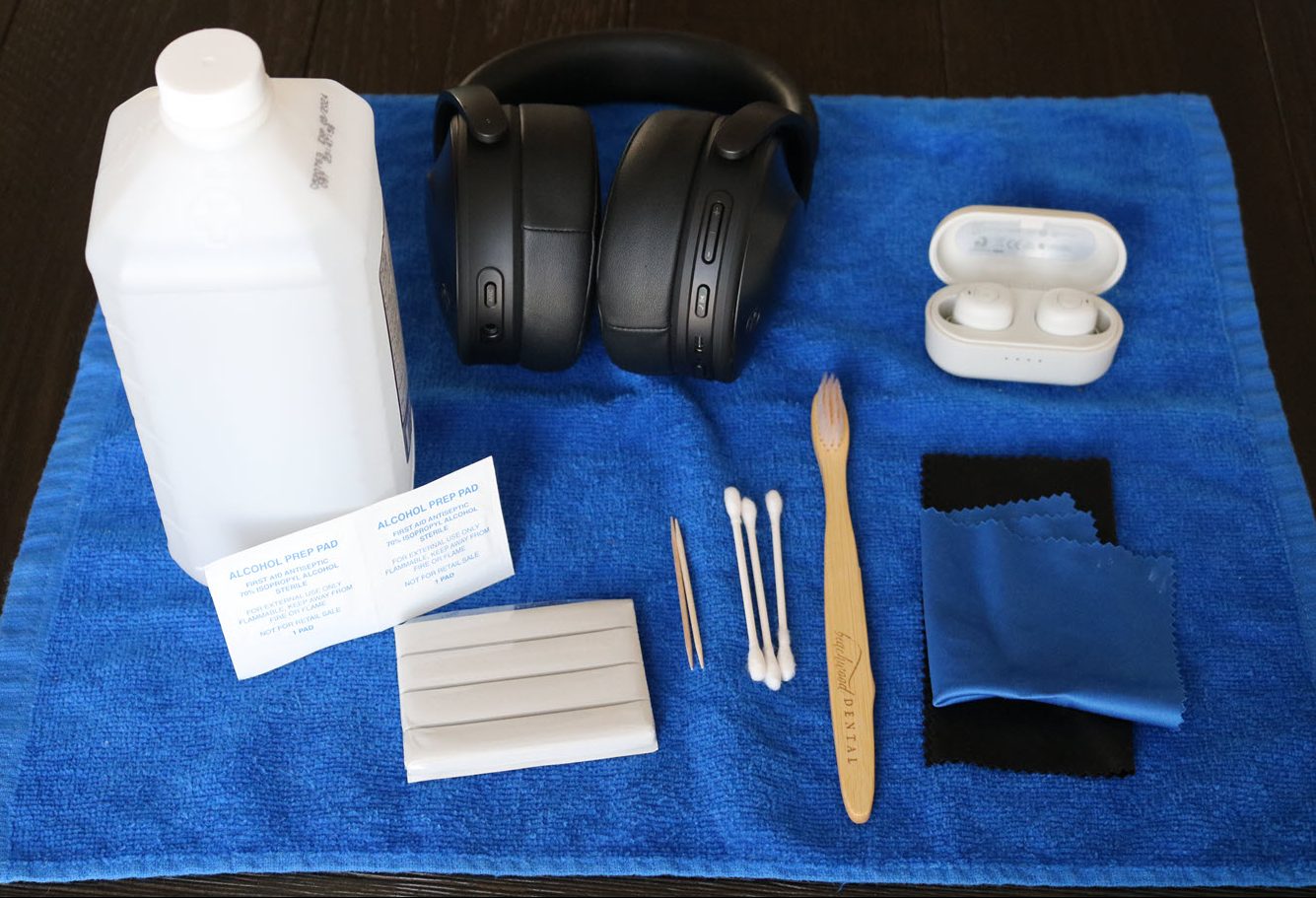

Image: A representation of a large language model’s context window

One way to do this would be to feed the codebase to the LLM piecemeal across multiple calls. But the process would be slow and complicated, and it would produce inaccurate results because the model never has access to the entire codebase at any given time.

“Being able to put entire code bases right into a language model context alleviates a lot of these problems because now the language model is able to do what it can do best, which is reason over everything in its working memory and provide an answer that is both more accurate and more efficient,” said Pekelis, a researcher at Gradient.
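To make the cost of the piecemeal approach concrete, here is a rough back-of-the-envelope sketch. The codebase size, window sizes, and the `calls_needed` helper are illustrative assumptions, not figures or code from Gradient.

```python
import math


def calls_needed(total_tokens: int, context_window: int, reserved_tokens: int = 0) -> int:
    """Estimate how many requests it takes to show a model `total_tokens` of input.

    `context_window` is the model's token limit per request, and
    `reserved_tokens` is the part of that window set aside for
    instructions and the model's reply (both hypothetical values here).
    """
    usable = context_window - reserved_tokens
    if usable <= 0:
        raise ValueError("reserved_tokens must be smaller than context_window")
    return math.ceil(total_tokens / usable)


# Hypothetical codebase of 600,000 tokens.
codebase_tokens = 600_000

short_window_calls = calls_needed(codebase_tokens, context_window=8_000, reserved_tokens=2_000)
long_window_calls = calls_needed(codebase_tokens, context_window=1_000_000, reserved_tokens=2_000)

print(short_window_calls, long_window_calls)  # prints: 100 1
```

Under these assumed numbers, the short-window model needs 100 separate calls, none of which sees more than about 1% of the code, while the long-window model can take in the whole codebase in a single request.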

Open Research and Collaboration

The commercialization of large language models has reduced the incentives for AI labs to share their research. However, this has not stopped the open research community from publishing its findings and contributing to the overall improvement of models. Gradient relied on many papers and open research from universities and institutes around the world.

Image: Distributed attention techniques helped increase the context length without exploding memory and compute costs

Gradient used techniques developed by Berkeley AI Research (BAIR) on distributed attention, which helped the team increase the context length without exploding memory and compute costs. The initial code implementation came from an open-source project from a research institute in Singapore, and the mathematical formulas that enabled the models to learn from long context windows came from an AI research lab in Shanghai.
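The article does not spell out how distributed attention works, so the following is only a minimal sketch of one idea that such schemes commonly build on: computing attention over keys and values one block at a time while carrying a small running state, so that no single step needs the whole sequence in memory. In a distributed setting, each block would typically live on a different device. This is not Gradient's or BAIR's code; the function names, sizes, and random data are all assumptions made for illustration.

```python
import math
import random


def score(query, key):
    """Scaled dot-product score between one query and one key."""
    return sum(q * k for q, k in zip(query, key)) / math.sqrt(len(query))


def full_attention(query, keys, values):
    """Reference version: softmax over all keys at once."""
    scores = [score(query, k) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    norm = sum(weights)
    return [sum(w * v[d] for w, v in zip(weights, values)) / norm
            for d in range(len(values[0]))]


def blockwise_attention(query, keys, values, block_size):
    """Same result, visiting keys/values one block at a time.

    Only a running max, a running normalizer, and a running weighted
    sum are kept between blocks.
    """
    dim = len(values[0])
    running_max = float("-inf")
    running_norm = 0.0
    acc = [0.0] * dim
    for start in range(0, len(keys), block_size):
        k_block = keys[start:start + block_size]
        v_block = values[start:start + block_size]
        scores = [score(query, k) for k in k_block]
        new_max = max(running_max, max(scores))
        # Rescale what was accumulated so far to the new reference max.
        rescale = math.exp(running_max - new_max)
        running_norm *= rescale
        acc = [a * rescale for a in acc]
        for s, v in zip(scores, v_block):
            w = math.exp(s - new_max)
            running_norm += w
            for d in range(dim):
                acc[d] += w * v[d]
        running_max = new_max
    return [a / running_norm for a in acc]


# Hypothetical toy data: one query attending over 64 positions.
rng = random.Random(0)
dim, seq_len = 4, 64
query = [rng.uniform(-1, 1) for _ in range(dim)]
keys = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(seq_len)]
values = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(seq_len)]

full = full_attention(query, keys, values)
blocked = blockwise_attention(query, keys, values, block_size=8)
max_diff = max(abs(a - b) for a, b in zip(full, blocked))
print(max_diff)  # a value close to zero (floating-point rounding only)
```

The point of the sketch is that the blockwise version produces the same output as the all-at-once version, which is what allows the work to be split into pieces without changing the result.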

Addressing the Compute Bottleneck

Compute resources are one of the main challenges of doing LLM research. Most AI labs rely on large clusters of GPUs to train and test their models. Gradient teamed up with Crusoe to research long-context LLMs. Crusoe is creating a purpose-built AI cloud that can help its partners build and explore different models cost-efficiently.

Image: Crusoe’s L40S cluster, used for large-scale training and testing of LLMs

“The timing of this collaboration was interesting because we were bringing online an [Nvidia] L40S cluster,” said Ethan Petersen, Senior Developer Advocate at Crusoe. “Typically when people think about those chips, they think about them in terms of inference and we wanted to showcase that we’re able to do really large-scale training across these as well as inference.”

Evaluating the Models

One of the key benchmarks for evaluating long context windows is the “needle in a haystack” test, in which a very specific piece of information is inserted into different parts of a long sequence of text and the model is then questioned about it.

Image: The “needle in a haystack” test, used to evaluate long-context windows in LLMs

“Our models get near perfect needle-in-a-haystack performance up to around 2 million context length, and that kind of puts us in the realm of what I’ve seen only from Gemini 1.5 Pro,” said Pekelis.
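The needle-in-a-haystack test described above can be sketched in a few lines. This is a simplified illustration rather than the harness Gradient used: the filler text, the needle, the `toy_model` stand-in, and all function names are assumptions. A real evaluation would call an actual LLM and sweep over many context lengths as well as insertion depths.

```python
import re


def build_haystack_prompt(filler_sentences, needle, depth, question):
    """Insert `needle` at a relative `depth` (0.0 = start, 1.0 = end) of the filler."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    position = round(depth * len(filler_sentences))
    sentences = filler_sentences[:position] + [needle] + filler_sentences[position:]
    return " ".join(sentences) + "\n\nQuestion: " + question


def run_needle_test(model, filler_sentences, needle, question, expected, depths):
    """Ask the model about the needle at each depth; record whether it answered correctly."""
    results = {}
    for depth in depths:
        prompt = build_haystack_prompt(filler_sentences, needle, depth, question)
        answer = model(prompt)
        results[depth] = expected.lower() in answer.lower()
    return results


def toy_model(prompt):
    """Stand-in for an LLM call: it only 'reads' the last 2,000 characters,
    mimicking a model whose context window is too short for the prompt."""
    visible = prompt[-2000:]
    match = re.search(r"passphrase is ([\w-]+)", visible)
    return match.group(1) if match else "I don't know"


# Hypothetical haystack and needle.
filler = [f"Filler sentence number {i}." for i in range(1000)]
needle = "The secret passphrase is blue-falcon-42."
question = "What is the secret passphrase?"

results = run_needle_test(
    toy_model, filler, needle, question,
    expected="blue-falcon-42", depths=[0.0, 0.5, 1.0],
)
print(results)  # prints: {0.0: False, 0.5: False, 1.0: True}
```

The short-context stand-in only finds the needle when it sits at the very end of the text. A model with near-perfect performance would return the correct answer at every depth and every tested length.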

Enterprise Applications

Pekelis believes that long-context open models will make it easier for more companies and developers to build LLM-based applications.

Image: Agentic systems, where one or more language models are put into multiple roles in a workflow, can do more with fewer calls

“Right now, there is a bit of a distance in between individual uses and applications of AI and language models and enterprise applications, which are lagging behind a little bit,” Pekelis said. “Just allowing language models to do more and to be able to put more in the context windows unlocks new applications.”

For example, with longer contexts, agentic systems can do more with fewer calls because they can process much more information with each request.

Long-context LLMs can also do things that would have otherwise required more complex data processing pipelines. One example is style transfer. Without long-context models, if you wanted a language model to mimic the writing style of a person, you would have to first gather data from different sources. Then you would have to preprocess and summarize the data and figure out a way to feed it into the model or possibly fine-tune the model.

“Here, what we found is that, for example, you can just take all of my past emails and give it to the language model, and it learns how to write like me,” Pekelis said.
