Breaking Down Barriers: Integrating Graph Modality in Large Language Models for Enhanced Instruction Following
=====================================================================================================================

Large Language Models (LLMs) have revolutionized the field of artificial intelligence, primarily due to their exceptional generative capabilities and ability to follow user instructions effectively. These features make LLMs ideal for developing chatbots that interact seamlessly with users. However, the text-based nature of LLMs has limited chatbots to text-only interactions.

Integrating AI capabilities for enhanced user experience

In recent years, significant efforts have been made to extend LLMs to multimodal inputs, particularly image, video, and graph data. Graph structures, such as those describing programmable logic controllers (PLCs) and Computer-Aided Design (CAD) models, are especially important in industrial applications. Integrating graphs into LLMs is difficult because graphs are permutation-invariant (the order in which nodes are listed carries no meaning) and relational, whereas LLMs consume ordered sequences of tokens.

“The integration of graph modality in LLMs is a crucial step towards developing more versatile and intelligent AI systems.”

One natural approach to integrating graph data into LLMs is to leverage LLMs’ understanding of structured input by representing graphs or subgraphs as text. This method takes advantage of in-context learning and requires minimal training. However, performance with textual graph representations often degrades as the graph grows, since the serialized graph takes up more and more of the prompt. Alternative methods use learned embedding representations for node features or entire graphs, but these approaches have limitations of their own.
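To make the graph-to-text idea concrete, here is a minimal sketch of how a small graph might be serialized into a prompt. The serialization format and the helper names (`graph_to_text`, `build_prompt`, `chain`) are assumptions made for illustration; the article does not specify a format. The loop at the end shows why this approach becomes costly: the text grows with the number of nodes and edges.

```python
def graph_to_text(nodes, edges):
    """Serialize a graph as plain text lines that can be placed in a prompt.

    nodes: dict mapping node id -> label (hypothetical format)
    edges: list of (source id, target id) pairs
    """
    lines = ["Nodes:"]
    for node_id, label in sorted(nodes.items()):
        lines.append(f"  {node_id}: {label}")
    lines.append("Edges:")
    for src, dst in edges:
        lines.append(f"  {src} -> {dst}")
    return "\n".join(lines)


def build_prompt(nodes, edges, instruction):
    """Prepend the serialized graph to the user instruction."""
    return f"{graph_to_text(nodes, edges)}\n\nInstruction: {instruction}"


def chain(n):
    """Build a simple chain graph with n nodes, used only to measure growth."""
    nodes = {i: f"n{i}" for i in range(n)}
    edges = [(i, i + 1) for i in range(n - 1)]
    return nodes, edges


nodes = {0: "sensor", 1: "controller", 2: "valve"}
edges = [(0, 1), (1, 2)]
prompt = build_prompt(nodes, edges, "Which node drives the valve?")
print(prompt)

# The serialized text (and so the prompt) grows with the graph.
for n in (10, 100, 1000):
    ns, es = chain(n)
    print(n, "nodes ->", len(graph_to_text(ns, es)), "characters")
```

Nothing is trained here, which is the appeal of the approach, but every additional node and edge consumes context that the model must read before it reaches the instruction.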

Graph integration in LLMs: A novel approach

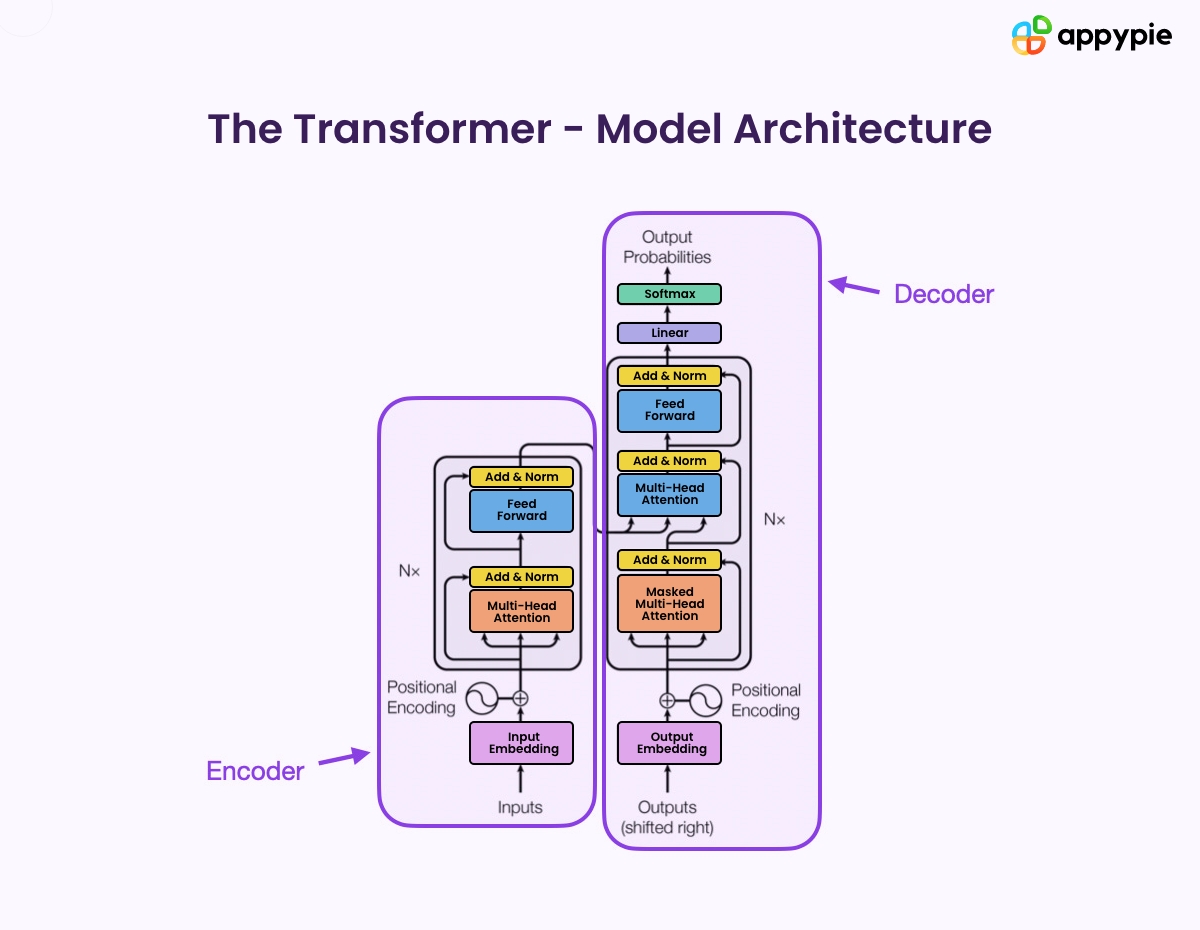

This paper from Siemens researchers introduces a method for graph instruction tuning of LLMs: the models are fine-tuned on instruction-following tasks while being given graph understanding capabilities. Inspired by the success of previous works and their scalability to modern architectures, the method converts each graph into a fixed number of embeddings. These embeddings are then injected into the LLM alongside the user instruction.

The LLM is trained to interpret the graph embeddings and use them to generate accurate responses to user queries. This approach outperforms the graph-to-text method and maintains performance regardless of graph size. Additionally, it operates at the embedding layer, making it agnostic to the LLM architecture used as the backbone, thus offering greater scalability.
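The idea of turning a graph of any size into a fixed number of embeddings and placing them next to the instruction can be sketched as follows. This is not the paper's architecture, which the article does not describe in detail; it is a stand-in that uses one round of neighbor averaging followed by cross-attention pooling with a fixed set of query vectors. All names, dimensions, and the randomly initialized weights (which would be learned in practice) are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

D_NODE = 8            # node feature size (assumed)
D_MODEL = 16          # LLM embedding width (assumed)
NUM_GRAPH_TOKENS = 4  # fixed number of graph embeddings (assumed)

# Random stand-ins for parameters that would be trained.
W_node = rng.normal(size=(D_NODE, D_MODEL)) / np.sqrt(D_NODE)
queries = rng.normal(size=(NUM_GRAPH_TOKENS, D_MODEL))


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def encode_graph(node_feats, adj):
    """Map a graph with any number of nodes to NUM_GRAPH_TOKENS embeddings."""
    # One round of mean-neighbor message passing, with self-loops.
    a = adj + np.eye(adj.shape[0])
    h = (a / a.sum(axis=1, keepdims=True)) @ node_feats @ W_node  # (n, D_MODEL)
    # Cross-attention pooling: each query vector attends over all nodes.
    attn = softmax(queries @ h.T / np.sqrt(D_MODEL), axis=-1)     # (k, n)
    return attn @ h                                               # (k, D_MODEL)


def build_llm_input(graph_emb, instruction_emb):
    """Place graph embeddings in front of the instruction token embeddings."""
    return np.concatenate([graph_emb, instruction_emb], axis=0)


def ring(n):
    """Adjacency matrix of an undirected ring with n nodes (toy data)."""
    adj = np.zeros((n, n))
    idx = np.arange(n)
    adj[idx, (idx + 1) % n] = 1.0
    adj[(idx + 1) % n, idx] = 1.0
    return adj


# Graphs of different sizes yield the same number of embeddings.
small = encode_graph(rng.normal(size=(5, D_NODE)), ring(5))
large = encode_graph(rng.normal(size=(50, D_NODE)), ring(50))
print(small.shape, large.shape)

# Relabeling the nodes does not change the result (permutation invariance).
feats, adj = rng.normal(size=(7, D_NODE)), ring(7)
perm = rng.permutation(7)
same = np.allclose(encode_graph(feats, adj),
                   encode_graph(feats[perm], adj[perm][:, perm]))
print("permutation invariant:", same)

# Stand-in for the embedded tokens of a 6-token user instruction.
instruction_emb = rng.normal(size=(6, D_MODEL))
llm_input = build_llm_input(small, instruction_emb)
print(llm_input.shape)
```

Because the graph enters the model only as rows at the embedding layer, the same sketch would apply to any backbone that accepts a sequence of input embeddings, which is the architecture-agnostic property the article highlights. The cost to the LLM is a constant number of positions per graph, unlike the text serialization, whose length grows with the graph.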

The novel approach to graph instruction tuning of LLMs

The experimental results demonstrate that the proposed method significantly enhances LLMs’ ability to handle graph data. By converting graphs into embeddings and integrating them with user instructions, the model outperforms traditional graph-to-text approaches. The method also avoids the performance decay associated with larger graphs, so results stay consistent as graph size grows. Its independence from the underlying LLM architecture further highlights its potential for broad applicability.

In conclusion, integrating graph embeddings into LLMs represents a significant advancement in artificial intelligence. By addressing the limitations of previous methods and maintaining high performance across various graph sizes, this new approach offers a robust solution for enhancing LLMs with graph understanding capabilities. Future research can build on these findings to further refine the method and explore additional applications, ultimately contributing to developing more versatile and intelligent AI systems.

The future of AI: Enhanced capabilities and applications
