AWS Invests in LLMops: The Future of AI Assistants

As artificial intelligence continues to evolve, Amazon Web Services (AWS) is investing heavily in tools for LLMops, the practice of operationalizing and managing large language models (LLMs) and other foundation models. The move could change the way enterprises adopt and use generative AI chatbots.

The Need for LLMops

Currently, enterprises that want to build their own generative AI assistants with their own or someone else’s large language model face a complex and daunting task. This is where AWS comes in, with its commitment to making it easier for enterprises to adopt and manage LLMs.

AWS’s Investment in LLMops

According to Ankur Mehrotra, general manager of SageMaker at AWS, the company is investing heavily in building and adding new tools for LLMops to Amazon SageMaker, its machine learning and AI service. These capabilities will help enterprises manage various LLMs and ML models in production, allowing them to move fast and swap parts of models or entire models as they become available.

Modelling Scenarios

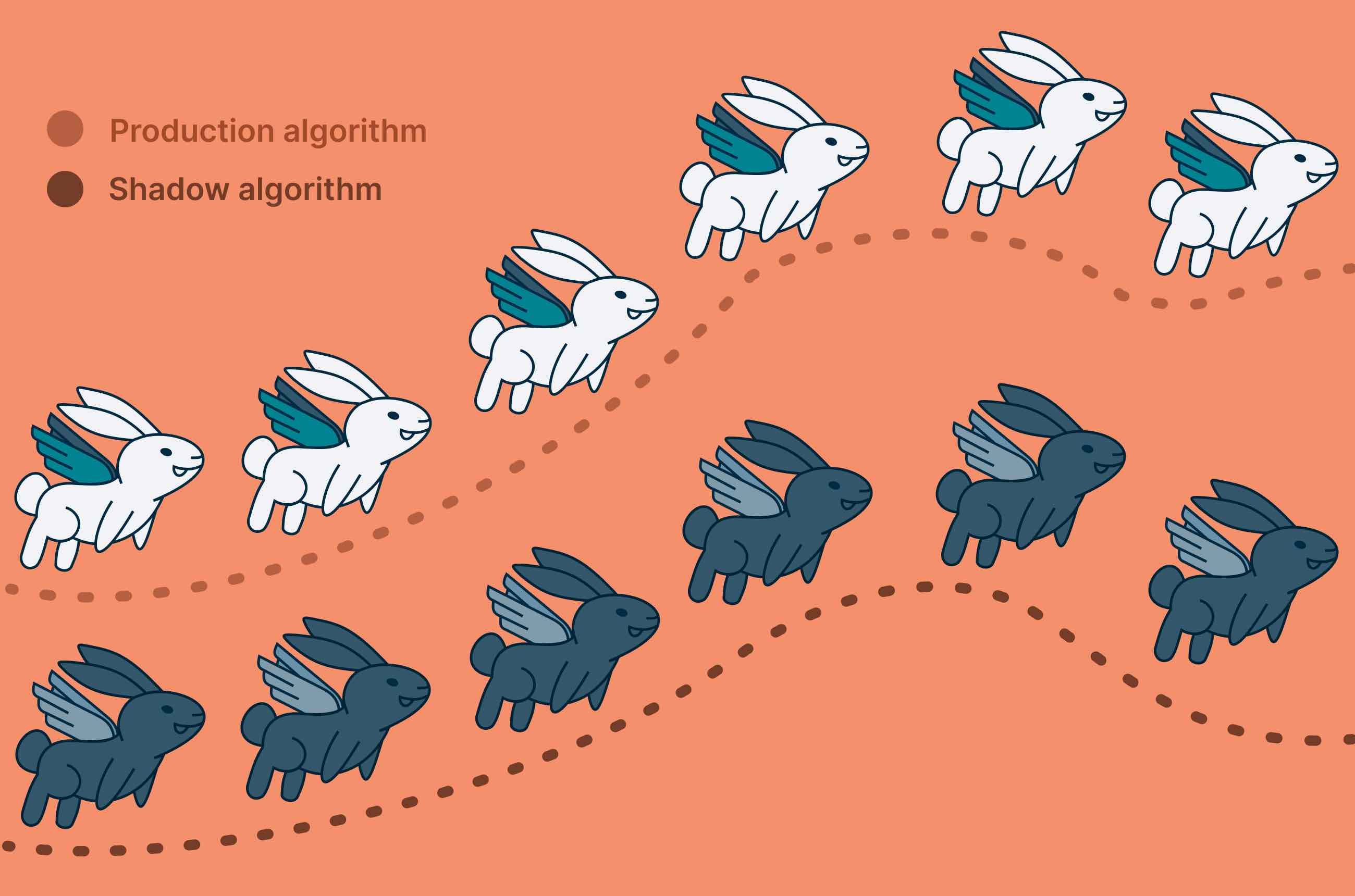

There are several scenarios in which enterprises will find these LLMops capabilities useful. One is when a new version of the model in use, or a different model that performs better for the use case, becomes available. Enterprises then need tools to assess the new model's performance and infrastructure requirements before it can be safely moved into production. This is where SageMaker tools such as shadow testing and Clarify can help.
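The idea behind shadow testing can be illustrated with a minimal sketch. This is not the SageMaker API: `serve_with_shadow`, `ShadowReport`, and the two toy models are hypothetical names invented for illustration. The sketch assumes that each request is answered by the production model while a copy is sent to the candidate model, whose output is only recorded for comparison.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Hypothetical stand-in for a deployed model endpoint: prompt in, text out.
Model = Callable[[str], str]


@dataclass
class ShadowReport:
    """Accumulates comparison statistics between production and shadow models."""
    total: int = 0
    matches: int = 0
    prod_latency_s: List[float] = field(default_factory=list)
    shadow_latency_s: List[float] = field(default_factory=list)

    @property
    def agreement_rate(self) -> float:
        return self.matches / self.total if self.total else 0.0


def _timed(model: Model, prompt: str) -> Tuple[str, float]:
    """Call a model and return its output with the elapsed wall-clock time."""
    start = time.perf_counter()
    output = model(prompt)
    return output, time.perf_counter() - start


def serve_with_shadow(prompt: str, production: Model, shadow: Model,
                      report: ShadowReport) -> str:
    """Answer with the production model; mirror the request to the shadow model."""
    prod_out, prod_t = _timed(production, prompt)

    shadow_out: Optional[str] = None
    try:
        shadow_out, shadow_t = _timed(shadow, prompt)
        report.shadow_latency_s.append(shadow_t)
    except Exception:
        # A failing shadow model must never affect what the user receives.
        pass

    report.total += 1
    report.prod_latency_s.append(prod_t)
    if shadow_out == prod_out:
        report.matches += 1
    return prod_out  # the shadow output is recorded, never returned


# Toy models (assumptions for the demo, not real LLMs).
def current_model(prompt: str) -> str:
    return prompt.upper()


def candidate_model(prompt: str) -> str:
    return prompt.upper() if len(prompt) < 6 else prompt.title()


report = ShadowReport()
answers = [serve_with_shadow(p, current_model, candidate_model, report)
           for p in ["hi", "hello", "good morning"]]
print(f"agreement: {report.matches}/{report.total}")
```

Exact string equality is a deliberately crude comparison. For generative models, a real evaluation would more likely rely on task-specific quality metrics alongside latency and error rates.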

Another scenario is when a model starts producing different or unwanted answers because the user input has shifted over time as the requirements of the use case change. Enterprises would then need to either fine-tune the model further or use retrieval-augmented generation (RAG).
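To make the RAG option concrete, here is a minimal sketch of the pattern: retrieve relevant text first, then place it in the prompt so the model can answer from up-to-date material without being retrained. Everything in it is an assumption for illustration. The documents are made up, `retrieve` and `build_prompt` are hypothetical helpers, and simple word overlap stands in for the embedding-based search a production system would more likely use. No model is actually called.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase a string and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents sharing at least one word with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), index, doc)
              for index, doc in enumerate(documents)]
    # Highest overlap first; ties keep the original document order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [doc for score, _, doc in scored[:k] if score > 0]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Build a prompt that grounds the question in the retrieved context."""
    context = retrieve(query, documents, k)
    context_block = ("\n".join(f"- {c}" for c in context)
                     or "- (no relevant context found)")
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}"
    )


# Hypothetical knowledge base and question, invented for the demo.
docs = [
    "Refunds are processed within 5 business days.",
    "Support is available on weekdays from 9 to 5.",
    "Shipping is free for orders above 50 dollars.",
]
query = "How long do refunds take?"
prompt = build_prompt(query, docs)
print(prompt)
```

The resulting prompt would then be sent to whichever LLM the enterprise uses. Updating the answers becomes a matter of updating the documents rather than the model weights.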

SageMaker’s Role in LLMops

SageMaker started out as a general AI platform, but AWS has recently been adding capabilities focused on generative AI. Last November, it introduced two new offerings, SageMaker HyperPod and SageMaker Inference, to help enterprises train and deploy LLMs efficiently.

The Rise of Generative AI

AWS has seen a sharp rise in demand for model training and model inferencing workloads in the last few months as enterprises look to use generative AI for productivity and code generation. The company reports approximately 10x growth over that period, with hundreds of thousands of customers now using SageMaker.

In conclusion, AWS’s investment in LLMops is set to revolutionize the way enterprises adopt and utilize generative AI chatbots. With its commitment to building and adding new tools for LLMops to Amazon SageMaker, AWS is paving the way for a future where AI assistants are more accessible and manageable than ever before.